Data Normalization in R

Data normalization is a vital data pre-processing technique, and you’ll learn about it in this tutorial.

Different numerical data columns may have vastly different ranges, which makes a direct comparison between them meaningless.

Normalization is a technique for bringing all data into a comparable range so that comparisons are more relevant.


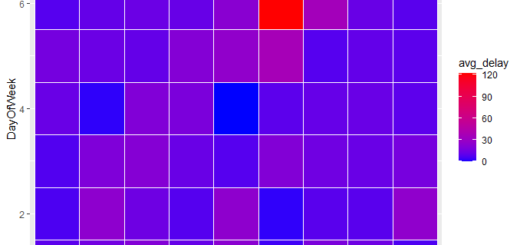

For example, suppose that the “ArrDelay” variable in a dataset ranges from -73 to 682.

To keep a wide-ranging variable like this from dominating the others, you might choose to normalize it. Normalization allows a fair comparison of the various features and ensures that they have a similar influence in computations.

It can also simplify some statistical analyses. Here’s another example to help you see why normalization is so crucial.


The following table illustrates a dataset with two variables, “age” and “income”, where “age” is a number between 0 and 100 and “income” is a number between 0 and 500,000.

| Age | Income |
|-----|--------|
| 20 | 100000 |
| 30 | 20000 |
| 40 | 500000 |

“Income” values are thousands of times larger than “age” values: income ranges from 20,000 to 500,000 dollars in this table, while age stays below 100. As a result, the ranges of these two characteristics are vastly different.

In subsequent analysis, such as linear regression, the attribute “income” can intrinsically influence the outcome more because of its larger values, even though this does not necessarily make it a more useful predictor. Simply because of the scale of the data, the model may end up favoring “income” over “age”.

You can avoid this by normalizing these two variables to values between 0 and 1.

| Age | Income |
|-----|--------|
| 0.2 | 0.2 |
| 0.3 | 0.04 |
| 0.4 | 1 |

After normalization, both variables have a similar influence on the models you develop later.
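A minimal R sketch that reproduces the normalized table, assuming (as the table implies) that age is divided by the upper bound of its range, 100, and income by the upper bound of its range, 500,000. The data frame names `df` and `df_scaled` are hypothetical.

```r
# Hypothetical data frame holding the example table
df <- data.frame(
  Age    = c(20, 30, 40),
  Income = c(100000, 20000, 500000)
)

# Divide each column by the upper bound of its stated range
# (0 to 100 for age, 0 to 500,000 for income)
df_scaled <- data.frame(
  Age    = df$Age / 100,
  Income = df$Income / 500000
)

print(df_scaled)
```

Dividing by the column's observed maximum instead, as simple feature scaling does, would turn the ages 20, 30 and 40 into 0.5, 0.75 and 1.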


Data can be normalized in a variety of ways.

1. Simple Feature Scaling

The “simple feature scaling” method divides each value by the feature’s maximum value. For non-negative data, the new values therefore range from 0 to 1.

2. Min-Max

“Min-Max” subtracts the feature’s minimum value from each value (X old) and divides the result by the feature’s range (the maximum minus the minimum). The new values are again in the range of 0 to 1.

3. Z-Score

“Z-score” is also known as the “standard score.” In this case, you subtract the feature’s mean (mu, μ) from each value and then divide the result by the feature’s standard deviation (sigma, σ).

The resulting values are centered on zero. For roughly normally distributed data they typically fall between -3 and +3, although unusual observations can lie outside that range.
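The three methods can be summarized as formulas, writing $x_{\text{old}}$ for an original value, $x_{\max}$ and $x_{\min}$ for the feature’s maximum and minimum, $\mu$ for its mean and $\sigma$ for its standard deviation:

$$
\begin{aligned}
x_{\text{new}} &= \frac{x_{\text{old}}}{x_{\max}} && \text{(simple feature scaling)}\\
x_{\text{new}} &= \frac{x_{\text{old}} - x_{\min}}{x_{\max} - x_{\min}} && \text{(Min-Max)}\\
x_{\text{new}} &= \frac{x_{\text{old}} - \mu}{\sigma} && \text{(Z-score)}
\end{aligned}
$$

For instance, applying Min-Max to the ages 20, 30 and 40 from the table maps 30 to $(30 - 20)/(40 - 20) = 0.5$.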

Now let’s get down to business and do some R programming.


Assume we have a numeric column variable “ArrDelay” and want to normalize it with each of the methods described above.

This example employs the simple feature scaling method, which divides each value in the “ArrDelay” feature by the feature’s maximum value.

You can do this with just one line of code using the max() function.

```r
# Simple feature scaling: divide each value by the feature's maximum
Featurescaling <- ArrDelay / max(ArrDelay)
```
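To try the one-liner above, here is a minimal sketch using a small hypothetical ArrDelay vector. The values are invented for illustration, with the endpoints borrowed from the -73 to 682 range mentioned earlier. Because the data contain a negative delay, the scaled values fall between about -0.11 and 1 rather than 0 and 1.

```r
# Hypothetical arrival delays in minutes (invented for illustration)
ArrDelay <- c(-73, 0, 15, 120, 682)

# Simple feature scaling: divide each value by the feature's maximum
Featurescaling <- ArrDelay / max(ArrDelay)

print(round(Featurescaling, 3))
```

With real flight data, ArrDelay often contains missing values; in that case max(ArrDelay) returns NA, and you need max(ArrDelay, na.rm = TRUE) instead.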

The next example applies the Min-Max approach to the “ArrDelay” feature.

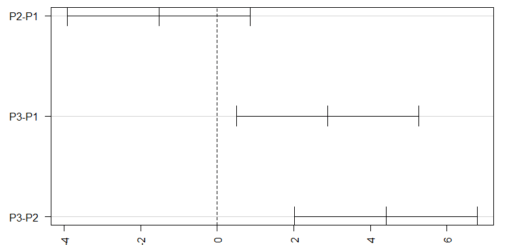


It subtracts the feature’s minimum from each value, then divides the result by the feature’s range: the maximum minus the minimum.

```r
# Min-Max: subtract the minimum, then divide by the range (max - min)
Minimax <- (ArrDelay - min(ArrDelay)) / (max(ArrDelay) - min(ArrDelay))
```
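A minimal sketch that wraps the Min-Max formula in a reusable function. The function name min_max and the sample vector are hypothetical, and the na.rm = TRUE default is an added assumption so that missing values are skipped when computing the minimum and maximum (they stay NA in the output).

```r
# Hypothetical helper implementing Min-Max normalization
min_max <- function(x, na.rm = TRUE) {
  x_min <- min(x, na.rm = na.rm)
  x_max <- max(x, na.rm = na.rm)
  (x - x_min) / (x_max - x_min)  # NA entries remain NA
}

# Hypothetical arrival delays, including one missing value
ArrDelay <- c(-73, 0, 15, NA, 682)

Minimax <- min_max(ArrDelay)
print(round(Minimax, 3))
```

Note that a constant column has a range of zero, so the division yields NaN; you may want to handle that case separately.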

Finally, this example normalizes the values with the Z-score method on the “ArrDelay” feature.

It applies the mean() and sd() (standard deviation) functions to the “ArrDelay” feature.


The mean() function returns the average of the feature’s values, while the sd() function returns their standard deviation (R’s sd() uses the sample, n - 1, denominator).

```r
# Z-score: subtract the mean, then divide by the standard deviation
zscale <- (ArrDelay - mean(ArrDelay)) / sd(ArrDelay)
```
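A short sketch, again on a hypothetical ArrDelay vector, that applies the z-score formula and cross-checks it against base R’s scale(). By default scale() centers by the mean and divides by the standard deviation; it returns a one-column matrix, so as.vector() is used before comparing.

```r
# Hypothetical arrival delays in minutes
ArrDelay <- c(-73, 0, 15, 120, 682)

# Z-score: subtract the mean, then divide by the standard deviation
zscale <- (ArrDelay - mean(ArrDelay)) / sd(ArrDelay)

# Base R's scale() centers and scales the same way by default
zscale_builtin <- as.vector(scale(ArrDelay))

print(round(zscale, 3))
print(all.equal(zscale, zscale_builtin))
```

After the transformation the values have a mean of (numerically) zero and a standard deviation of one, which is what makes features measured on different scales comparable.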

You learned in this tutorial that normalization is a technique for bringing all data into a comparable range so that comparisons are more relevant.

You learned about a handful of the most prevalent approaches for normalizing data: simple feature scaling, Min-Max, and Z-score.
