How to Split data into train and test in R

When using supervised learning algorithms such as linear regression, random forest, naïve Bayes classification, logistic regression, and decision trees, it is critical to partition the data into training and testing sets.

We first train the model on the observations in the training data set and then use it to predict the observations in the testing data set.

Splitting does not prevent overfitting by itself, but it lets us detect it: a model that scores well on the training data and poorly on the held-out test data has overfit.

Ultimately, we need a model that performs well on unseen data, so we use the test data to evaluate the trained model’s performance at the end.

First, we need to load some sample data for illustration purposes.

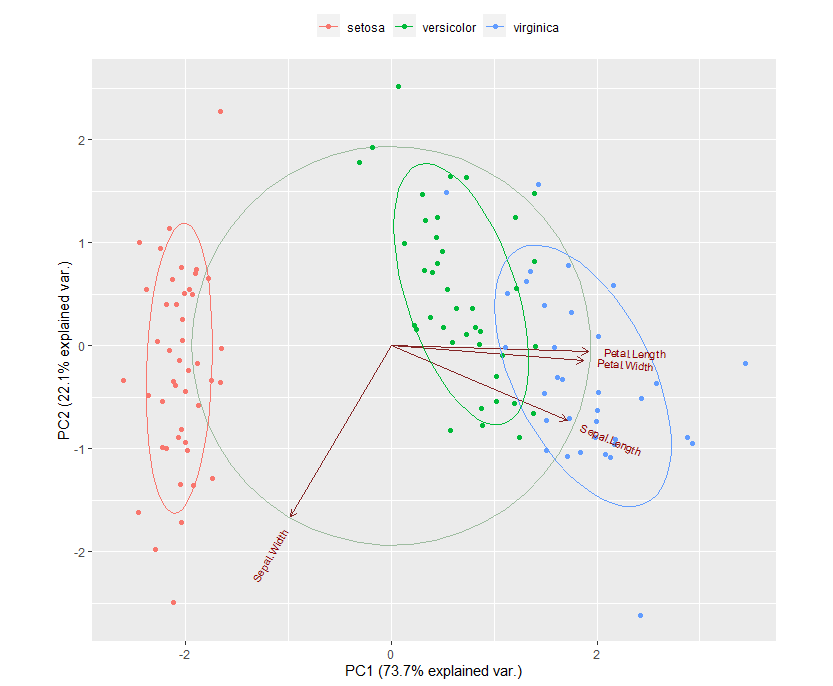

We can use the built-in iris data set for this.

To get the same output on every run, we set a seed with the set.seed function.

set.seed(123)
data <- iris

head(data)

  Sepal.Length Sepal.Width Petal.Length Petal.Width Species
1          5.1         3.5          1.4         0.2  setosa
2          4.9         3.0          1.4         0.2  setosa
3          4.7         3.2          1.3         0.2  setosa
4          4.6         3.1          1.5         0.2  setosa
5          5.0         3.6          1.4         0.2  setosa
6          5.4         3.9          1.7         0.4  setosa

dim(data)
[1] 150   5

The structure of our example data is shown in the RStudio console output above.

It has 150 rows and five columns: four numeric columns (Sepal.Length, Sepal.Width, Petal.Length, Petal.Width) and the Species column, which holds the class label.
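The column types can be confirmed directly. The following is a minimal sketch using base R only; the names col_classes and n_numeric are illustrative and not part of the original tutorial.

```r
# Inspect the built-in iris data
data <- iris

# Class of each column (numeric measurements plus a factor label)
col_classes <- sapply(data, class)
print(col_classes)

# Count the numeric columns
n_numeric <- sum(sapply(data, is.numeric))
print(n_numeric)

# The class labels stored in Species
print(levels(data$Species))
```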

Now we will split this data set.

Example: Split data into train and test in R

We will show you how to use the sample function in R to divide a data frame into training and test data.

To begin, we’ll create a dummy indicator that marks whether a row belongs to the training or the testing data set.

We use 70% of the rows for training and 30% for testing. This ratio is a common convention rather than a guarantee of the best accuracy; the right ratio depends on the size of the data set and on the model.
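The subset sizes implied by a 70/30 split can be worked out before sampling. This is a small sketch, assuming a training share of 0.7; the names train_frac, n_train, and n_test are illustrative. Rounding explicitly is a defensive choice, because rep() truncates non-integer counts and a product such as 0.7 * n is not always a whole number.

```r
n <- nrow(iris)                    # number of rows in the data
train_frac <- 0.7                  # assumed share of rows used for training

n_train <- round(train_frac * n)   # whole number of training rows
n_test <- n - n_train              # the remaining rows go to the test set

print(c(train = n_train, test = n_test))
```

Computing n_test as the remainder guarantees that the two sizes always add up to the number of rows.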

split1 <- sample(c(rep(0, 0.7 * nrow(data)), rep(1, 0.3 * nrow(data))))
split1

  [1] 1 1 0 0 1 0 1 0 0 0 0 0 1 1 1 1 0 0 0 0 1 0 0 1 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 1 0 0 0 1
 [48] 0 1 1 0 1 0 1 1 0 0 0 0 0 1 0 0 0 0 0 0 0 1 1 0 0 0 0 0 0 0 0 1 0 0 0 1 0 0 0 0 1 1 0 0 0 0 0
 [95] 0 1 0 1 1 0 0 0 0 1 0 0 0 1 1 0 0 0 1 0 0 1 0 0 0 1 0 1 0 0 0 0 0 1 1 0 0 1 0 0 0 0 0 0 1 0 0
[142] 1 0 1 0 0 0 1 1 1

Set a seed before creating your training and test sets, as we did at the start with set.seed(123). The seed initializes R’s random number generator.

The main benefit of setting a seed is that you get the same sequence of random numbers every time you run the random number generator from that seed, so the split is reproducible.
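The effect of the seed can be checked with a short sketch. The variable names below are illustrative; only set.seed, sample, and identical from base R are used.

```r
# Draw a random permutation after setting the seed
set.seed(123)
first_draw <- sample(1:10)

# Reset the same seed and draw again
set.seed(123)
second_draw <- sample(1:10)

# Both draws are the same sequence
identical(first_draw, second_draw)   # TRUE

# Without resetting the seed, the generator simply continues its stream
third_draw <- sample(1:10)
```

The seed has to be set again each time the same sequence is wanted; a later call without reseeding continues from wherever the generator stopped.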

Now we can verify the split by counting the values in split1.

table(split1)

  0   1
105  45

As the table shows, 105 observations are assigned to the training data (indicator 0) and 45 observations are assigned to the testing data (indicator 1).

Now we can create the training and test data sets separately.

train <- data[split1 == 0, ]

Let’s have a look at the first rows of our training data:

head(train)

   Sepal.Length Sepal.Width Petal.Length Petal.Width Species
3           4.7         3.2          1.3         0.2  setosa
4           4.6         3.1          1.5         0.2  setosa
6           5.4         3.9          1.7         0.4  setosa
8           5.0         3.4          1.5         0.2  setosa
9           4.4         2.9          1.4         0.2  setosa
10          4.9         3.1          1.5         0.1  setosa

dim(train)
[1] 105   5

Rows 3, 4, 6, 8, 9, 10 were assigned to the training data, as seen in the previous RStudio console output.

We can define our test data in the same way.

test <- data[split1 == 1, ]

Let’s also print the first rows of the test data:

head(test)

   Sepal.Length Sepal.Width Petal.Length Petal.Width Species
1           5.1         3.5          1.4         0.2  setosa
2           4.9         3.0          1.4         0.2  setosa
5           5.0         3.6          1.4         0.2  setosa
7           4.6         3.4          1.4         0.3  setosa
13          4.8         3.0          1.4         0.1  setosa
14          4.3         3.0          1.1         0.1  setosa

Now we can check the dimensions of the test data:

dim(test)
[1] 45  5

Both subsets look as expected. The training and test data sets can now be used to run statistical procedures such as machine learning algorithms.
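As an illustration of that last step, here is a minimal, self-contained sketch that fits a model on the training rows and evaluates it on the test rows. It makes two assumptions that are not part of the tutorial: the split is drawn with row indices instead of a 0/1 indicator (so the selected rows differ from those shown above), and the model is a plain linear regression of Petal.Width on the other three measurements, chosen only because it needs no extra packages.

```r
set.seed(123)
data <- iris
n <- nrow(data)

# Index-based variant of the 70/30 split
train_idx <- sample(seq_len(n), size = round(0.7 * n))
train <- data[train_idx, ]
test <- data[-train_idx, ]

# Fit on the training data only (illustrative model choice)
fit <- lm(Petal.Width ~ Sepal.Length + Sepal.Width + Petal.Length,
          data = train)

# Predict the held-out rows and compare the errors
pred <- predict(fit, newdata = test)
rmse_train <- sqrt(mean(residuals(fit)^2))
rmse_test <- sqrt(mean((test$Petal.Width - pred)^2))

print(c(train = rmse_train, test = rmse_test))
```

A test error much larger than the training error would be a sign of overfitting; the test rows must never be used while fitting.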

Please note that, for better model accuracy, class imbalance issues should be avoided: check that every class is adequately represented in both the training and the test data.
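One way to keep the class proportions the same in both subsets is a stratified split, where the 70% sample is drawn within each class. The sketch below is one possible base R approach, not the method used above; it does not fix an imbalance that already exists in the data, it only prevents the split from making it worse.

```r
set.seed(123)
data <- iris

# Row indices grouped by class
idx_by_class <- split(seq_len(nrow(data)), data$Species)

# Within each class, draw 70% of the row indices for training
train_idx <- unlist(
  lapply(idx_by_class, function(idx) {
    idx[sample.int(length(idx), size = round(0.7 * length(idx)))]
  }),
  use.names = FALSE
)

train <- data[train_idx, ]
test <- data[-train_idx, ]

# Class counts in each subset
print(table(train$Species))
print(table(test$Species))
```

Indexing with sample.int(length(idx), ...) avoids the surprise that sample(idx, ...) treats a single number as the range 1:idx when a class has only one row.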

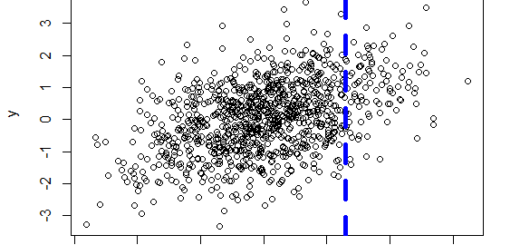

Many data sets do not consist of independent observations but exhibit grouping structure or spatial, temporal, or phylogenetic autocorrelation. In these cases, the random splitting proposed above will yield overly optimistic estimates of a model’s predictive performance.

Several recent papers review (Roberts et al. 2017, Ecography) or demonstrate (Ploton et al. 2020, Nature Communications) the stark effect that such erroneous random splitting can have.

Conflict of interest: I am a co-author on both of these papers.

Thanks, Carsten, for the valuable information.
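For data with a grouping structure of the kind described in the comment above, one simple option is to split by group, so that all rows of a group land in the same subset. The sketch below is only an illustration: iris has no real grouping, so the group_id column is invented here (10 hypothetical groups of 15 consecutive rows). Spatial, temporal, or phylogenetic dependence usually needs a dedicated blocking strategy, which this sketch does not cover.

```r
set.seed(123)
data <- iris

# Hypothetical grouping variable, invented for illustration only
data$group_id <- rep(1:10, each = 15)

# Sample 70% of the groups, not 70% of the rows
groups <- unique(data$group_id)
train_groups <- sample(groups, size = round(0.7 * length(groups)))

train <- data[data$group_id %in% train_groups, ]
test <- data[!(data$group_id %in% train_groups), ]

# No group appears in both subsets
length(intersect(train$group_id, test$group_id))   # 0
```

Because whole groups are held out, the test error reflects how the model performs on groups it has never seen.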