Importance of Data Cleaning in Machine Learning

This post will show you how to perform data cleaning to get your dataset in top condition.

Data cleansing is essential because, regardless of how sophisticated your ML algorithm is, you can’t obtain good results from bad data.

Depending on the dataset, different procedures and methods will be used to clean the data.

As a result, no single guide could possibly address every situation you might encounter.

But this guide offers a consistent, dependable basis for getting started.

Let’s get going!

Better Data Beats Fancier Algorithms

Data cleaning is one of those things that everyone does but hardly anyone talks about. It’s true that this aspect of machine learning isn’t the “sexiest.” And no, there aren’t any hidden tricks or secrets to uncover.

But data cleaning done correctly can make or break your project. Professional data scientists typically spend a significant share of their time on this stage.

In other words, you get out what you put in. Please keep this point in mind even if you forget everything else from this post.

Even simple algorithms can extract stunning insights from the data when it has been properly cleaned.

It goes without saying that different types of data will require different methods of cleaning.

The systematic approach outlined in this guide can, however, always serve as a solid starting point.

Remove Unwanted Observations

The first step in cleaning your data is to remove unwanted observations from your dataset. Specifically, you should eliminate duplicate and irrelevant observations.

Duplicate Observations

It’s crucial to eliminate duplicate observations since you don’t want them to skew your findings or models. Duplicates most often arise during data collection, such as when you:

- Combine data from multiple sources

- Scrape data

- Obtain data from clients or other departments
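
As a rough illustration of removing exact duplicates, here is a minimal sketch in plain Python. The `listings` records and the `drop_duplicates` helper are hypothetical names invented for this example; in practice you would likely use whatever dataframe library your project already relies on.

```python
# Hypothetical records; the column names are assumptions for illustration.
listings = [
    {"id": 1, "city": "Austin", "price": 350000},
    {"id": 2, "city": "Dallas", "price": 420000},
    {"id": 1, "city": "Austin", "price": 350000},  # exact duplicate of the first row
]


def drop_duplicates(rows):
    """Return rows with exact duplicates removed, keeping the first occurrence."""
    seen = set()
    unique = []
    for row in rows:
        # A hashable fingerprint of the whole row, independent of key order.
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


deduped = drop_duplicates(listings)
print(len(listings), "rows before,", len(deduped), "rows after")
```

This only catches rows that match on every field. Near-duplicates (for example, the same record with different capitalization) need the structural fixes discussed later in this post first.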

Irrelevant Observations

Observations that don’t directly relate to the problem you’re seeking to address are considered irrelevant.

For instance, you wouldn’t include observations for apartments in a model that was intended just for single-family homes.

This is a good time to review the charts you created during exploratory analysis. Check the distribution charts for your categorical features to see whether any classes are present that shouldn’t be.

Check for irrelevant observations before you engineer features. This will spare you a lot of trouble later.
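
The check described above can be sketched in a few lines of plain Python. The `homes` records, the `property_type` column, and the class names are assumptions made up for this example; the counts stand in for a distribution chart.

```python
from collections import Counter

# Hypothetical records for a model intended only for single-family homes.
homes = [
    {"property_type": "Single-Family", "price": 350000},
    {"property_type": "Apartment", "price": 180000},
    {"property_type": "Single-Family", "price": 410000},
]

# Text equivalent of a distribution chart: how often does each class occur?
type_counts = Counter(row["property_type"] for row in homes)
print(type_counts)

# Keep only the observations that are relevant to the problem.
relevant = [row for row in homes if row["property_type"] == "Single-Family"]
print(len(relevant), "relevant observations kept")
```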

Fix Structural Errors

The next category of data cleaning is fixing structural errors. Structural errors arise during measurement, data transfer, or other instances of “poor housekeeping.”

For instance, you can look for typos or inconsistent capitalization. This is mostly a concern for categorical features, and you can check for it by looking at your bar plots.

Here’s an example:

Structural Errors Before

Although they are listed as two separate classes, “composition” and “Composition” both refer to the same topic.

Likewise for “asphalt” and “Asphalt.”

“Shake Shingle” and “shake-shingle” are equivalent as well.

Last but not least, “asphalt, shake-shingle” could probably just be combined with “Shake Shingle.”

The class distribution looks considerably cleaner after the typos and inconsistent capitalization have been fixed:

Structural Errors After

Finally, look for mislabeled classes, that is, separate classes that should really be the same. None appear in the dataset above, but watch out for abbreviations and alternate wordings.

For instance, you should combine the classes “N/A” and “Not Applicable” if they appear as two different classes.

Likewise, the classes “IT” and “information technology” should be combined.
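
One way to apply these fixes to the roof example above is sketched below in plain Python. The `roof_raw` values mirror the classes named in this post, but the `clean_label` helper and the `MERGE` table are hypothetical, and which classes to merge is a judgment call you should make for your own data.

```python
from collections import Counter

# Raw class labels as they might appear before cleaning.
roof_raw = [
    "composition", "Composition",
    "asphalt", "Asphalt",
    "Shake Shingle", "shake-shingle",
    "asphalt, shake-shingle",
]

# Explicit merges applied after normalization (an assumption for this example).
MERGE = {
    "Asphalt, Shake Shingle": "Shake Shingle",
}


def clean_label(label):
    """Normalize whitespace, hyphens, and capitalization, then merge classes."""
    normalized = label.strip().replace("-", " ").title()
    return MERGE.get(normalized, normalized)


roof_clean = [clean_label(label) for label in roof_raw]
roof_counts = Counter(roof_clean)
print(roof_counts)
```

Keeping the merges in an explicit table makes every decision visible and easy to review, which matters more than cleverness here.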

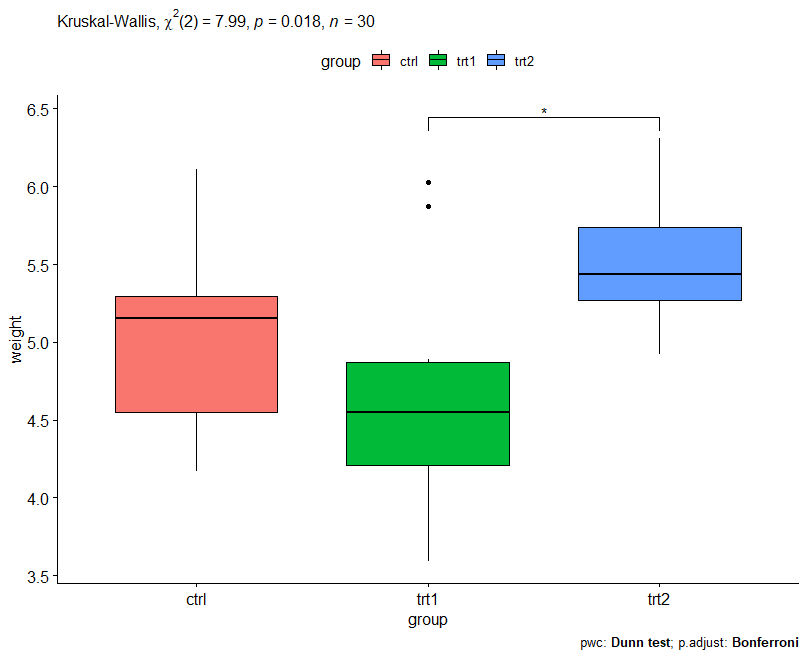

Removing Unwanted Outliers

Outliers can cause problems with some types of models. For instance, linear regression models are less robust to outliers than decision tree models.

In general, if you have a legitimate reason to remove an outlier, doing so will improve the performance of your model.

Outliers, however, are presumed innocent until proven guilty. An outlier should never be removed merely because it is a “large number.”

That large number might be quite informative for your model.

We cannot emphasize this enough: you must have a valid justification for removing an outlier, such as suspicious readings that are unlikely to be real data.
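
As a minimal sketch of this principle, the plain-Python example below removes only readings that cannot be real and keeps a value that is merely large. The `sqft` values and the plausibility rule are assumptions for illustration; a real rule should come from domain knowledge about how the data was collected.

```python
# Hypothetical living-area measurements in square feet.
sqft = [1450, 2100, -1, 1875, 0, 12500]


def is_plausible(value):
    """A living area must be positive; large values are deliberately kept."""
    return value > 0


kept = [v for v in sqft if is_plausible(v)]
removed = [v for v in sqft if not is_plausible(v)]

print("kept:", kept)
print("removed:", removed)
```

Note that 12500 stays in the data. It is unusual, but nothing about it suggests a measurement error.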

Handle Missing Data

A deceptively challenging problem in applied machine learning is missing data.

Just so everyone is on the same page, missing values in your dataset cannot just be ignored.

For the very practical reason that most algorithms do not accept missing values, you must handle them in some way.

Unfortunately, the two approaches most frequently recommended for handling missing data are actually terrible. At best, they are ineffective.

At worst, they can completely ruin your results.

The two approaches are:

- Dropping observations that have missing values

- Imputing the missing values based on other observations

Dropping missing values is not ideal because you also lose information when you drop observations. The fact that a value is missing might be informative in itself.

Additionally, in the real world you frequently need to make predictions on new data even when some features are missing!

Imputing missing values is not ideal either, because the value was originally missing and you filled it in.

No matter how sophisticated your imputation method is, this also results in a loss of information.

Even if you build an imputation model, all you are doing is reinforcing the patterns that other features have already provided.

Missing Data Puzzle Pieces

Missing data is like a missing piece of a puzzle. Dropping it is like pretending the puzzle slot doesn’t exist.

In summary, “missingness” is almost always informative in itself, so you should tell your algorithm when a value was originally missing.

How then can you achieve that?

For categorical features, the best course of action is simply to label missing values as “Missing”!

In essence, you’re creating a new class for the feature. This tells the algorithm that the value was missing.

It also satisfies the technical requirement that there be no missing values.
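
A minimal plain-Python sketch of this labeling step follows. The `garage_types` values and the `label_missing` helper are hypothetical, and treating empty strings as missing is an assumption that may not hold for every dataset.

```python
# Hypothetical categorical feature with gaps stored as None or empty strings.
garage_types = ["Attached", None, "Detached", "", "Attached"]


def label_missing(value, placeholder="Missing"):
    """Return the placeholder class for absent values, else the value itself."""
    if value is None or value.strip() == "":
        return placeholder
    return value


garage_clean = [label_missing(value) for value in garage_types]
print(garage_clean)
```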

Missing Numeric Data

For missing numeric data, you should flag and fill the values.

Flag the observation with an indicator variable of missingness.

Then, to meet the technical requirement of having no missing values, fill the original missing value with 0.

By using this flag-and-fill method, as opposed to only filling the value in with the mean or a predicted mean, you are effectively letting the algorithm estimate the optimal constant for missingness.
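
The flag-and-fill steps above can be sketched in plain Python as follows. The `rows` records, the `lot_size` column, the `_missing` suffix, and the `flag_and_fill` helper are all hypothetical names chosen for this example.

```python
import math

# Hypothetical numeric feature with gaps stored as None or NaN.
rows = [
    {"lot_size": 5200.0},
    {"lot_size": None},
    {"lot_size": float("nan")},
    {"lot_size": 7400.0},
]


def flag_and_fill(records, column, fill_value=0.0):
    """Add a 0/1 missingness indicator and fill the gaps with fill_value."""
    result = []
    for record in records:
        new_record = dict(record)  # leave the input untouched
        value = record.get(column)
        is_missing = value is None or (isinstance(value, float) and math.isnan(value))
        new_record[column + "_missing"] = 1 if is_missing else 0
        if is_missing:
            new_record[column] = fill_value
        result.append(new_record)
    return result


flagged = flag_and_fill(rows, "lot_size")
for record in flagged:
    print(record)
```

With the indicator column present, a model can learn its own adjustment for observations where the value was filled with 0 rather than treating that 0 as a real measurement.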

After properly cleaning the data, you’ll have a robust dataset that avoids many of the most common pitfalls.

Don’t rush this process, because it can save you from a lot of headaches later.

The data cleaning stage of the machine learning workflow is now complete.