Bagging in Machine Learning Guide

When the relationship between a set of predictor variables and a response variable is linear, we can model it using methods such as multiple linear regression.

When the relationship is more complex, however, we often need to use non-linear approaches.

Classification and regression trees (commonly abbreviated CART) are methods that use a set of predictor variables to build decision trees that predict the value of a response variable.


The drawback of CART models is that they tend to have high variance. That is, if we split a dataset into two halves and fit a decision tree to each half, the results could be substantially different.

Bagging, also known as bootstrap aggregating, is one strategy for reducing the variance of CART models.

What is the definition of Bagging in Machine Learning?

When we build a single decision tree, we use only one training dataset.

Bagging, on the other hand, employs the following strategy:

1. Take X bootstrapped samples from the original dataset.

A bootstrapped sample is a sample of the same size as the original dataset, drawn from it with replacement, so some observations appear more than once and others do not appear at all.

2. For each bootstrapped sample, create a decision tree.

3. Build a final model by combining the predictions of all X trees.

For regression trees, we take the average of the X trees’ predictions.


For classification trees, we take the most frequently occurring prediction among the X trees (a majority vote).
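The three steps above can be sketched in plain Python as below. This is a minimal illustration, not the method of any particular library. To keep it short, the base learner `fit_stump` is a hypothetical one-split regression tree on a single numeric feature, whereas bagging normally uses deep, unpruned trees. The helper names `bootstrap_sample`, `bag`, `predict_regression` and `predict_classification` are likewise illustrative.

```python
import random
import statistics
from collections import Counter

def bootstrap_sample(data, rng):
    """Step 1: draw len(data) observations from data with replacement."""
    return [rng.choice(data) for _ in range(len(data))]

def fit_stump(sample):
    """Hypothetical base learner: a one-split regression tree on (x, y) pairs.
    Real bagging would grow a deep, unpruned tree here instead."""
    best = None
    for t in sorted({x for x, _ in sample}):
        left = [y for x, y in sample if x <= t]
        right = [y for x, y in sample if x > t]
        if not right:
            continue  # a split must leave observations on both sides
        ml, mr = statistics.fmean(left), statistics.fmean(right)
        rss = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or rss < best[0]:
            best = (rss, t, ml, mr)
    if best is None:  # every x in the sample is identical: predict the mean
        mean = statistics.fmean([y for _, y in sample])
        return lambda x: mean
    _, t, ml, mr = best
    return lambda x: ml if x <= t else mr

def bag(data, n_trees, fit, seed=0):
    """Steps 1 and 2: fit one model per bootstrapped sample."""
    rng = random.Random(seed)
    return [fit(bootstrap_sample(data, rng)) for _ in range(n_trees)]

def predict_regression(models, x):
    """Step 3 (regression): average the individual predictions."""
    return statistics.fmean([m(x) for m in models])

def predict_classification(models, x):
    """Step 3 (classification): return the most common predicted class."""
    return Counter(m(x) for m in models).most_common(1)[0][0]

# Usage: noise-free toy data with y = 2x
data = [(x, 2.0 * x) for x in range(10)]
models = bag(data, n_trees=25, fit=fit_stump, seed=1)
print(predict_regression(models, 0), predict_regression(models, 9))
```

Passing the base learner in as `fit` mirrors the point made below that bagging can wrap any learning method; only the aggregation step differs between regression (averaging) and classification (voting).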

Bagging can be used with any machine learning method, but it’s especially effective for decision trees: they have intrinsically high variance, which bagging can drastically reduce, resulting in lower test error.

To apply bagging to decision trees, we grow X individual trees deep, without pruning them. As a result, each individual tree has high variance but low bias.

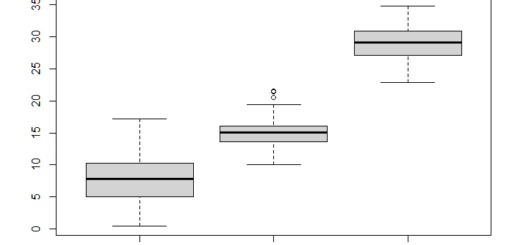

Averaging the predictions of these trees then reduces the variance. In practice, good performance is typically achieved with 50 to 500 trees, although a final model may be built with thousands of trees.
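To see why averaging helps, suppose, as an idealization, that the X trees’ predictions $\hat{f}_1(x), \dots, \hat{f}_X(x)$ at a point $x$ each have variance $\sigma^2$ and are independent. These symbols are introduced here for illustration. The variance of their average is then

$$\operatorname{Var}\left(\frac{1}{X}\sum_{b=1}^{X}\hat{f}_b(x)\right) = \frac{1}{X^2}\sum_{b=1}^{X}\operatorname{Var}\big(\hat{f}_b(x)\big) = \frac{\sigma^2}{X}.$$

In practice the bootstrapped samples overlap, so the trees are not independent and the reduction is smaller than this formula suggests.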


Keep in mind that fitting more trees will necessitate more processing power, which may or may not be an issue depending on the dataset’s size.

Out-of-Bag Error Estimation

It turns out that we can estimate a bagged model’s test error without using k-fold cross-validation.

The reason is that, on average, each bootstrapped sample contains about 2/3 of the original dataset’s observations. The remaining 1/3, which were not used to fit a given bagged tree, are called that tree’s out-of-bag (OOB) observations.
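The 2/3 figure follows from a short calculation. A bootstrapped sample of size $n$ is built from $n$ independent draws with replacement, and on each draw a given observation is missed with probability $1 - 1/n$, so

$$P(\text{observation } i \text{ is OOB}) = \left(1 - \frac{1}{n}\right)^{n} \longrightarrow e^{-1} \approx 0.368 \quad \text{as } n \to \infty.$$

Each bootstrapped sample therefore contains about $1 - e^{-1} \approx 63.2\%$ of the distinct original observations, which is where the "approximately 2/3" comes from.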

To predict the value of the ith observation in the original dataset, we average the predictions of all the trees for which that observation was OOB (for classification, we take a majority vote instead).

Using this method, we can make an OOB prediction for each of the n observations in the original dataset and compute an error rate from them, which is a valid estimate of the test error.
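A minimal sketch of OOB error estimation for regression, in plain Python. It is illustrative only: `fit_stump` is a hypothetical one-split regression tree standing in for the deep trees bagging normally uses, and `oob_mse` is a hypothetical helper that records, for every observation, the predictions of the trees whose bootstrapped sample did not include it, averages them, and computes the mean squared error.

```python
import random
import statistics

def fit_stump(sample):
    """Hypothetical base learner: a one-split regression tree on (x, y) pairs."""
    best = None
    for t in sorted({x for x, _ in sample}):
        left = [y for x, y in sample if x <= t]
        right = [y for x, y in sample if x > t]
        if not right:
            continue  # a split must leave observations on both sides
        ml, mr = statistics.fmean(left), statistics.fmean(right)
        rss = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or rss < best[0]:
            best = (rss, t, ml, mr)
    if best is None:  # every x in the sample is identical: predict the mean
        mean = statistics.fmean([y for _, y in sample])
        return lambda x: mean
    _, t, ml, mr = best
    return lambda x: ml if x <= t else mr

def oob_mse(data, n_trees, fit, seed=0):
    """Return (OOB mean squared error, number of observations that were
    OOB for at least one tree)."""
    rng = random.Random(seed)
    n = len(data)
    oob_preds = [[] for _ in range(n)]  # oob_preds[i]: predictions for obs i
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap by index
        model = fit([data[i] for i in idx])
        in_bag = set(idx)
        for i in range(n):
            if i not in in_bag:  # this tree never saw obs i
                oob_preds[i].append(model(data[i][0]))
    # Average each observation's OOB predictions, then score against the truth.
    errors = [(statistics.fmean(p) - data[i][1]) ** 2
              for i, p in enumerate(oob_preds) if p]
    if not errors:
        return float("nan"), 0
    return statistics.fmean(errors), len(errors)

# Usage on toy data: y = x plus a small deterministic wiggle
data = [(x, x + (1.0 if x % 2 else -1.0)) for x in range(20)]
mse, covered = oob_mse(data, n_trees=200, fit=fit_stump)
print(f"OOB MSE = {mse:.2f} using {covered} of {len(data)} observations")
```

Note that no model is refit here: the OOB estimate falls out of the same X fits used to build the bagged model.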


The advantage of this method over k-fold cross-validation is that it is significantly faster, especially when the dataset is large, because the bagged model does not have to be refit k times.

One of the advantages of decision trees is that they are simple to understand and visualize.

When we use bagging, we can no longer interpret or visualize the model as a single tree, because the final bagged model is the result of averaging many separate trees.

We gain predictive accuracy at the cost of interpretability. However, we can still measure the importance of each predictor variable by computing the total decrease in RSS (residual sum of squares) due to splits over that predictor, averaged across all X trees. The larger the value, the more important the predictor.

Similarly, for classification models, we can compute the total decrease in the Gini index due to splits over a given predictor, averaged across all X trees.


Again, the larger the value, the more important the predictor.

So, while we can’t precisely interpret a final bagged model, we can get a sense of how important each predictor variable is in predicting the response.
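The two importance measures described above can be sketched in plain Python as follows, under stated assumptions. `rss_decrease` and `gini_decrease` score a single split, with the Gini index weighted by node size (one common convention) so that it is a total over observations like RSS. `importance` is a hypothetical helper that expects each tree to be summarized as a list of `(feature, decrease)` records, one per split. The feature names in the usage example are made up.

```python
from collections import Counter, defaultdict

def rss(ys):
    """Residual sum of squares around the node mean."""
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)

def gini(labels):
    """Gini index of a node: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def rss_decrease(parent, left, right):
    """Drop in RSS when `parent` is split into `left` and `right` (regression)."""
    return rss(parent) - rss(left) - rss(right)

def gini_decrease(parent, left, right):
    """Drop in size-weighted Gini index for one split (classification)."""
    return (len(parent) * gini(parent)
            - len(left) * gini(left) - len(right) * gini(right))

def importance(trees):
    """Total each feature's decreases over all trees, then divide by the
    number of trees, i.e. the per-tree total averaged across all trees.
    `trees` is a list of trees, each a list of (feature, decrease) records."""
    totals = defaultdict(float)
    for splits in trees:
        for feature, decrease in splits:
            totals[feature] += decrease
    return {feature: total / len(trees) for feature, total in totals.items()}

# Usage with hand-made regression splits (hypothetical features "x1", "x2")
tree_a = [("x1", rss_decrease([1, 1, 5, 5], [1, 1], [5, 5])),  # 16.0
          ("x2", rss_decrease([1, 2, 3, 4], [1, 2], [3, 4]))]  # 4.0
tree_b = [("x1", rss_decrease([0, 4], [0], [4]))]             # 8.0
print(importance([tree_a, tree_b]))  # → {'x1': 12.0, 'x2': 2.0}
print(gini_decrease(list("aabb"), list("aa"), list("bb")))  # → 2.0
```

A feature that is never split on in a tree contributes zero for that tree, which is why the sum is divided by the total number of trees rather than by the number of trees that use the feature.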

Going Beyond Bagging

When compared to a single decision tree, the benefit of bagging is that it usually results in a lower test error rate.

The disadvantage is that if there is a very strong predictor in the dataset, the predictions from the collection of bagged trees can be strongly correlated.

In this situation, this predictor will be used for the initial split by most or all of the bagged trees, resulting in trees that are similar to one another and have highly correlated predictions.
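The effect of this correlation can be made precise with a standard identity. Assume, for simplicity, that the X trees’ predictions $\hat{f}_1(x), \dots, \hat{f}_X(x)$ each have variance $\sigma^2$ and that every pair has correlation $\rho$. Then

$$\operatorname{Var}\left(\frac{1}{X}\sum_{b=1}^{X}\hat{f}_b(x)\right) = \frac{1}{X^2}\left(X\sigma^2 + X(X-1)\rho\,\sigma^2\right) = \rho\,\sigma^2 + \frac{1-\rho}{X}\,\sigma^2.$$

Adding more trees only shrinks the second term. The first term, $\rho\sigma^2$, remains no matter how many trees are fitted, so strongly correlated trees limit how much bagging can reduce the variance.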

One way to get around this issue is to use random forests, which follow a process similar to bagging but consider only a random subset of the predictors at each split. This produces decorrelated trees, which frequently leads to lower test error rates.
