XGBoost in R for Enhanced Predictive Modeling

Boosting is an ensemble method that improves predictive models by combining many weak learners, typically shallow decision trees, into a single strong model, with each new learner trained to correct the errors of those before it.
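To make the idea concrete, here is a minimal sketch of boosting for squared-error loss in base R. It illustrates the general principle only and is not how XGBoost is implemented internally: each round fits a one-split tree (a "stump") to the current residuals and adds a shrunken copy of it to the running prediction. The helper names `fit_stump` and `predict_stump`, the built-in `mtcars` data, and the learning rate of 0.1 are assumptions made for this example.

```r
# Fit a one-split "stump": choose the threshold on x that minimizes the
# squared error when predicting the residuals r by a constant on each side.
fit_stump <- function(x, r) {
  xs <- sort(unique(x))
  thresholds <- (head(xs, -1) + tail(xs, -1)) / 2  # midpoints between distinct values
  best <- list(sse = Inf)
  for (threshold in thresholds) {
    left <- x <= threshold
    left_mean <- mean(r[left])
    right_mean <- mean(r[!left])
    sse <- sum((r[left] - left_mean)^2) + sum((r[!left] - right_mean)^2)
    if (sse < best$sse) {
      best <- list(sse = sse, threshold = threshold,
                   left = left_mean, right = right_mean)
    }
  }
  best
}

predict_stump <- function(stump, x) {
  ifelse(x <= stump$threshold, stump$left, stump$right)
}

x <- mtcars$wt    # single predictor: car weight
y <- mtcars$mpg   # target: miles per gallon
eta <- 0.1        # learning rate (shrinkage applied to each stump)

pred <- rep(mean(y), length(y))        # start from a constant prediction
rmse_start <- sqrt(mean((y - pred)^2))

for (round in 1:50) {
  stump <- fit_stump(x, y - pred)               # fit the current residuals
  pred <- pred + eta * predict_stump(stump, x)  # add a small correction
}

rmse_end <- sqrt(mean((y - pred)^2))
cat("Training RMSE before boosting:", rmse_start, "\n")
cat("Training RMSE after 50 rounds:", rmse_end, "\n")
```

The training error shrinks with every round, which is also why boosted models need safeguards such as shrinkage, regularization, and validation on held-out data.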

Among the many boosting techniques, XGBoost (Extreme Gradient Boosting) stands out as a superior choice due to its efficiency and flexibility.

In this comprehensive guide, we will explore how to use XGBoost in R, catering to both novices and experienced data scientists.

What Makes XGBoost Popular?

XGBoost has gained immense popularity in the data science community for several reasons:

- High Performance: It is optimized for speed and computational efficiency, making it ideal for handling large datasets.

- Regularization: XGBoost adds L1 (alpha) and L2 (lambda) penalties on leaf weights to its objective, which helps limit overfitting and improves generalization to new data.

- Cross-Validation Support: Built-in cross-validation functionality aids in accurate model evaluation and selection.
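As a rough illustration of the regularization point, the sketch below trains two small models on the Boston data (introduced later in this guide) with different `lambda` and `alpha` values. The helper `fit_with_penalty`, the variable `dall`, and the specific penalty values are assumptions chosen for the example, not recommended settings.

```r
library(xgboost)

data("Boston", package = "MASS")
X <- as.matrix(Boston[, setdiff(names(Boston), "medv")])
y <- Boston$medv
dall <- xgb.DMatrix(data = X, label = y)

# Train a small model with the given L2 (lambda) and L1 (alpha) penalties
# and return its RMSE on the training data.
fit_with_penalty <- function(lambda, alpha) {
  params <- list(
    objective = "reg:squarederror",
    eta = 0.1,
    max_depth = 4,
    lambda = lambda,  # L2 penalty on leaf weights (default 1)
    alpha = alpha     # L1 penalty on leaf weights (default 0)
  )
  model <- xgb.train(params = params, data = dall, nrounds = 50, verbose = 0)
  sqrt(mean((y - predict(model, dall))^2))
}

rmse_default <- fit_with_penalty(lambda = 1, alpha = 0)
rmse_strong <- fit_with_penalty(lambda = 50, alpha = 5)

cat("Training RMSE, default penalties:", rmse_default, "\n")
cat("Training RMSE, strong penalties: ", rmse_strong, "\n")
```

Stronger penalties typically raise the training error because the trees are pulled toward smaller leaf weights. Whether that trade-off helps on unseen data has to be checked with a held-out set or cross-validation.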

Getting Started with XGBoost in R

Installation and Loading the XGBoost Package

To begin, install the XGBoost package from CRAN. You can do this from the R console:

# Install XGBoost package

install.packages("xgboost")

# Load the library

library(xgboost)

Preparing the Data for XGBoost

In this tutorial, we will use the well-known Boston housing dataset available in the MASS package. It has 506 rows and 14 columns: 13 numeric predictors (such as crime rate and average number of rooms) and the target medv, the median home value in thousands of dollars. Our objective is to train the model on 80% of the data and test it on the remaining 20%.
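Before building matrices, it can help to sanity-check an 80/20 split of this kind. The sketch below uses only base R plus the MASS data. The names `train_idx`, `test_idx`, and `all_numeric` are our own for this example.

```r
data("Boston", package = "MASS")

set.seed(123)  # make the random split reproducible
n <- nrow(Boston)
train_idx <- sample(seq_len(n), floor(0.8 * n))
test_idx <- setdiff(seq_len(n), train_idx)

cat("Training rows:", length(train_idx), "\n")
cat("Test rows:    ", length(test_idx), "\n")

# The two sets must not share any rows.
stopifnot(length(intersect(train_idx, test_idx)) == 0)

# xgb.DMatrix expects numeric input, so confirm every column is numeric.
all_numeric <- all(vapply(Boston, is.numeric, logical(1)))
cat("All columns numeric:", all_numeric, "\n")
```

With 506 rows, this gives 404 training rows and 102 test rows. A dataset with factor or character columns would need encoding (for example, one-hot encoding) before conversion to a matrix.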

# Load the Boston dataset

data("Boston", package = "MASS")

# Splitting the data into training and testing sets

set.seed(123)

train_indices <- sample(seq_len(nrow(Boston)), floor(0.8 * nrow(Boston)))

train_data <- Boston[train_indices, ]

test_data <- Boston[-train_indices, ]

# Separate features and target variable

train_matrix <- as.matrix(train_data[, -14])

train_label <- train_data$medv

test_matrix <- as.matrix(test_data[, -14])

test_label <- test_data$medv

# Create DMatrix objects for training and testing datasets

dtrain <- xgb.DMatrix(data = train_matrix, label = train_label)

dtest <- xgb.DMatrix(data = test_matrix, label = test_label)

Training the XGBoost Model

With the data prepared, we can now proceed to train the XGBoost model. We’ll define essential hyperparameters and use the xgb.train() function to perform the training.

# Define model hyperparameters

params <- list(

booster = "gbtree",

objective = "reg:squarederror",

eval_metric = "rmse",

eta = 0.1,

max_depth = 6,

subsample = 0.8,

colsample_bytree = 0.8

)

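# Train the XGBoost model; the watchlist prints the training RMSE each round

# (recent xgboost releases name this argument `evals` instead of `watchlist`)

xgb_model <- xgb.train(params = params, data = dtrain, nrounds = 100, watchlist = list(train = dtrain))

Making Predictions with XGBoost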

Once we have trained the model, we can use it to make predictions on the test dataset. The predict() function provides the predicted values based on the trained model.

# Generate predictions on the test dataset

predictions <- predict(xgb_model, dtest)

# Display a few predictions

head(predictions)

Evaluating the Model’s Performance

After making predictions, it’s crucial to assess the model’s performance. We can calculate the Mean Squared Error (MSE) and Root Mean Squared Error (RMSE) to evaluate how well the model performs.
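As a self-contained illustration of these two metrics, the sketch below wraps them in a small helper and compares a model against a naive baseline that always predicts the mean. The function name `regression_metrics` and the toy vectors are assumptions for the example. With real data, the baseline would use the mean of the training labels.

```r
# Compute MSE and RMSE for a pair of numeric vectors.
regression_metrics <- function(actual, predicted) {
  stopifnot(length(actual) == length(predicted))
  err <- actual - predicted
  mse <- mean(err^2)
  c(mse = mse, rmse = sqrt(mse))
}

actual <- c(3, 5, 7)
predicted <- c(2, 5, 9)                          # toy "model" predictions
baseline <- rep(mean(actual), length(actual))    # always predict the mean

metrics <- regression_metrics(actual, predicted)
baseline_metrics <- regression_metrics(actual, baseline)

print(round(metrics, 3))           # mse 1.667, rmse 1.291
print(round(baseline_metrics, 3))  # mse 2.667, rmse 1.633
```

RMSE is in the same unit as the target, so for the Boston data it reads directly as an error in thousands of dollars. A model is only useful if its RMSE is clearly below that of the naive baseline.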

# Calculate model performance

mse <- mean((test_label - predictions)^2)

rmse <- sqrt(mse)

cat("Mean Squared Error:", mse, "\n")

cat("Root Mean Squared Error:", rmse, "\n")

Understanding Feature Importance

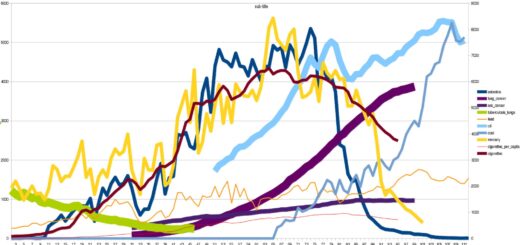

Feature importance reveals which variables contribute most to the predictions. XGBoost offers functionality to identify and visualize feature importance.
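The importance table itself is worth inspecting before plotting it. The sketch below trains a small model on the full Boston data purely for illustration (the names `dall`, `model`, and `imp` and the chosen parameters are assumptions) and prints the top rows.

```r
library(xgboost)

data("Boston", package = "MASS")
X <- as.matrix(Boston[, setdiff(names(Boston), "medv")])
dall <- xgb.DMatrix(data = X, label = Boston$medv)

model <- xgb.train(
  params = list(objective = "reg:squarederror", eta = 0.1, max_depth = 4),
  data = dall,
  nrounds = 50,
  verbose = 0
)

# One row per feature used in at least one split, sorted by Gain.
imp <- xgb.importance(model = model)
print(head(imp, 5))

# Each importance column is normalized to sum to 1 across features.
gain_total <- sum(imp$Gain)
cat("Sum of Gain:", gain_total, "\n")
```

For tree boosters the table has the columns Feature, Gain, Cover, and Frequency. Gain is each feature's share of the total loss reduction from its splits, Cover reflects how many observations those splits affect, and Frequency is the share of splits that use the feature. Gain is usually the most informative of the three.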

# Determine feature importance

importance <- xgb.importance(model = xgb_model)

# Plot feature importance for better visualization

xgb.plot.importance(importance)

Hyperparameter Tuning for Optimal Performance

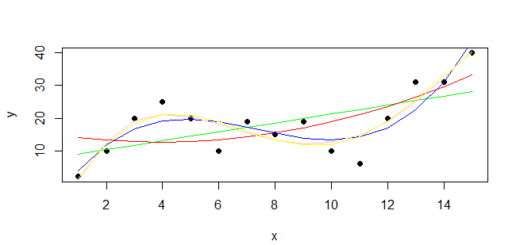

To improve the model’s performance, hyperparameter tuning is essential. Techniques like grid search or random search help identify good parameter combinations. The caret package can automate this process; xgboost’s own xgb.cv() function can also be used to score candidate settings by cross-validation.
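A grid search of this kind can be sketched with xgb.cv() alone. The example below scores every row of a deliberately small grid by 5-fold cross-validated RMSE and keeps the best one. The names `small_grid`, `best_rmse`, and `best_nrounds`, the grid values, and the use of the full Boston data are assumptions made to keep the example short and fast.

```r
library(xgboost)

data("Boston", package = "MASS")
X <- as.matrix(Boston[, setdiff(names(Boston), "medv")])
dall <- xgb.DMatrix(data = X, label = Boston$medv)

# A small illustrative grid: 2 x 2 = 4 candidate settings.
small_grid <- expand.grid(max_depth = c(3, 6), eta = c(0.1, 0.3))
small_grid$best_rmse <- NA_real_
small_grid$best_nrounds <- NA_integer_

for (i in seq_len(nrow(small_grid))) {
  set.seed(123)  # same fold assignment for every candidate
  cv <- xgb.cv(
    params = list(
      objective = "reg:squarederror",
      eval_metric = "rmse",
      max_depth = small_grid$max_depth[i],
      eta = small_grid$eta[i]
    ),
    data = dall,
    nrounds = 50,
    nfold = 5,
    verbose = FALSE
  )
  eval_log <- cv$evaluation_log
  # Record the lowest mean test RMSE and the round at which it occurred.
  small_grid$best_rmse[i] <- min(eval_log$test_rmse_mean)
  small_grid$best_nrounds[i] <- which.min(eval_log$test_rmse_mean)
}

best <- small_grid[which.min(small_grid$best_rmse), ]
print(small_grid)
print(best)
```

The winning row supplies both the parameters and a reasonable `nrounds` for a final xgb.train() call on the training set. The number of xgb.cv() runs grows multiplicatively with each added parameter, so larger grids are often replaced by random search.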

# Setting up a parameter grid for hyperparameter tuning

param_grid <- expand.grid(

max_depth = c(3, 6, 10),

eta = c(0.01, 0.1, 0.3),

subsample = c(0.7, 0.8, 0.9),

colsample_bytree = c(0.7, 0.8, 0.9)

)

# Cross-validation to gauge performance with baseline parameters

# (this single call does not iterate over param_grid)

cv_results <- xgb.cv(

params = list(objective = "reg:squarederror", eval_metric = "rmse"),

data = dtrain,

nrounds = 100,

nfold = 5,

showsd = TRUE,

stratified = TRUE

)

Conclusion

In conclusion, XGBoost is an advanced boosting algorithm for both classification and regression tasks, offering enhanced predictive power and efficiency.

This article has guided you through the process of utilizing XGBoost in R, covering data preparation, training, evaluation, and hyperparameter tuning.

By mastering XGBoost, you equip yourself with a robust tool for your data analysis and predictive modeling tasks.
