NLP Technology N gram model in NLP

Language modeling estimates the likelihood of a sequence of words. Applications of this modeling include speech recognition, spam filtering, and many more.

Natural Language Processing (NLP)

Natural language processing (NLP) lies at the intersection of artificial intelligence (AI) and linguistics. It is used to convert words or statements written in human languages into a form that computers can understand.

NLP was created to make working with and interacting with computers simple and enjoyable.

NLP is especially helpful for users who lack the time to learn machine-specific languages, since not every computer user can be well versed in them.


Language is a system of rules and symbols. Information is communicated by combining various symbols, and a set of rules governs how they are combined.

Natural Language Processing (NLP) is divided into two categories, Natural Language Understanding (NLU) and Natural Language Generation (NLG), each with its own set of tasks.

NLP Technology N gram model in NLP

Language models fall into the following categories:

Statistical language modeling: In this approach, probabilistic models are developed. Such a model predicts the next word in a sequence.

N-gram language modeling is one example. It can be used to disambiguate the input and to choose the most likely candidate. This modeling is grounded in probability theory, which is used to determine how likely something is to happen.

Neural language modeling: Neural language modeling outperforms conventional methods on difficult tasks like speech recognition and machine translation, both when used as standalone models and when incorporated into larger models.

Word embedding is one technique for accomplishing neural language modeling.

NLP N-gram modeling

In NLP modeling, an N-gram is a sequence of N words. Take the following sentence as an example: “I love reading history books and watching documentaries.” A one-gram, or unigram, is a sequence of a single word.

For the sentence above, the unigrams are “I,” “love,” “reading,” “history,” “books,” “and,” “watching,” and “documentaries.”

A two-word sequence such as “I love”, “love reading”, or “history books” is a two-gram or bi-gram.

A three-word sequence such as “I love reading,” “history books and,” or “and watching documentaries” is a three-gram or tri-gram.

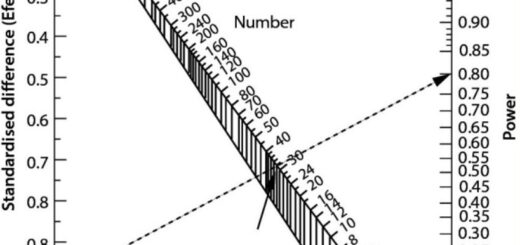
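As a minimal sketch of how unigrams, bi-grams, and tri-grams can be extracted, the snippet below slides a window of N words over a sample sentence. The function name `ngrams` and the whitespace tokenization are assumptions made for illustration; a real pipeline would normally use a proper tokenizer.

```python
def ngrams(tokens, n):
    """Return every contiguous sequence of n tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

# Naive whitespace tokenization, used here only to keep the sketch short.
sentence = "I love reading history books and watching documentaries"
tokens = sentence.split()

unigrams = ngrams(tokens, 1)
bigrams = ngrams(tokens, 2)
trigrams = ngrams(tokens, 3)

print(bigrams[:3])   # first three bi-grams
print(trigrams[:2])  # first two tri-grams
```

A sentence of 8 words yields 8 unigrams, 7 bi-grams, and 6 tri-grams, because each window of N words can start at only 8 - N + 1 positions.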

An N-gram model predicts the most likely word to follow a sequence of N-1 words. It is a probabilistic language model that is trained on a large body of text.

Applications like speech recognition and machine translation can benefit from this concept. A straightforward model has various shortcomings, which can be addressed via smoothing, interpolation, and backoff.
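To illustrate one of these fixes, the sketch below applies add-one (Laplace) smoothing to bi-gram counts, so that a word pair never seen in training still receives a small non-zero probability. The toy corpus and the function names `train_counts` and `laplace_prob` are assumptions for illustration, and add-one is only one of several smoothing schemes.

```python
from collections import Counter

def train_counts(sentences):
    """Count bi-grams and how often each word appears as a bi-gram context."""
    context_counts = Counter()
    bigram_counts = Counter()
    vocab = set()
    for sentence in sentences:
        tokens = sentence.lower().split()
        vocab.update(tokens)
        context_counts.update(tokens[:-1])             # words that have a successor
        bigram_counts.update(zip(tokens, tokens[1:]))  # (previous word, word) pairs
    return context_counts, bigram_counts, vocab

def laplace_prob(prev, word, context_counts, bigram_counts, vocab):
    """Add-one estimate of P(word | prev)."""
    return (bigram_counts[(prev, word)] + 1) / (context_counts[prev] + len(vocab))

# Hypothetical toy corpus; "heavy flood" never occurs as a bi-gram in it.
corpus = ["there was heavy rain", "heavy rain fell today", "the flood was heavy"]
ctx, bi, vocab = train_counts(corpus)

p_rain = laplace_prob("heavy", "rain", ctx, bi, vocab)
p_flood = laplace_prob("heavy", "flood", ctx, bi, vocab)
print(p_rain, p_flood)
```

Adding one to every count takes a little probability away from observed pairs and spreads it over unseen ones, which is why the unseen pair ends up with a small but non-zero estimate.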

Finding probability distributions across word sequences is the goal of the N-gram language model. Take the phrases “There was heavy rain” and “There was heavy flood” as examples.

From experience, one can tell that the first phrase sounds more natural. In the N-gram language model's terms, “heavy rain” occurs more frequently than “heavy flood.”


As a result, the model will choose the first phrase because it has a higher probability. A one-gram model relies only on how frequently each word appears, without taking the preceding words into account.

In a 2-gram model, the current word is predicted using only the previous word. In a 3-gram model, the two previous words are taken into account. The N-gram language model computes the following probabilities:

P(“There was heavy rain”) = P(“There”, “was”, “heavy”, “rain”) = P(“There”) P(“was” | “There”) P(“heavy” | “There was”) P(“rain” | “There was heavy”).

Because estimating these full conditional probabilities is not practical, the bi-gram model approximates them using the “Markov assumption”, under which each word depends only on the word immediately before it.

P(“There was heavy rain”) ≈ P(“There”) P(“was” | “There”) P(“heavy” | “was”) P(“rain” | “heavy”)
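The bi-gram approximation can be sketched in a few lines by estimating each conditional probability as a ratio of counts. The toy corpus, the `<s>` start marker (which stands in for the P(“There”) term by conditioning the first word on the start of the sentence), and the function names are assumptions for illustration, not part of the original formulation.

```python
from collections import Counter

# Hypothetical toy corpus; a real model is trained on a large body of text.
corpus = [
    "there was heavy rain",
    "there was heavy rain yesterday",
    "there was heavy traffic",
    "there was a flood",
]

START = "<s>"  # assumed sentence-start marker
context_counts = Counter()
bigram_counts = Counter()
for line in corpus:
    tokens = [START] + line.split()
    context_counts.update(tokens[:-1])
    bigram_counts.update(zip(tokens, tokens[1:]))

def bigram_prob(prev, word):
    """Maximum-likelihood estimate of P(word | prev)."""
    if context_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, word)] / context_counts[prev]

def sentence_prob(sentence):
    """Multiply the bi-gram probabilities along the sentence."""
    tokens = [START] + sentence.lower().split()
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        prob *= bigram_prob(prev, word)
    return prob

print(sentence_prob("There was heavy rain"))
print(sentence_prob("There was heavy flood"))
```

On this corpus the first phrase scores higher than the second. The second scores exactly zero because “heavy flood” never appears as a pair, which shows why unsmoothed counts are a problem for unseen word pairs.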

N-gram Model in NLP applications

The input for voice recognition can be noisy. This noise may result in an incorrect speech-to-text conversion.

The N-gram language model uses word-sequence probabilities to compensate for this noise. The model is also used in machine translation to produce more grammatically correct sentences in the target language.

A dictionary alone can be ineffective at correcting spelling mistakes. For instance, the word “minuets” in the phrase “in about fifteen minuets” is wrong in context even though it is listed in the dictionary as a valid word. This kind of mistake can be corrected using the N-gram language model.
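A minimal sketch of this idea: when two candidate words are both valid dictionary entries, bi-gram counts from a corpus can indicate which one fits the preceding word. The toy corpus, the candidate pair “minuets”/“minutes”, and the function name `best_candidate` are assumptions for illustration.

```python
from collections import Counter

# Hypothetical toy corpus in which both candidate words occur.
corpus = [
    "i will be there in about fifteen minutes",
    "the meeting lasted fifteen minutes",
    "wait fifteen minutes before serving",
    "the orchestra played two minuets",
]

bigram_counts = Counter()
for line in corpus:
    tokens = line.split()
    bigram_counts.update(zip(tokens, tokens[1:]))

def best_candidate(prev_word, candidates):
    """Pick the candidate seen most often right after prev_word."""
    return max(candidates, key=lambda w: bigram_counts[(prev_word, w)])

choice = best_candidate("fifteen", ["minuets", "minutes"])
print(choice)
```

A dictionary lookup accepts both candidates, so only the context separates them. A practical corrector would also generate the candidates itself and use smoothed probabilities rather than raw counts.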

Typically, the N-gram language model operates at the word level. It is also employed at the character level to perform stemming, which is the process of separating the root of a word from its suffix.

N-gram models can also be used to identify the language of a text or to distinguish between US and UK spellings. The N-gram model is useful for a variety of other tasks, such as sentiment analysis, word similarity detection, natural language generation, and speech segmentation.
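As a small illustration of character-level N-grams, the sketch below compares the character tri-grams of a US and a UK spelling. The `<` and `>` word-boundary markers and the function name `char_ngrams` are assumptions made for this example.

```python
def char_ngrams(word, n):
    """Return the character n-grams of a word, padded with boundary markers."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]

us = set(char_ngrams("color", 3))
uk = set(char_ngrams("colour", 3))

print(sorted(us - uk))  # tri-grams found only in the US spelling
print(sorted(uk - us))  # tri-grams found only in the UK spelling
```

Tri-grams that appear in only one spelling, counted over a whole text, are the kind of feature a classifier could use to tell the two variants apart.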


N-gram Model Limitations in NLP

The N-gram language model has certain drawbacks as well. Out-of-vocabulary words are one concern: these are words that do not appear in the training data but do occur at test time.

One solution is to fix the vocabulary in advance and convert any training words outside it to a pseudoword. In sentiment analysis, the bi-gram model performed better than the uni-gram model; however, the number of features doubled.
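The pseudoword approach can be sketched as follows: words that are rare in the training data are left out of the vocabulary, and every word outside it is mapped to a single placeholder token. The `<UNK>` token name, the `min_count` threshold, and the toy data are assumptions for illustration.

```python
from collections import Counter

UNK = "<UNK>"  # assumed name for the pseudoword

def build_vocab(sentences, min_count=2):
    """Keep only words seen at least min_count times in training."""
    counts = Counter(tok for s in sentences for tok in s.split())
    return {tok for tok, c in counts.items() if c >= min_count}

def replace_oov(sentence, vocab):
    """Map every word outside the vocabulary to the pseudoword."""
    return [tok if tok in vocab else UNK for tok in sentence.split()]

train = ["there was heavy rain", "there was heavy traffic", "there was a flood"]
vocab = build_vocab(train)

train_tokens = [replace_oov(s, vocab) for s in train]
test_tokens = replace_oov("there was heavy snow", vocab)
print(test_tokens)
```

Because the pseudoword now appears in the training data, the model learns counts for it, and an unseen test word such as “snow” can be scored through those counts instead of receiving no estimate at all.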

Better feature selection techniques are therefore required for scaling the N-gram model to bigger data sets or transitioning to higher-order models.

The N-gram model represents long-distance context poorly. It has been demonstrated that performance gains are limited beyond 6-grams.