Logistic Regression Machine Learning: Explanation

So now we’re going to get into a little more maths.

For shorthand, let’s write p(X) for the probability that Y is 1 given X. We’re going to consider our simple model for predicting default (yes or no) using balance as the only predictor.

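So with a single variable, here’s the form of logistic regression:

$$p(X) = \frac{e^{\beta_0 + \beta_1 X}}{1 + e^{\beta_0 + \beta_1 X}}$$

Here e is the mathematical constant (about 2.718), the base of the natural logarithm, and we raise e to the power of a linear model.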

We’ve got beta 0, which is the intercept, and beta 1, which is the coefficient of X. The same term, e to the power beta 0 plus beta 1 X, appears in both the numerator and the denominator, but in the denominator it has 1 added to it.

So it’s a somewhat complicated expression, but you can see straight away that its values have to lie between 0 and 1.

The numerator is positive, because e raised to any power is positive, and the denominator is that same positive number plus 1, so the ratio is always greater than 0. And because the denominator is always bigger than the numerator, the ratio is always less than 1.

When beta 0 plus beta 1 X gets very large, this approaches 1, and when it gets very large and negative, it approaches 0. So this is a special construct: a transformation of a linear model that guarantees that what we get out is a probability.
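Here is a minimal Python sketch of the transformation just described. `logistic` is a hypothetical helper name, not something from the lecture. For non-negative z it uses the algebraically equivalent form 1 / (1 + e^(−z)), which avoids overflow in `math.exp` when z is large.

```python
import math

def logistic(z: float) -> float:
    """Map a linear predictor z = beta0 + beta1 * x to a probability in (0, 1).

    Equivalent to e^z / (1 + e^z); the branch avoids overflow for large |z|.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Very negative z gives values near 0, very positive z gives values near 1.
for z in (-20.0, -2.0, 0.0, 2.0, 20.0):
    print(f"z = {z:6.1f}  ->  p = {logistic(z):.6f}")
```

The output creeps toward 0 on one side and toward 1 on the other. In exact arithmetic it never reaches either bound.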

This is called the logistic regression model, and the name comes from this transformation, which is known as the logistic function. It is a monotone transformation, so it can be inverted.

Taking the log of p(X) over 1 minus p(X) gives us back our linear model:

$$\log\left(\frac{p(X)}{1 - p(X)}\right) = \beta_0 + \beta_1 X$$

This transformation of the probability is called the log odds or logit transformation.
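The rearrangement from the probability to the log odds can be worked through in a few lines. Start from the logistic form of p(X), with the same beta 0, beta 1 and X as in this section:

```latex
\begin{aligned}
p(X) &= \frac{e^{\beta_0 + \beta_1 X}}{1 + e^{\beta_0 + \beta_1 X}}, \\
1 - p(X) &= \frac{1}{1 + e^{\beta_0 + \beta_1 X}}, \\
\frac{p(X)}{1 - p(X)} &= e^{\beta_0 + \beta_1 X}, \\
\log\left(\frac{p(X)}{1 - p(X)}\right) &= \beta_0 + \beta_1 X.
\end{aligned}
```

The second line follows because the two fractions share a denominator. Dividing the first line by the second cancels it, and taking logs undoes the exponential.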

This is the form of the model we’ll work with from now on. To summarise, we still have a linear model, but it is now a model for the log odds.

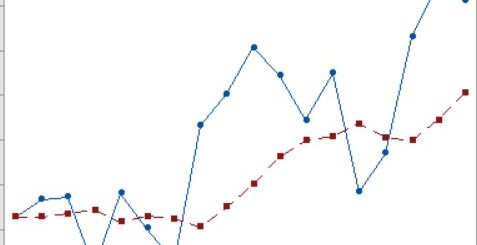

However, the probabilities themselves are modeled on a non-linear scale. So we’re back to the picture we started with.

The fitted curve in the right-hand panel was produced by a logistic regression model, which is why the probabilities there lie between 0 and 1.
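The contrast between a linear model for the log odds and a non-linear curve for the probability can be checked numerically. The Python sketch below uses hypothetical coefficients (beta0 = −3, beta1 = 1.5), chosen only for illustration and not estimated from any data. Equal one-unit steps in x move the log odds by exactly beta1 each time, but they move the probability by different amounts.

```python
import math

def logistic(z: float) -> float:
    # Stable evaluation of e^z / (1 + e^z).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def log_odds(p: float) -> float:
    # The logit transformation: log(p / (1 - p)).
    return math.log(p / (1.0 - p))

# Hypothetical coefficients, for illustration only.
beta0, beta1 = -3.0, 1.5

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
probs = [logistic(beta0 + beta1 * x) for x in xs]

# Equal steps in x give equal steps (beta1) on the log-odds scale...
logit_steps = [log_odds(b) - log_odds(a) for a, b in zip(probs, probs[1:])]
# ...but unequal steps on the probability scale.
prob_steps = [b - a for a, b in zip(probs, probs[1:])]

print([round(s, 6) for s in logit_steps])
print([round(s, 3) for s in prob_steps])
```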

So now that we’ve written down the model, how can we estimate it from data?

We use maximum likelihood. Here is how it works: you have a series of observed 0s and 1s, and you have a model for their probabilities.

That model has parameters, beta 0 and beta 1 in this case, so for any values of the parameters we can write down the probability of the observed data.

This probability of the observed string of 0s and 1s, viewed as a function of the parameters, is called the likelihood. Because each observation is assumed to be independent of the others, it is a product of the individual probabilities.

Whenever we see a 1, we write down the probability of a 1, which is p(X): if observation i has y_i = 1, its contribution is p(x_i).

Whenever we see a 0, we write down the probability of a 0, which is 1 minus the probability of a 1, that is, 1 − p(x_i). Because the observations are independent, we simply multiply all these probabilities together:

$$\ell(\beta_0, \beta_1) = \prod_{i:\, y_i = 1} p(x_i) \prod_{i:\, y_i = 0} \bigl(1 - p(x_i)\bigr)$$

So this is the joint probability of the observed sequence of 0s and 1s, and of course it depends on the parameters through p(x_i).
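That product can be transcribed directly into Python. `likelihood` and `log_likelihood` are hypothetical helper names, and the eight (x, y) pairs are made-up toy data, not the Default data set. In practice one usually works with the log of the likelihood. The log turns the product into a sum and avoids numerical underflow with many observations, and because the log is increasing it has the same maximiser.

```python
import math

def logistic(z: float) -> float:
    # Stable evaluation of e^z / (1 + e^z).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def likelihood(beta0, beta1, xs, ys):
    """Product over observations of p(x_i) if y_i == 1, else 1 - p(x_i)."""
    prob = 1.0
    for x, y in zip(xs, ys):
        p = logistic(beta0 + beta1 * x)
        prob *= p if y == 1 else 1.0 - p
    return prob

def log_likelihood(beta0, beta1, xs, ys):
    """Sum of the logs of the same factors: same maximiser, no underflow."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = logistic(beta0 + beta1 * x)
        total += math.log(p) if y == 1 else math.log(1.0 - p)
    return total

# Hypothetical toy data (not the Default data set).
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [0, 0, 1, 0, 0, 1, 1, 1]

for b0, b1 in [(0.0, 0.0), (-3.0, 1.2)]:
    print(b0, b1, likelihood(b0, b1, xs, ys), log_likelihood(b0, b1, xs, ys))
```

The second parameter pair gives a larger likelihood than beta 0 = beta 1 = 0. In other words, it makes the observed 0s and 1s more probable.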

The idea behind maximum likelihood is to choose the parameters so that this probability is as large as possible.

After all, that is the sequence of 0s and 1s you actually observed. That’s the concept. It’s easy to say, but not necessarily easy to do.

Luckily, we have programs that can do this. For example, in R, the glm function (with family = binomial) will fit this model in a snap of the fingers and estimate the parameters.
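To give a feel for what such a program does, here is a bare-bones maximum-likelihood fit in Python using Newton-Raphson steps on the log-likelihood. This is a sketch, not R's implementation. As far as we know, glm fits by iteratively reweighted least squares, which for logistic regression amounts to the same Newton steps, but it adds safeguards this toy version lacks. The data are hypothetical. The loop also assumes the two classes overlap, because with perfectly separated data the estimates diverge.

```python
import math

def logistic(z: float) -> float:
    # Stable evaluation of e^z / (1 + e^z).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def fit_logistic(xs, ys, iters=25, tol=1e-10):
    """Maximum-likelihood (beta0, beta1) for a one-predictor logistic model via Newton-Raphson."""
    b0, b1 = 0.0, 0.0
    for _ in range(iters):
        # Gradient of the log-likelihood (g) and the 2x2 information matrix (h).
        g0 = g1 = 0.0
        h00 = h01 = h11 = 0.0
        for x, y in zip(xs, ys):
            p = logistic(b0 + b1 * x)
            w = p * (1.0 - p)
            g0 += y - p
            g1 += (y - p) * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        # Newton step: solve h @ step = g for the 2x2 system by hand.
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < tol:
            break
    return b0, b1

# Hypothetical toy data with overlapping classes.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
ys = [0, 0, 1, 0, 0, 1, 1, 1]

b0_hat, b1_hat = fit_logistic(xs, ys)
print(f"beta0_hat = {b0_hat:.4f}, beta1_hat = {b1_hat:.4f}")
```

At the maximum, the gradient of the log-likelihood is zero, which gives a convenient check on the result.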

In this case, this is what it produced. The coefficient estimates were about minus 10.65 for the intercept and 0.0055 for the slope on balance; these are beta 0 hat and beta 1 hat.

So there are the coefficient estimates. It also gives you standard errors for each of the coefficient estimates.

It computes a Z-statistic, and it also gives you P-values.

You might be surprised by how tiny the slope is here, given that it seemed to produce such a large change in the probabilities.

Take a look at the units of balance: they are in US dollars, and balances run into the thousands, such as $2,000 or $2,500. The coefficient multiplies that balance variable, so its value reflects those units.

So the slope is 0.0055 per dollar on the log-odds scale, which is 5.5 per thousand dollars. So when it comes to slopes, you must consider the units.
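A small arithmetic check of the units point, in Python. The slope 0.0055 is the value quoted from the glm output. Measuring balance in thousands of dollars instead of dollars (a hypothetical rescaling) multiplies the slope by 1,000 but leaves its contribution to the log odds unchanged.

```python
# Slope on balance as quoted from the glm output (per US dollar).
beta1_per_dollar = 0.0055
# Same slope if balance were measured in thousands of dollars instead.
beta1_per_thousand = beta1_per_dollar * 1000  # 5.5 per $1,000

balance = 2000.0
# The slope's contribution to the log odds is the same either way.
contrib_dollars = beta1_per_dollar * balance
contrib_thousands = beta1_per_thousand * (balance / 1000)
print(beta1_per_thousand, contrib_dollars, contrib_thousands)
```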

The Z-statistic, which is a kind of standardized slope (the estimate divided by its standard error), does take the units into account. And if we look at the P-value, it is very small: if the true balance slope were 0, a Z-statistic as large as this one would be very unlikely.
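Here is a sketch of how such a Z-statistic and P-value can be computed: a Wald test of a coefficient against 0, using a standard normal reference distribution. `z_test` is a hypothetical helper. The standard error 0.0002 in the usage lines is a made-up illustrative value, not one reported in the text.

```python
import math

def z_test(estimate: float, std_error: float) -> tuple[float, float]:
    """Wald Z-statistic and two-sided P-value for H0: coefficient = 0."""
    z = estimate / std_error
    # Two-sided tail of a standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value

# Hypothetical standard error, for illustration only.
z_dollar, p_dollar = z_test(0.0055, 0.0002)
# Rescaling balance to thousands of dollars scales estimate and SE alike.
z_thousand, p_thousand = z_test(5.5, 0.2)
print(z_dollar, z_thousand, p_dollar)
```

The second call rescales both the estimate and its standard error by 1,000, which is what happens when balance is measured in thousands of dollars. The Z-statistic does not change, which is why it serves as a unit-free measure.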

The P-value is less than 0.001. So both the intercept and the slope are strongly significant in this case. How should we interpret the P-value for the intercept?

The intercept largely has to do with the preponderance of 0s versus 1s in the data set.

So that’s of less importance; it’s just inherent in the data set. It’s the slope that’s really important.
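One way to see why the intercept mostly reflects the balance of 0s and 1s is to drop the predictor entirely. In that simplest logistic model, the maximum-likelihood intercept is exactly the log odds of the observed proportion of 1s. This follows because setting the derivative of the log-likelihood, the sum of (y_i − p), to zero forces p to equal that proportion. With a predictor in the model, the intercept also depends on where X is measured from, so this gives only the intuition. The outcomes below are hypothetical (3 defaults in 100), not the Default data.

```python
import math

def logistic(z: float) -> float:
    # Stable evaluation of e^z / (1 + e^z).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Hypothetical outcomes: 3 defaults out of 100.
ys = [1] * 3 + [0] * 97
ybar = sum(ys) / len(ys)

# Intercept-only model: the MLE is the log odds of the observed proportion of 1s.
beta0_hat = math.log(ybar / (1.0 - ybar))
print(round(beta0_hat, 3), logistic(beta0_hat))
```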

What do we do with this model?

We can predict probabilities. And so let’s suppose we take a person with a balance of $1,000.

Well, we can estimate their probability: we just plug $1,000 into the formula.

And notice that I’ve put hats on beta 0 and beta 1 to indicate that they are now estimated from the data, and a hat on the probability too:

$$\hat{p}(X) = \frac{e^{\hat\beta_0 + \hat\beta_1 \times 1000}}{1 + e^{\hat\beta_0 + \hat\beta_1 \times 1000}}$$

Plugging in the numbers and using a calculator or computer, we get 0.006. So somebody with a balance of $1,000 has a probability of 0.006 of defaulting.

In other words, pretty small. What if they’ve got a credit card balance of $2,000? That means they owe $2,000 rather than $1,000.

Well, if we go through the same procedure, the probability jumps up to 0.586. So it’s much higher. And you can imagine that if we put in $3,000, we’d get a higher probability still.
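The same plug-in calculation can be written as a Python sketch. The slope 0.0055 is the quoted estimate, but the intercept is an assumption. We back it out from the two probabilities quoted in this section, which gives roughly −10.65. An intercept of exactly −10 would give about 0.011 at $1,000, not 0.006. `predict_default` is a hypothetical helper name; in R one would typically call `predict` on the fitted glm object with `type = "response"`.

```python
import math

def logistic(z: float) -> float:
    """Stable evaluation of e^z / (1 + e^z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Assumed estimates: slope as quoted; intercept backed out from the
# quoted probabilities (approximately -10.65), not read off the output.
BETA0_HAT = -10.652
BETA1_HAT = 0.0055

def predict_default(balance: float) -> float:
    """Estimated probability of default, p-hat(X), for a balance in US dollars."""
    return logistic(BETA0_HAT + BETA1_HAT * balance)

for balance in (1000, 2000, 3000):
    print(f"balance ${balance:,}: estimated P(default) = {predict_default(balance):.3f}")
```

With these assumed values the sketch reproduces 0.006 and 0.586 to three decimals. It also shows the $3,000 case climbing higher still.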
