Boosting in Machine Learning: A Complete Guide

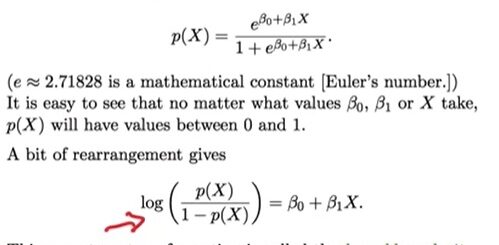

The majority of supervised machine learning algorithms rely on a single predictive model, such as linear regression, logistic regression, or ridge regression.

Bagging and random forests, on the other hand, build many models, each fit to a different bootstrap sample of the original dataset.


To make predictions on new data, these methods average the predictions of the individual models.

These methods tend to be more accurate than methods that use a single predictive model because they follow this approach:

First, build separate models with high variance and low bias (e.g., deeply grown decision trees).

Then, average the predictions of the individual models to reduce the variance.
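The two steps above can be sketched in plain Python. This is a minimal illustration, not a library implementation: as an assumption for brevity, a one-feature 1-nearest-neighbour regressor stands in for the deeply grown decision tree (like a deep tree, it has low bias and high variance), and the function names and the default of 50 bootstrap models are hypothetical choices.

```python
import random
import statistics


def fit_1nn(xs, ys):
    """Fit a 1-nearest-neighbour regressor on one feature.

    Hypothetical stand-in for a deeply grown decision tree:
    low bias, high variance.
    """
    pairs = list(zip(xs, ys))

    def predict(x):
        # Return the response of the closest training point.
        return min(pairs, key=lambda p: abs(p[0] - x))[1]

    return predict


def bagged_predict(xs, ys, x_new, n_models=50, seed=0):
    """Average the predictions of n_models fit to bootstrap samples."""
    rng = random.Random(seed)
    n = len(xs)
    predictions = []
    for _ in range(n_models):
        # Step 1: a bootstrap sample draws n rows with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        model = fit_1nn([xs[i] for i in idx], [ys[i] for i in idx])
        predictions.append(model(x_new))
    # Step 2: averaging the individual predictions reduces the variance.
    return statistics.fmean(predictions)


if __name__ == "__main__":
    rng = random.Random(42)
    xs = [i / 10 for i in range(50)]
    ys = [2 * x + rng.gauss(0, 1) for x in xs]
    print(bagged_predict(xs, ys, 2.5))
```

A single 1-nearest-neighbour model simply copies one noisy training point, while the bagged prediction blends the nearby points that appear in different bootstrap samples.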

Boosting is another ensemble strategy that can often improve predictive accuracy even further.


What exactly is boosting?

Boosting is a technique that can be applied to many types of models, but it is most commonly used with decision trees.

Boosting is based on a basic concept:

1. Create a weak model first.

A “weak” model is one whose error rate is only slightly better than random guessing. In practice, this is usually a decision tree with just one or two splits.

2. Next, using the residuals from the previous model, create a new weak model.

In practice, we fit the new model to the residuals of the previous model (i.e., the errors in our predictions), so that adding it slightly reduces the overall error rate.

3. Repeat until k-fold cross-validation tells us to stop.

In practice, we use k-fold cross-validation to decide when to stop adding new models to the boosted model.
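The three steps above can be sketched in plain Python for a regression problem with one feature. This is an illustrative sketch under our own assumptions, not any library's implementation: the weak model is a one-split regression tree (a “stump”), each new stump is fit to the current residuals, and k-fold cross-validation picks the number of rounds. The helper names (`fit_stump`, `boost`, `choose_rounds_by_cv`) are hypothetical, and the learning rate of 0.1, which shrinks each stump's contribution, is an assumption the text above does not mention.

```python
import random


def fit_stump(xs, residuals):
    """Fit a one-split regression tree (a weak model) by least squares."""
    best = None
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        if not right:  # the largest x leaves nothing on the right
            continue
        l_mean = sum(left) / len(left)
        r_mean = sum(right) / len(right)
        sse = (sum((r - l_mean) ** 2 for r in left)
               + sum((r - r_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, l_mean, r_mean)
    if best is None:  # all x values identical: fall back to a constant
        mean = sum(residuals) / len(residuals)
        return lambda x: mean
    _, t, l_mean, r_mean = best
    return lambda x: l_mean if x <= t else r_mean


def boost(xs, ys, n_rounds, learning_rate=0.1):
    """Steps 1-2: add stumps one at a time, each fit to the current residuals."""
    base = sum(ys) / len(ys)  # start from the mean response
    stumps = []
    preds = [base] * len(xs)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for x, p in zip(xs, preds)]

    def predict(x, n_used=None):
        # Use only the first n_used stumps when n_used is given.
        used = stumps if n_used is None else stumps[:n_used]
        return base + learning_rate * sum(s(x) for s in used)

    return predict


def choose_rounds_by_cv(xs, ys, max_rounds=100, k=5, learning_rate=0.1, seed=0):
    """Step 3: pick the number of rounds with the lowest k-fold CV error."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    cv_sse = [0.0] * (max_rounds + 1)  # cv_sse[m]: held-out error using m stumps
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        model = boost([xs[i] for i in train], [ys[i] for i in train],
                      max_rounds, learning_rate)
        for m in range(max_rounds + 1):
            cv_sse[m] += sum((ys[i] - model(xs[i], m)) ** 2 for i in fold)
    return min(range(max_rounds + 1), key=lambda m: cv_sse[m])


if __name__ == "__main__":
    rng = random.Random(1)
    xs = [i / 10 for i in range(60)]
    ys = [x ** 2 + rng.gauss(0, 1) for x in xs]
    best_m = choose_rounds_by_cv(xs, ys)
    final_model = boost(xs, ys, best_m)
    print(best_m, final_model(3.0))
```

Each stump only shifts the predictions a little, so the training error keeps falling as rounds are added. The cross-validated error, by contrast, eventually stops improving, and that is the point at which step 3 stops.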


Boosting in Machine Learning

Using this strategy, we start with a weak model and keep “boosting” its performance by sequentially adding new trees, each improving on the performance of the trees before it, until we reach a final model with high predictive accuracy.
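In symbols, this strategy builds a stage-wise additive model. As a sketch for regression with squared-error loss, we introduce some notation here: $F_m$ is the ensemble after $m$ trees, $h_m$ is the $m$-th weak tree, $\bar{y}$ is the mean response, and $0 < \nu \le 1$ is a learning rate. Many implementations use such a rate to shrink each tree's contribution, although the text above does not mention it.

$$
F_0(x) = \bar{y}, \qquad F_m(x) = F_{m-1}(x) + \nu\, h_m(x), \qquad m = 1, \dots, M,
$$

Here each $h_m$ is fit to the current residuals $y_i - F_{m-1}(x_i)$, and the number of rounds $M$ is chosen by k-fold cross-validation.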

What Makes Boosting Effective?

Boosting turns out to produce some of the most powerful models in machine learning.

Boosted models are a go-to choice in production in many industries because they frequently outperform other models.

The key to understanding why boosted models work so well is a simple idea:


1. Boosted models begin with a weak decision tree that has poor predictive accuracy. Such a tree has high bias and low variance.

2. As boosted models sequentially improve on the previous trees, the overall model gradually reduces its bias at each step without greatly increasing its variance.

3. The final fitted model strikes a good balance between bias and variance, so it can achieve a low test error rate on new data.


The Benefits and Drawbacks of Boosting

The main advantage of boosting is that it can build models whose predictive accuracy is excellent compared with almost every other kind of model.

A potential disadvantage is that a fitted boosted model can be difficult to interpret. It may predict the response values of new data very well, but it is hard to explain how it arrives at those predictions.

In practice, most data scientists and machine learning practitioners build boosted models because they want reliable predictions of the response values of new data. As a result, the difficulty of interpreting boosted models is rarely an issue for them.

Many different boosting algorithms are used in practice, including:


AdaBoost, XGBoost, CatBoost, LightGBM

AdaBoost

AdaBoost was the first truly successful boosting algorithm, and it was developed specifically for binary classification.

Adaptive Boosting, or AdaBoost, is a prominent boosting method that combines many “weak classifiers” into a single “strong classifier.” It was proposed by Yoav Freund and Robert Schapire, who received the 2003 Gödel Prize for this work.
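A compact sketch of the classic (discrete) AdaBoost procedure follows. It uses one numeric feature and labels coded as -1/+1, and it is illustrative only: the decision-stump weak learner, the helper names (`fit_weighted_stump`, `adaboost`), and the number of rounds are our assumptions. Each round fits the stump with the lowest weighted error `err`, gives it a vote `alpha = 0.5 * ln((1 - err) / err)`, and then increases the weights of the observations it misclassified so that the next stump focuses on them.

```python
import math


def fit_weighted_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            # The stump predicts `polarity` when x > t and `-polarity` otherwise.
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if (polarity if x > t else -polarity) != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best


def adaboost(xs, ys, n_rounds=10):
    """Discrete AdaBoost for labels in {-1, +1}; returns a predict function."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []  # list of (alpha, threshold, polarity)
    for _ in range(n_rounds):
        err, t, polarity = fit_weighted_stump(xs, ys, weights)
        if err >= 0.5:  # no better than random guessing: stop
            break
        err = max(err, 1e-10)  # avoid division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, t, polarity))
        # Up-weight misclassified points, down-weight correct ones.
        weights = [w * math.exp(-alpha * y * (polarity if x > t else -polarity))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]

    def predict(x):
        # Weighted vote of all stumps in the ensemble.
        score = sum(a * (p if x > th else -p) for a, th, p in ensemble)
        return 1 if score >= 0 else -1

    return predict


if __name__ == "__main__":
    xs = [1, 2, 3, 4, 5, 6]
    ys = [1, 1, -1, -1, 1, 1]
    model = adaboost(xs, ys, n_rounds=3)
    print([model(x) for x in xs])
```

On the toy labels `[1, 1, -1, -1, 1, 1]`, no single stump can classify all six points. With `n_rounds=3`, however, the weighted vote of three stumps classifies every point correctly.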

Gradient Boosting

Gradient Boosting, like AdaBoost, works by sequentially adding predictors to an ensemble, with each new predictor correcting the errors of its predecessor.

However, instead of adjusting the weights of the misclassified observations at each iteration as AdaBoost does, Gradient Boosting fits each new predictor to the residual errors made by the previous predictor.
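For squared-error loss, these residuals are exactly the negative gradient of the loss, which is where Gradient Boosting gets its name. The short derivation below assumes the loss $L(y, F) = \tfrac{1}{2}(y - F)^2$ (the factor $\tfrac{1}{2}$ is only a convenience) and writes $F_{m-1}$ for the ensemble built so far:

$$
-\left.\frac{\partial L(y_i, F)}{\partial F}\right|_{F = F_{m-1}(x_i)} = -\bigl(-(y_i - F_{m-1}(x_i))\bigr) = y_i - F_{m-1}(x_i).
$$

With other loss functions, the new predictor is still fit to the negative gradient, but that gradient is then no longer the plain residual.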


XGBoost

XGBoost is an optimized implementation of gradient boosting. Its main performance features include:

1. Parallelization: during training, XGBoost parallelizes tree construction across all of your CPU cores.

2. Distributed computing: very large models can be trained on a cluster of machines.

3. Out-of-core computing: datasets too large to fit in memory can still be used for training.

4. Cache optimization: data structures and algorithms are designed to make the best use of the hardware.

Depending on the size of your dataset and the computing power of your system, one of these algorithms may be a better fit than the others.
