How to Calculate White’s Test in R

White’s test is a statistical test that determines whether the variance of the errors in a regression model is constant, a property known as homoscedasticity.

Halbert White proposed this test, as well as an estimator for heteroscedasticity-consistent standard errors, in 1980.

White’s test is used to determine whether or not a regression model contains heteroscedasticity.


Heteroscedasticity in a regression model refers to the unequal scatter of residuals at different levels of a response variable. This violates one of the major assumptions of linear regression: that the residuals have constant variance (the same scatter) at every level of the response variable.
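The sketch below shows what unequal scatter looks like. It uses simulated, made-up data in which the error standard deviation grows with x. It fits a straight line and compares the residual spread for small and large values of x. The seed, sample size and coefficients are arbitrary assumptions chosen only for illustration.

```r
# Simulated data (not from this tutorial): the error sd is proportional to x,
# so the residuals fan out as x grows, which is heteroscedasticity.
set.seed(42)
n <- 500
x <- runif(n, min = 1, max = 10)
y <- 2 + 3 * x + rnorm(n, mean = 0, sd = x)

fit <- lm(y ~ x)
res <- residuals(fit)

# Compare the residual spread below and above the median of x
low     <- x < median(x)
sd_low  <- sd(res[low])
sd_high <- sd(res[!low])
c(sd_low = sd_low, sd_high = sd_high)
```

Under homoscedasticity the two standard deviations would be similar. Here the spread for large x is clearly larger.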

The White test can be used to assess heteroscedasticity, specification error, or both.

If the White test includes no cross-product terms, it is a test of pure heteroscedasticity.

If cross-product terms are included, it tests for both heteroscedasticity and specification bias.
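The sketch below makes the difference between the two variants concrete. It is a minimal base-R version of White's test. It regresses the squared residuals on the regressors and their squares, adds pairwise cross-products when `cross = TRUE`, and reports n·R² with a chi-squared p-value. n·R² should correspond to the studentized statistic that `bptest()` computes by default. `white_test()` is a hypothetical helper written for this illustration and is not part of lmtest or base R. It assumes numeric main-effect regressors only (no factors or interaction terms in the model formula) and no missing values.

```r
# Hypothetical helper for illustration: White's test computed by hand.
# Assumes a model with numeric main-effect regressors and no missing values.
white_test <- function(model, data, cross = TRUE) {
  aux_data <- data
  aux_data$e2 <- residuals(model)^2                 # squared OLS residuals
  x <- attr(terms(model), "term.labels")            # original regressors
  rhs <- c(x, sprintf("I(%s^2)", x))                # regressors + squares
  if (cross && length(x) > 1) {
    # pairwise cross-products such as "disp:hp"
    rhs <- c(rhs, combn(x, 2, FUN = paste, collapse = ":"))
  }
  aux <- lm(reformulate(rhs, response = "e2"), data = aux_data)
  n <- length(residuals(model))
  stat <- n * summary(aux)$r.squared                # White statistic: n * R^2
  df <- aux$rank - 1                                # redundant (aliased) terms are not counted
  list(statistic = stat, df = df,
       p.value = pchisq(stat, df = df, lower.tail = FALSE))
}

data(mtcars)
lmmodel <- lm(mpg ~ disp + hp + am, data = mtcars)
pure <- white_test(lmmodel, mtcars, cross = FALSE)  # pure heteroscedasticity test
full <- white_test(lmmodel, mtcars, cross = TRUE)   # heteroscedasticity + specification bias
str(pure)
str(full)
```

For this model the helper reports 5 and 8 degrees of freedom. The squared term of the 0/1 variable `am` is identical to `am`, so it adds no information.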

This tutorial will show you how to run White’s test in R to see if heteroscedasticity is an issue in a given regression model.


Calculate White’s Test in R

In this example, we’ll use the built-in R dataset mtcars to fit a multiple linear regression model.

After fitting the model, we’ll use the bptest() function from the lmtest package to run White’s test and check whether heteroscedasticity is present.

Hypotheses:

The null and alternative hypotheses for White’s test are as follows:

Null (H0): Homoscedasticity is present.

Alternative (H1): Homoscedasticity is not present.
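The hypotheses can be stated precisely using the usual textbook formulation of White’s test. The squared OLS residuals are regressed on the regressors, their squares and their pairwise cross-products, here with x_1 = disp, x_2 = hp and x_3 = am. Under H0, n times the R² of this auxiliary regression is approximately chi-squared with k degrees of freedom. Here k is the number of non-redundant auxiliary regressors, excluding the intercept. Any redundant term is dropped; for example, x_3^2 duplicates x_3 because am is a 0/1 variable. The bptest() call in Step 2 also includes the three-way term disp:hp:am, so its auxiliary regression is slightly larger than this textbook version.

```latex
\hat{u}_i^2 = \alpha_0 + \alpha_1 x_{1i} + \alpha_2 x_{2i} + \alpha_3 x_{3i}
            + \alpha_4 x_{1i}^2 + \alpha_5 x_{2i}^2 + \alpha_6 x_{3i}^2
            + \alpha_7 x_{1i} x_{2i} + \alpha_8 x_{1i} x_{3i} + \alpha_9 x_{2i} x_{3i} + v_i

H_0:\ \alpha_1 = \alpha_2 = \cdots = \alpha_9 = 0 \quad \text{(homoscedasticity)}

W = n R^2_{\text{aux}} \;\overset{a}{\sim}\; \chi^2_{k} \quad \text{under } H_0
```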

Step 1: Fit a regression model.

We will first fit a regression model with mpg as the response variable and disp, hp, and am as the explanatory variables.

data(mtcars)
head(mtcars)

                   mpg cyl disp  hp drat    wt  qsec vs am gear carb
Mazda RX4         21.0   6  160 110 3.90 2.620 16.46  0  1    4    4
Mazda RX4 Wag     21.0   6  160 110 3.90 2.875 17.02  0  1    4    4
Datsun 710        22.8   4  108  93 3.85 2.320 18.61  1  1    4    1
Hornet 4 Drive    21.4   6  258 110 3.08 3.215 19.44  1  0    3    1
Hornet Sportabout 18.7   8  360 175 3.15 3.440 17.02  0  0    3    2
Valiant           18.1   6  225 105 2.76 3.460 20.22  1  0    3    1
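One of the three explanatory variables, am, is a binary indicator (0 = automatic, 1 = manual transmission). This matters later, when White’s test adds squared terms. The quick base-R check below shows that squaring am leaves it unchanged, so a term like I(am^2) carries no new information.

```r
data(mtcars)

# am is coded 0 = automatic, 1 = manual
table(mtcars$am)

# For a 0/1 variable, squaring changes nothing
identical(mtcars$am^2, mtcars$am)
```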

Now we can fit the regression model. This is the same model we used in an earlier post.

lmmodel <- lm(mpg ~ disp + hp + am, data = mtcars)
lmmodel

Call:
lm(formula = mpg ~ disp + hp + am, data = mtcars)

Coefficients:
(Intercept)         disp           hp           am  
   27.86623     -0.01406     -0.04140      3.79623  

Let’s look at the model summary.

summary(lmmodel)

Call:
lm(formula = mpg ~ disp + hp + am, data = mtcars)

Residuals:
    Min      1Q  Median      3Q     Max 
-4.0480 -2.1992 -0.1692  1.6591  5.9285 

Coefficients:
             Estimate Std. Error t value Pr(>|t|)    
(Intercept) 27.866228   1.619677  17.205   <2e-16 ***
disp        -0.014064   0.009088  -1.547   0.1330    
hp          -0.041401   0.013660  -3.031   0.0052 ** 
am           3.796227   1.423995   2.666   0.0126 *  
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 2.842 on 28 degrees of freedom
Multiple R-squared:  0.7992,	Adjusted R-squared:  0.7777 
F-statistic: 37.15 on 3 and 28 DF,  p-value: 6.766e-10
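Before running a formal test, it can help to look at the residuals informally. The sketch below refits the Step 1 model and plots residuals against fitted values with base R graphics. This is an informal visual check, not part of White’s test itself. A funnel shape, where the spread grows or shrinks with the fitted values, would suggest heteroscedasticity.

```r
data(mtcars)
lmmodel <- lm(mpg ~ disp + hp + am, data = mtcars)

res <- residuals(lmmodel)
fit <- fitted(lmmodel)

# Residuals vs. fitted values; the dashed line marks zero
plot(fit, res, xlab = "Fitted mpg", ylab = "Residual",
     main = "Residuals vs. fitted")
abline(h = 0, lty = 2)
```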

Step 2: Perform White’s test.

We will use the following syntax to perform White’s test to see if heteroscedasticity exists.

First, we need to load the lmtest package:

library(lmtest)

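Now we can perform White’s test. bptest() runs it when we pass an auxiliary formula containing the regressors, their squares, and their cross-products: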

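# White's test via bptest(): the auxiliary formula contains the regressors,
# their squares and their interactions. disp*hp*am expands to the main effects,
# all pairwise interactions and the three-way term disp:hp:am. Because am is a
# 0/1 dummy, I(am^2) duplicates am and does not add a degree of freedom.
bptest(lmmodel, ~ disp*hp*am + I(disp^2) + I(hp^2) + I(am^2), data = mtcars)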

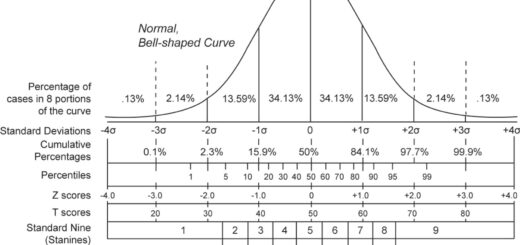

	studentized Breusch-Pagan test

data:  lmmodel
BP = 13.745, df = 9, p-value = 0.1317
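Where does df = 9 come from? The sketch below builds the design matrix of the auxiliary regression with the same right-hand side used in the bptest() call and counts its linearly independent columns. This assumes the degrees of freedom equal the rank of that matrix minus one for the intercept. The matrix has an intercept plus ten terms, but I(am^2) is an exact copy of am.

```r
data(mtcars)

# Design matrix of the auxiliary regression used in the bptest() call above
Z <- model.matrix(~ disp*hp*am + I(disp^2) + I(hp^2) + I(am^2), data = mtcars)

ncol(Z)          # 11 columns: intercept + 10 terms
colnames(Z)

# Rank counts only linearly independent columns (I(am^2) duplicates am)
qr(Z)$rank
qr(Z)$rank - 1   # degrees of freedom of the test (intercept excluded)
```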

Inference:

The test statistic is 13.745 with 9 degrees of freedom, and the corresponding p-value is 0.1317.

We cannot reject the null hypothesis because the p-value is greater than 0.05. We do not have enough evidence to conclude that heteroscedasticity exists in the regression model.
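The decision rule can be reproduced from the statistic and degrees of freedom alone. The p-value is the upper-tail probability of a chi-squared distribution with 9 degrees of freedom. Equivalently, the statistic can be compared with the 5% critical value. This sketch plugs in the rounded statistic printed above, so its p-value may differ from 0.1317 in the last digits.

```r
bp_stat <- 13.745   # BP statistic reported above (rounded)
df      <- 9

# p-value: upper-tail probability of a chi-squared distribution with 9 df
p_value <- pchisq(bp_stat, df = df, lower.tail = FALSE)

# Equivalent decision via the 5% critical value
crit <- qchisq(0.95, df = df)

if (p_value < 0.05) {
  message("Reject H0: evidence of heteroscedasticity")
} else {
  message("Fail to reject H0: no evidence of heteroscedasticity")
}
c(p_value = p_value, critical_value = crit)
```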

What Should You Do Next?

If you fail to reject the null hypothesis of White’s test, there is no evidence of heteroscedasticity, and you can proceed to interpret the original regression output.

If you reject the null hypothesis, this indicates that heteroscedasticity exists in the data. In this case, the standard errors shown in the regression output table may be unreliable.

There are a few common solutions to this problem, including:

1. Transform the response variable.

You can try transforming the response variable, for example by taking its log, square root, or cube root. This often makes the heteroscedasticity disappear.

2. Use weighted regression.

Weighted regression gives each data point a weight based on the variance of its fitted value.

Essentially, data points with higher variances receive smaller weights, which shrinks the contribution of their squared residuals to the fit.

With appropriate weights, the problem of heteroscedasticity can be eliminated. These two approaches are the ones most commonly used when heteroscedasticity is detected in data.
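Both remedies are sketched below in base R for the tutorial’s model.

- **Transformation.** The model is refitted with log(mpg) as the response.
- **Weighted regression.** This uses feasible GLS, one common weighting scheme, assumed here and not necessarily the one the post has in mind. The log of the squared OLS residuals is modeled as a linear function of the fitted values. Each point is then weighted by one over the exponentiated prediction, which keeps every weight positive.

After either refit, the White’s test call from Step 2 can be rerun on the new model.

```r
data(mtcars)
ols <- lm(mpg ~ disp + hp + am, data = mtcars)

# Remedy 1: transform the response (log shown; sqrt() is another option)
log_model <- lm(log(mpg) ~ disp + hp + am, data = mtcars)

# Remedy 2: weighted least squares via feasible GLS (assumed weighting scheme).
# Model log(residual^2) as a linear function of the fitted values;
# exponentiating the predictions keeps the estimated variances positive.
res <- residuals(ols)
fit <- fitted(ols)
var_model <- lm(log(res^2) ~ fit)
w <- 1 / exp(fitted(var_model))     # weight = 1 / estimated error variance

wls_model <- lm(mpg ~ disp + hp + am, data = mtcars, weights = w)
summary(wls_model)

# With lmtest loaded, a refitted model can be re-checked the same way, e.g.
# bptest(log_model, ~ disp*hp*am + I(disp^2) + I(hp^2) + I(am^2), data = mtcars)
```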
