The Story of P-Hacking

Imagine you’re on a treasure hunt, searching for clues that prove your hypothesis is correct.

You try many different paths, tweak your map, and test various routes, hoping to find that one shiny gem—your statistically significant result.

But what if some of those clues are just shiny illusions, tricks of chance rather than real discoveries?

Welcome to the world of p-hacking.

What Is P-Hacking, and Why Should You Care?

In the realm of scientific inquiry, researchers rely heavily on a statistical measure called the p-value to judge whether their findings could plausibly be flukes.

The p-value is the probability of seeing a result at least as extreme as the one observed if there were truly no effect. A p-value below 0.05 is conventionally labeled “statistically significant,” meaning such data would be unusual under random noise alone.

However, when scientists — intentionally or not — chase that elusive threshold by testing multiple hypotheses or slicing data in various ways, they risk falling into the trap of p-hacking.

P-hacking is the practice of manipulating data analysis until a desired, statistically significant result appears.

It’s like adjusting the lens until the image looks perfect, even if the actual scene doesn’t support it.

This practice inflates the chances of false positives: “discoveries” that seem real but are just lucky coincidences.
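To put a number on that inflation, here is a minimal sketch. It assumes every test is independent and that no real effect exists anywhere, which is a simplification; the function name `familywise_error_rate` is ours, chosen for illustration.

```python
def familywise_error_rate(num_tests: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across independent tests
    when every null hypothesis is true: 1 - (1 - alpha) ** num_tests."""
    return 1 - (1 - alpha) ** num_tests


for m in (1, 5, 20, 100):
    rate = familywise_error_rate(m)
    print(f"{m:>3} tests -> {rate:.1%} chance of at least one false positive")
```

Under these assumptions, a single test carries the advertised 5% risk, while 20 tests push the chance of at least one spurious “discovery” to roughly 64%.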

How Does P-Hacking Occur in Practice?

P-hacking isn’t always malicious; sometimes, it’s born out of curiosity, pressure to publish, or a lack of awareness about statistical pitfalls.

Here are common ways it manifests:

- Selective Reporting: Conducting multiple tests and only sharing the ones that yield p < 0.05.

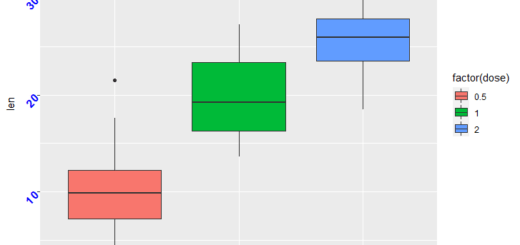

- Optional Stopping (a form of data dredging): Checking initial results, then adding more data or participants until the results look “significant.”

- Changing Definitions: Switching how variables are measured or categorized until a significant relationship emerges.

- Data Tweaking: Removing outliers or focusing on specific subgroups post-hoc to achieve significance.

All these tactics can distort the true story behind the data, leading to results that can’t be reliably reproduced elsewhere.
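One tactic from the list, adding participants until the result looks significant, is easy to simulate. The sketch below is illustrative only: the batch sizes, the cap of 100 per group, and the helper name `optional_stopping_trial` are assumptions, not values from any particular study. Both groups come from the same distribution, so every “hit” is a false positive.

```python
import numpy as np
from scipy import stats


def optional_stopping_trial(rng, start_n=20, step=10, max_n=100, alpha=0.05):
    """Return True if peeking after every batch ever yields p < alpha."""
    a = rng.normal(50, 10, start_n)
    b = rng.normal(50, 10, start_n)
    while True:
        if stats.ttest_ind(a, b).pvalue < alpha:
            return True  # stop and "publish" as soon as it looks significant
        if len(a) >= max_n:
            return False  # ran out of budget without a significant result
        # Not significant yet: recruit another batch for each group and retest
        a = np.concatenate([a, rng.normal(50, 10, step)])
        b = np.concatenate([b, rng.normal(50, 10, step)])


rng = np.random.default_rng(0)
n_studies = 2000
hits = sum(optional_stopping_trial(rng) for _ in range(n_studies))
optional_stopping_rate = hits / n_studies
print(f"False positive rate with peeking: {optional_stopping_rate:.1%}")
```

Each simulated study peeks up to nine times, and the share of studies that end “significant” should land well above the nominal 5%.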

Why Is This a Big Deal?

Science builds on trust. When findings can’t be replicated, it erodes confidence in research.

P-hacking creates a mirage of discoveries—findings that seem solid but are actually statistical artifacts.

This not only wastes resources but can also mislead policymakers, clinicians, and the public.

Most p-hacking isn’t driven by bad intentions.

Often, researchers are simply trying to explore interesting patterns or respond to the publishing system that favors novel, significant results.

But regardless of intent, the consequences are the same: distorted science.

The Power of Flexibility: Researchers’ Degrees of Freedom

In their influential 2011 paper, Simmons, Nelson, and Simonsohn introduced the idea of researcher degrees of freedom—the many choices scientists make during data analysis.

When these choices are made after seeing the data, they can artificially inflate the likelihood of false positives.

Think of it as a game of “choose your own adventure,” where each decision—when to stop collecting data, how to handle outliers, which variables to include—can sway the final story.

The problem arises when researchers don’t pre-register their plans, leaving room for flexible decisions that unintentionally lead to misleading results.
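A rough sketch of how these choices add up, under assumptions of our own: two outcome measures correlated at about 0.5, an optional rule that trims values beyond 2 standard deviations, and 30 participants per group. None of these settings come from the 2011 paper; they only illustrate the mechanism. The “planned” analysis is the first outcome without trimming; the “flexible” analyst reports whichever of six analyses gives the smallest p-value.

```python
import numpy as np
from scipy import stats


def trim_outliers(x, z=2.0):
    """Drop values more than z standard deviations from the sample mean."""
    return x[np.abs(x - x.mean()) <= z * x.std()]


def flexible_analysis_pvalues(rng, n=30):
    """p-values from six plausible-looking analyses of one null dataset."""
    cov = [[1.0, 0.5], [0.5, 1.0]]  # two outcomes, correlation 0.5, no group effect
    a = rng.multivariate_normal([0, 0], cov, n)
    b = rng.multivariate_normal([0, 0], cov, n)
    outcomes = [
        (a[:, 0], b[:, 0]),                # outcome 1 (the planned analysis)
        (a[:, 1], b[:, 1]),                # outcome 2
        (a.mean(axis=1), b.mean(axis=1)),  # average of both outcomes
    ]
    pvals = []
    for xa, xb in outcomes:
        pvals.append(stats.ttest_ind(xa, xb).pvalue)
        pvals.append(stats.ttest_ind(trim_outliers(xa), trim_outliers(xb)).pvalue)
    return pvals


rng = np.random.default_rng(1)
n_studies = 2000
planned = flexible = 0
for _ in range(n_studies):
    pvals = flexible_analysis_pvalues(rng)
    planned += int(pvals[0] < 0.05)     # one pre-specified test
    flexible += int(min(pvals) < 0.05)  # best of six analyses

planned_rate = planned / n_studies
flexible_rate = flexible / n_studies
print(f"Planned analysis:  {planned_rate:.1%} false positives")
print(f"Flexible analysis: {flexible_rate:.1%} false positives")
```

The planned analysis should stay near 5%, while picking the best of six correlated analyses raises the rate noticeably, even though each individual choice looks defensible.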

A Simple Simulation: Seeing P-Hacking in Action

To illustrate this, let’s consider a Python experiment.

Imagine two groups of data, both drawn from the same distribution—no real difference exists.

But if we run many tests, slicing and dicing the data in various ways, we might find some tests that show a significant difference purely by chance.

Here’s a simplified code snippet:

    import random

    import numpy as np
    import scipy.stats as stats

    # Seed both generators: NumPy draws the data, `random` draws the subsets
    np.random.seed(415)
    random.seed(415)

    # Generate two groups from the same distribution (no true difference)
    group_a = np.random.normal(50, 10, 100)
    group_b = np.random.normal(50, 10, 100)

    significant_count = 0
    tests_run = 1000

    for _ in range(tests_run):
        # Randomly sample subsets of varying size from each group
        slice_a = random.sample(list(group_a), k=random.randint(20, 100))
        slice_b = random.sample(list(group_b), k=random.randint(20, 100))

        # Run an independent two-sample t-test on the subsets
        t_stat, p = stats.ttest_ind(slice_a, slice_b)

        if p < 0.05:
            significant_count += 1

    print(f"Out of {tests_run} tests, {significant_count} were significant.")
    print(f"False positive rate: {significant_count / tests_run * 100:.2f}%")

Even though there’s no real difference between the groups, running multiple tests increases the chance of finding a “significant” result somewhere by pure luck.

This demonstrates how p-hacking can lead researchers astray.
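The snippet above re-slices one fixed pair of samples, so its 1,000 tests overlap heavily and the printed rate depends on that particular draw. As a complementary sketch, the variant below draws fresh null data for every comparison. The setup is an assumption chosen for illustration: 1,000 simulated studies, each running 20 unrelated comparisons with 50 participants per group.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)
n_studies, tests_per_study, n = 1000, 20, 50

# Shape (studies, tests, participants): every comparison gets fresh null data
a = rng.normal(50, 10, (n_studies, tests_per_study, n))
b = rng.normal(50, 10, (n_studies, tests_per_study, n))

# One t-test per (study, test) pair, computed along the participant axis
pvals = stats.ttest_ind(a, b, axis=2).pvalue

per_test_rate = (pvals < 0.05).mean()             # share of all tests that are significant
any_hit_rate = (pvals < 0.05).any(axis=1).mean()  # share of studies with at least one hit

print(f"Per-test false positive rate:        {per_test_rate:.1%}")
print(f"Studies with at least one 'finding': {any_hit_rate:.1%}")
```

Each individual test should misfire about 5% of the time, as designed. The trouble appears at the study level: with 20 chances, most studies can report at least one “significant” comparison.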

How Do We Protect Against P-Hacking?

The key is to adopt rigorous practices:

- Pre-Registration: Define your hypotheses and analysis plan before looking at data.

- Limit Flexibility: Stick to your original analysis pipeline.

- Adjust for Multiple Testing: Use corrections like Bonferroni to account for multiple comparisons.

- Transparency: Fully report all tests conducted, including those that didn’t yield significant results.

- Replication: Confirm findings through independent studies.
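As a small illustration of adjusting for multiple testing, the sketch below implements the Bonferroni correction by hand, alongside the Holm step-down procedure, a less conservative alternative that this article does not otherwise cover. The p-values are made up for the example, and the function names are ours.

```python
import numpy as np


def bonferroni(pvals, alpha=0.05):
    """Reject a null hypothesis only if its p-value is at most alpha / m."""
    pvals = np.asarray(pvals, dtype=float)
    return pvals <= alpha / len(pvals)


def holm(pvals, alpha=0.05):
    """Holm step-down: compare the k-th smallest p-value to alpha / (m - k + 1)
    and stop at the first one that fails."""
    pvals = np.asarray(pvals, dtype=float)
    m = len(pvals)
    reject = np.zeros(m, dtype=bool)
    for rank, idx in enumerate(np.argsort(pvals)):
        if pvals[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # every larger p-value is retained as well
    return reject


# Hypothetical p-values from five tests in a single study
p = [0.001, 0.012, 0.013, 0.04, 0.20]
print("Uncorrected:", [bool(x < 0.05) for x in p])
print("Bonferroni: ", bonferroni(p).tolist())
print("Holm:       ", holm(p).tolist())
```

Without correction, four of the five tests look significant. Bonferroni keeps only the first, and Holm keeps the first three. Both control the chance of any false positive across the whole family of tests.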

Final Thoughts: Cultivating Honest Science

P-hacking isn’t just a technical flaw; it’s a challenge to the integrity of scientific discovery.

By understanding how flexible analysis choices can mislead, researchers can adopt better practices that promote genuine, reproducible insights.

Remember: science isn’t about cherry-picking significant results. It’s about honest exploration—acknowledging uncertainty, rigorously testing hypotheses, and building knowledge that stands the test of time.

So next time you see a striking “p < 0.05” in a paper, ask yourself: was this a genuine discovery or a product of clever slicing and dicing?

The answer might just shape the future of trustworthy science.

