Stepwise Selection in Regression Analysis with R

A regression model is a powerful tool for understanding the relationship between a response variable and one or more predictor variables.

One of the notable techniques for building regression models is stepwise selection. This method systematically adds or removes predictor variables, aiming to identify those that significantly influence the response variable.

In this article, we will delve into the backward selection method of stepwise selection, demonstrating how it works with the built-in mtcars dataset in R.

What is Stepwise Selection?

Stepwise selection is a systematic approach to constructing a regression model. The primary goal is to include all predictor variables that have a statistically significant relationship with the response variable while excluding those that do not.
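Stepwise selection can add predictors to a small model or remove them from a large one. As a rough illustration of both directions on the mtcars data used in this article, the sketch below runs R's `step` function each way. The object names (`full_model`, `forward_fit`, `backward_fit`) are chosen here for illustration only, and the two directions are not guaranteed to arrive at the same model.

```r
# Smallest and largest candidate models for mpg in mtcars
intercept_only <- lm(mpg ~ 1, data = mtcars)
full_model <- lm(mpg ~ ., data = mtcars)

# Forward: start from the intercept-only model and add predictors,
# never going beyond the terms in the full model (the upper scope)
forward_fit <- step(intercept_only, direction = "forward",
                    scope = formula(full_model), trace = 0)

# Backward: start from the full model and remove predictors
backward_fit <- step(full_model, direction = "backward", trace = 0)

# Compare the formulas each direction settles on
formula(forward_fit)
formula(backward_fit)
```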

Key Steps in Backward Selection

Backward selection starts with a model that includes all potential predictor variables and removes them one at a time based on specific criteria. Here’s a brief overview of the process:

- Fit an Initial Model: Start by fitting a regression model that includes all potential predictors.

- Evaluate Model Quality: Calculate a quality metric, such as the Akaike Information Criterion (AIC), to assess the model’s performance.

- Remove Predictors: Refit the model with each predictor dropped in turn, and remove the predictor whose removal lowers the AIC the most.

- Repeat: Continue this process until removing any remaining predictor would no longer lower the AIC.
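The steps above can be sketched as an explicit loop. This is a minimal hand-rolled version for illustration, assuming AIC as the only criterion; it is not how `step` is implemented internally, and the variable names (`current`, `candidates`, `best`) are made up for this example. It relies on `drop1`, which reports the AIC of the model obtained by dropping each term, with the row `<none>` standing for the current model.

```r
# Start from the model with every predictor
current <- lm(mpg ~ ., data = mtcars)

repeat {
  # AIC after dropping each single term; row "<none>" is the current model
  candidates <- drop1(current)
  best <- rownames(candidates)[which.min(candidates$AIC)]

  # Stop when keeping the current model gives the lowest AIC
  if (best == "<none>") break

  # Otherwise remove the term whose removal gives the lowest AIC
  current <- update(current, as.formula(paste(". ~ . -", best)))
}

formula(current)
```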

How to Perform Backward Selection in R: A Practical Example

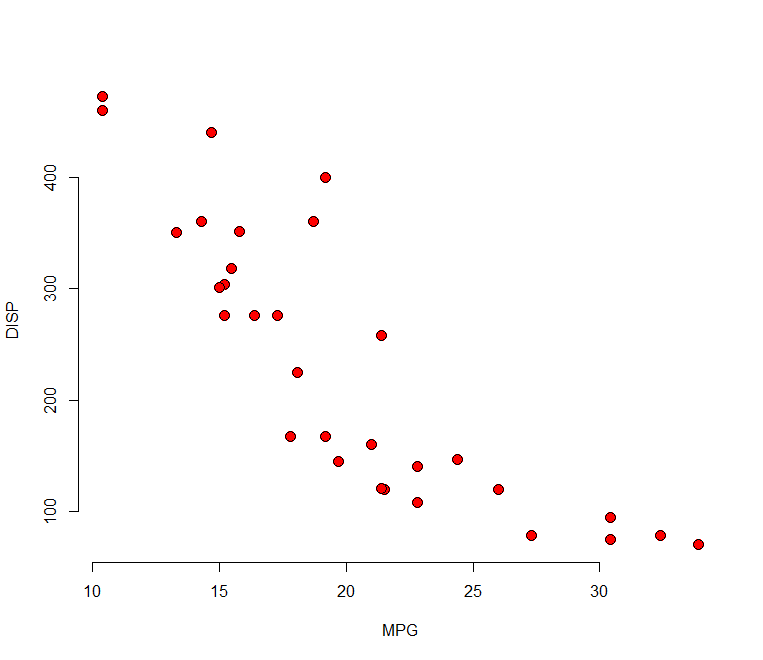

Using the mtcars dataset, we will demonstrate backward selection in R. The dataset contains several attributes of different car models, and we will use mpg (miles per gallon) as our response variable while considering the other variables as potential predictors.

Step-by-Step Implementation

- Load the Data: The mtcars dataset ships with R, so no import is needed. Start by viewing its first few rows.

# View first six rows of mtcars

head(mtcars)

- Define the Models: Create the intercept-only model and the full model using all predictors.

# Define intercept-only model

intercept_only <- lm(mpg ~ 1, data = mtcars)

# Define model with all predictors

all <- lm(mpg ~ ., data = mtcars)

- Perform Backward Stepwise Regression: Use the step function, specifying the direction as backward.

# Perform backward stepwise regression

backward <- step(all, direction = 'backward', scope = formula(all), trace = 0)

- View Results: Assess the results of the backward selection process.

# View results of backward stepwise regression

backward$anova

- Final Model Summary: Obtain the coefficients of the final model after the selection process.

# View final model

backward$coefficients

Interpretation of the Results

After running the backward selection procedure, we observe the following:

- The initial model included all 10 predictors, and we calculated its AIC.

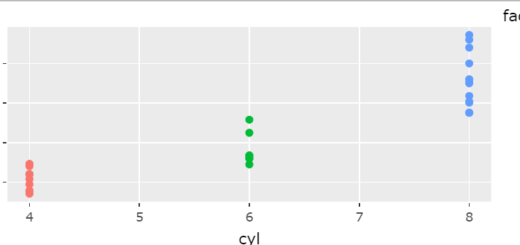

- The first variable removed was cyl because its removal resulted in the largest AIC reduction.

- Subsequent variables were eliminated one at a time (vs, carb, gear, and so on), each time removing the predictor whose removal lowered the AIC the most.

- This process continued until removing any remaining predictor would no longer lower the AIC.
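The first removal can be checked directly. As a small sketch (the names `full_model` and `first_step` are introduced here for illustration), `drop1` lists the AIC that would result from dropping each predictor from the full model; sorting by that column puts the best candidate for removal at the top.

```r
# Full model with all 10 predictors
full_model <- lm(mpg ~ ., data = mtcars)

# One row per candidate removal, plus "<none>" for the unchanged model
first_step <- as.data.frame(drop1(full_model))

# Lowest AIC first: the top row is the predictor removed in the first step
first_step[order(first_step$AIC), ]
```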

The final regression model is expressed as:

mpg=9.62−3.92⋅wt+1.23⋅qsec+2.94⋅am
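To see where these numbers come from, the selected model can be refit on its own and its rounded coefficients compared with the equation above. The sketch below also uses the model for a prediction; the car in `new_car` is a hypothetical example invented here, not a row from the dataset.

```r
# Refit the model chosen by backward selection
final_model <- lm(mpg ~ wt + qsec + am, data = mtcars)

# Coefficients rounded to two decimals, as in the equation above
round(coef(final_model), 2)

# Predicted mpg for a hypothetical car: 3,000 lbs (wt is in 1000 lbs),
# an 18-second quarter mile, manual transmission (am = 1)
new_car <- data.frame(wt = 3.0, qsec = 18, am = 1)
predict(final_model, newdata = new_car)
```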

A Note on AIC and Other Metrics

In our example, we used AIC as the benchmark for model selection. AIC quantifies the trade-off between the goodness of fit and model complexity, helping to prevent overfitting. The formula for AIC is:

AIC=2K−2ln(L)

Where:

- K represents the number of model parameters.

- ln(L) is the log-likelihood of the model, a measure of how well the model fits the data.
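As a quick check of the formula, the AIC of a fitted model can be computed by hand from its log-likelihood. This is an illustrative sketch with variable names chosen here. One caveat: the AIC values printed by `step` come from `extractAIC`, which for linear models omits constant terms, so they differ from `AIC()` by a fixed amount when the sample size is fixed. The ranking of models is unaffected.

```r
final_model <- lm(mpg ~ wt + qsec + am, data = mtcars)

# Log-likelihood and the number of estimated parameters K
log_lik <- logLik(final_model)
K <- attr(log_lik, "df")  # 4 coefficients plus the error variance

# AIC = 2K - 2 ln(L)
manual_aic <- 2 * K - 2 * as.numeric(log_lik)

manual_aic
AIC(final_model)         # should match manual_aic
extractAIC(final_model)  # the scale used in the step() output
```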

While AIC is popular for model selection, other metrics like BIC, Cross-Validation Error, or Adjusted R² can also be utilized depending on the specific analysis needs.
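For instance, `step` can select by BIC instead of AIC through its `k` argument, which sets the penalty per parameter (2 for AIC, log(n) for BIC). The following is a minimal sketch; `full_model` and `backward_bic` are names chosen here, and because the BIC penalty is heavier, it may keep the same predictors as AIC or fewer.

```r
full_model <- lm(mpg ~ ., data = mtcars)
n <- nrow(mtcars)

# k = log(n) turns the penalty used by step() into the BIC penalty
backward_bic <- step(full_model, direction = "backward",
                     k = log(n), trace = 0)

formula(backward_bic)
```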

Conclusion

Stepwise selection, particularly backward selection, is a powerful method for optimizing regression models.

Using R’s built-in step function and the mtcars dataset, we have illustrated a systematic, AIC-based approach to deciding which predictor variables to keep in a model.

Whether you are a researcher or a data enthusiast, mastering these techniques will significantly bolster your analytical skills in regression modeling.

If you’re looking to implement backward selection in your analyses, R provides the tools you need to streamline this process effectively!