The Role of Shapley Values in Explainable AI

In the rapidly evolving landscape of machine learning and artificial intelligence, models are becoming increasingly powerful and complex.

While these advanced models can produce highly accurate predictions, their decision-making processes often remain opaque—raising critical questions about interpretability and trust.

To address this challenge, explainable AI (XAI) has emerged as a field of its own, and Shapley values have become one of its central tools for quantifying how input features influence model predictions.

This article explores the foundational principles of Shapley values, their extension into AI interpretability via SHAP, and how these approaches enhance transparency in predictive modeling.

The Essence of Shapley Values

When deploying predictive models—whether for credit scoring, medical diagnosis, or dynamic pricing—stakeholders often seek not just the prediction but also the rationale behind it.

Traditional models like linear regression offer straightforward interpretability, but more sophisticated algorithms such as random forests and neural networks tend to operate as “black boxes.”

Shapley values, rooted in cooperative game theory, present a principled solution to this interpretability dilemma.

Originally devised to fairly distribute payoffs among players in a cooperative setting, Shapley values have been adapted for statistics and machine learning to quantify each feature’s contribution to a prediction.

Intuitive Perspective:

Imagine each feature in a dataset as a player in a game collaborating to produce an outcome.

The Shapley value for a feature measures its weighted average marginal contribution to the prediction across all possible subsets of the other features.

It answers the question: How much does this feature add to the prediction, considering all the ways features can interact?

Mathematical Foundation:

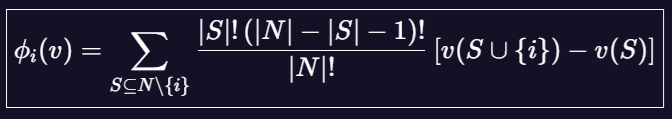

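The Shapley value \(\phi_i(v)\) of the i-th feature is defined as:

\[
\phi_i(v) = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(|N| - |S| - 1)!}{|N|!} \left[ v(S \cup \{i\}) - v(S) \right]
\]

Here,

- \(N\) represents the set of all features,

- \(S\) is a subset of features excluding \(i\),

- \(v(S)\) is a value function indicating the model’s output when only features in \(S\) are known.

This formula computes a weighted average of the marginal contribution \(v(S \cup \{i\}) - v(S)\) of feature \(i\) over all possible feature subsets. The weights are equivalent to averaging over every order in which the features could be added, which is what ensures fairness and consistency in attribution.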
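The definition above can be evaluated directly when the number of features is small. The following is a minimal sketch that enumerates every subset; the function name `shapley_values` and the three-feature value table `TOY_GAME` are hypothetical and chosen only for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, v):
    """Exact Shapley values by enumerating every subset of the other players."""
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):
            # The weight |S|! (|N| - |S| - 1)! / |N|! depends only on |S|.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of i when added to subset S.
                total += weight * (v(s | {i}) - v(s))
        phi[i] = total
    return phi


# Hypothetical value function v(S) for three features "a", "b", "c".
# Feature "c" never changes the output; "a" and "b" interact.
TOY_GAME = {
    frozenset(): 0.0,
    frozenset({"a"}): 10.0,
    frozenset({"b"}): 20.0,
    frozenset({"c"}): 0.0,
    frozenset({"a", "b"}): 40.0,
    frozenset({"a", "c"}): 10.0,
    frozenset({"b", "c"}): 20.0,
    frozenset({"a", "b", "c"}): 40.0,
}

phi = shapley_values(["a", "b", "c"], TOY_GAME.__getitem__)
print(phi)
```

In this toy table the interaction bonus between "a" and "b" is split evenly between them, and "c" receives zero. The enumeration visits 2^(|N|-1) subsets per feature, so this approach is only practical for a handful of features.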

Properties of Shapley Values:

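- Fairness: Features that contribute equally to every subset receive equal credit, and a feature that never changes the output receives zero.

- Consistency: If a model changes so that a feature’s marginal contribution increases or stays the same for every subset of the other features, its Shapley value does not decrease.

- Completeness: The sum of all feature contributions equals the difference between the model’s output for the instance and a baseline output (often the average prediction).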
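As a quick check of the completeness property, consider a hypothetical two-feature case. The four values of the value function below are invented for illustration, with the empty set playing the role of the baseline. With two features, each of the two possible subsets receives weight 1/2:

```latex
\begin{align*}
v(\emptyset) &= 10, \quad v(\{1\}) = 14, \quad v(\{2\}) = 12, \quad v(\{1,2\}) = 20 \\
\phi_1 &= \tfrac{1}{2}\,[v(\{1\}) - v(\emptyset)] + \tfrac{1}{2}\,[v(\{1,2\}) - v(\{2\})]
        = \tfrac{1}{2}(4) + \tfrac{1}{2}(8) = 6 \\
\phi_2 &= \tfrac{1}{2}\,[v(\{2\}) - v(\emptyset)] + \tfrac{1}{2}\,[v(\{1,2\}) - v(\{1\})]
        = \tfrac{1}{2}(2) + \tfrac{1}{2}(6) = 4 \\
\phi_1 + \phi_2 &= 10 = v(\{1,2\}) - v(\emptyset)
\end{align*}
```

The two contributions add up exactly to the gap between the full prediction and the baseline, as completeness requires.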

From Shapley Values to Explainable AI (XAI)

As machine learning models grow more sophisticated, interpretability tools like Shapley values have become indispensable.

SHAP (SHapley Additive exPlanations) is a framework that turns the concept of Shapley values into practical, scalable tools for model explanation.

What is SHAP?

SHAP translates the theoretical properties of Shapley values into algorithms capable of dissecting complex models—such as deep neural networks or ensemble methods—and visualizing how features influence predictions.

It provides both global insights (feature importance across the dataset) and local explanations (feature impact on individual predictions).
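Exact Shapley values require evaluating the value function on every feature subset, which grows exponentially with the number of features. One generic way to make the computation tractable is to average marginal contributions over randomly sampled feature orderings. The sketch below illustrates that idea only; it is not the SHAP library's implementation, and the names `sample_shapley` and `toy_value` are hypothetical.

```python
import random


def sample_shapley(players, v, n_permutations=2000, seed=0):
    """Monte Carlo estimate of Shapley values via random feature orderings."""
    rng = random.Random(seed)
    players = list(players)
    totals = {p: 0.0 for p in players}
    for _ in range(n_permutations):
        order = players[:]
        rng.shuffle(order)
        coalition = frozenset()
        previous = v(coalition)
        for p in order:
            # Add features one at a time and record each marginal contribution.
            coalition = coalition | {p}
            current = v(coalition)
            totals[p] += current - previous
            previous = current
    return {p: total / n_permutations for p, total in totals.items()}


def toy_value(coalition):
    """Hypothetical value function: additive effects plus an a-b interaction."""
    value = 0.0
    if "a" in coalition:
        value += 10.0
    if "b" in coalition:
        value += 20.0
    if "a" in coalition and "b" in coalition:
        value += 10.0
    return value


estimates = sample_shapley(["a", "b", "c"], toy_value)
print(estimates)
```

Within each sampled ordering the marginal contributions telescope to the difference between the full and empty coalitions, so the estimates satisfy completeness up to floating-point rounding even though each individual value is only approximate. For this toy function the exact values are 15, 25, and 0.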

Practical Applications:

- Summary Plots: Rank features by overall importance and show how each feature’s contributions are distributed across the dataset.

- Dependence Plots: Show how a feature’s value relates to its contribution, revealing nonlinear effects or interactions.

- Force Plots: Illustrate how features push a specific prediction higher or lower relative to a baseline.

Real-World Example:

Consider a model predicting the price of a mobile phone based on features like battery life, camera quality, and screen size. A SHAP summary plot might reveal that screen size and battery life are the most influential features globally.

Meanwhile, a SHAP dependence plot might show that higher battery life generally pushes prices upward, but only beyond a certain threshold, highlighting nonlinear relationships.

For a particular phone, a SHAP explanation could pinpoint that its low camera quality significantly reduces the predicted price, providing transparency and actionable insights.
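The phone example can be sketched in a few lines of plain Python. Everything here is an assumption made for illustration: the price model `predict_price`, the feature names, the reference phone `BASELINE`, and the sample phones are all invented, and "unknown" features are simulated by substituting the baseline value. The sketch does not use the SHAP library; it computes exact Shapley values by enumeration, which is feasible with three features.

```python
from itertools import combinations
from math import factorial

FEATURES = ["battery_hours", "camera_mp", "screen_inches"]

# Hypothetical reference phone used as the baseline (an assumption).
BASELINE = {"battery_hours": 24.0, "camera_mp": 48.0, "screen_inches": 6.0}


def predict_price(phone):
    """Made-up price model, for illustration only."""
    price = 100.0
    price += 40.0 * phone["screen_inches"]
    price += 5.0 * phone["camera_mp"]
    # Battery life only adds value beyond 20 hours (a threshold effect).
    price += 15.0 * max(0.0, phone["battery_hours"] - 20.0)
    return price


def explain(phone):
    """Exact Shapley values of one prediction relative to BASELINE."""
    def v(known):
        # Features outside `known` are replaced by their baseline value.
        mixed = {f: phone[f] if f in known else BASELINE[f] for f in FEATURES}
        return predict_price(mixed)

    n = len(FEATURES)
    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        phi[f] = sum(
            factorial(k) * factorial(n - k - 1) / factorial(n)
            * (v(set(s) | {f}) - v(set(s)))
            for k in range(n)
            for s in combinations(others, k)
        )
    return phi


# Local explanation: how each feature moves one phone's price off the baseline.
phone = {"battery_hours": 30.0, "camera_mp": 12.0, "screen_inches": 6.5}
local = explain(phone)
for feature, value in sorted(local.items(), key=lambda kv: -abs(kv[1])):
    verb = "raises" if value >= 0 else "lowers"
    print(f"{feature}: {verb} the predicted price by {abs(value):.2f}")

# Global view: mean absolute contribution over a small hypothetical dataset.
PHONES = [
    phone,
    {"battery_hours": 18.0, "camera_mp": 64.0, "screen_inches": 6.7},
    {"battery_hours": 26.0, "camera_mp": 50.0, "screen_inches": 5.8},
]
global_importance = {
    f: sum(abs(explain(p)[f]) for p in PHONES) / len(PHONES) for f in FEATURES
}
print(global_importance)
```

The per-phone loop plays the role of a force plot in text form, and the mean absolute contribution is the kind of quantity a summary plot ranks features by. For the first phone, the low camera resolution is the largest single factor pulling the predicted price below the baseline.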

The Impact of Shapley Values and SHAP in Modern AI

By combining the axiomatic guarantees of Shapley values with efficient computation and visualization techniques, SHAP helps data scientists and stakeholders inspect even highly complex models.

This transparency fosters trust, facilitates model validation, and helps make AI decisions justifiable, especially in high-stakes domains like healthcare, finance, and autonomous systems.

In Summary:

- Shapley values offer a principled method to attribute predictions to individual features, ensuring fair and consistent explanations.

- The evolution into SHAP provides scalable, practical tools for interpreting complex models at both global and local levels.

- Together, these methodologies enhance our understanding of AI systems, making them more transparent, trustworthy, and aligned with ethical standards.

As machine learning continues to advance, the synergy of theoretical rigor and interpretability tools like SHAP will remain essential in harnessing AI’s full potential responsibly and effectively.