Regression Analysis Example-Ultimate Guide

Regression Analysis Example, In a recent article, we discussed model fitting and selection. However, we haven’t considered how we’ll choose which variables to include in our model.

Simple Linear Regression in r » Guide »

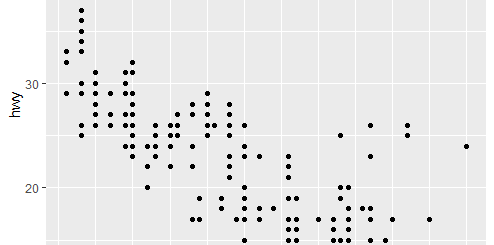

Let’s go over our linear regression model for the mtcars data.frame again.

Regression Analysis Example in R

mtcars.lm <- lm(mpg ~ disp, data=mtcars) summary(mtcars.lm)

Call:

lm(formula = mpg ~ disp, data = mtcars)

Residuals:

Min 1Q Median 3Q Max

-4.8922 -2.2022 -0.9631 1.6272 7.2305

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 29.599855 1.229720 24.070 < 2e-16 ***

disp -0.041215 0.004712 -8.747 9.38e-10 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 3.251 on 30 degrees of freedom

Multiple R-squared: 0.7183, Adjusted R-squared: 0.709

F-statistic: 76.51 on 1 and 30 DF, p-value: 9.38e-10We can observe that disp is a very significant predictor of mpg based on its associated p-value of 9.38e-10. But what exactly do AIC and BIC tell us?

Model Selection in R (AIC Vs BIC) »

broom::glance(lm(mpg ~ cyl + disp + hp + drat + wt + qsec + vs + am + gear + carb, data=mtcars))

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC deviance df.residual nobs

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <int> <int>

1 0.869 0.807 2.65 13.9 0.000000379 10 -69.9 164. 181. 147. 21 32Sure, we can see that AIC = 164 and BIC = 181, but those figures don’t tell us whether disp is a good or bad predictor of mpg.

It’s also unclear which parameter is important. Stepwise regression is one solution to this problem.

1. Stepwise forward regression in R

We can start with a basic model in forward selection.

How to Identify Outliers-Grubbs’ Test in R »

lm<- lm(mpg ~ 1, data=mtcars) summary(lm)

Call:

lm(formula = mpg ~ 1, data = mtcars)

Residuals:

Min 1Q Median 3Q Max

-9.6906 -4.6656 -0.8906 2.7094 13.8094

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 20.091 1.065 18.86 <2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 6.027 on 31 degrees of freedomNow we can calculate AIC (or BIC) for this model.

Log Rank Test in R-Survival Curve Comparison »

AIC(lm) [1] 208.7555

BIC(lm) [1] 211.687

We want to know if adding parameters will improve our model’s explanatory power without introducing undue complexity.

In this dataset consider all variables and choose the model that reduces AIC the most.

We repeat this approach until we run out of variables to add to our model or until no variable with a lower AIC is available to choose from.

We can automate this procedure using the stats package’s step() function.

step(lm, scope=list(upper=lm(mpg ~ ., data=mtcars)), direction="forward")

Start: AIC=115.94

mpg ~ 1

Df Sum of Sq RSS AIC

+ wt 1 847.73 278.32 73.217

+ cyl 1 817.71 308.33 76.494

+ disp 1 808.89 317.16 77.397

+ hp 1 678.37 447.67 88.427

+ drat 1 522.48 603.57 97.988

+ vs 1 496.53 629.52 99.335

+ am 1 405.15 720.90 103.672

+ carb 1 341.78 784.27 106.369

+ gear 1 259.75 866.30 109.552

+ qsec 1 197.39 928.66 111.776

<none> 1126.05 115.943

Step: AIC=73.22

mpg ~ wt

Df Sum of Sq RSS AIC

+ cyl 1 87.150 191.17 63.198

+ hp 1 83.274 195.05 63.840

+ qsec 1 82.858 195.46 63.908

+ vs 1 54.228 224.09 68.283

+ carb 1 44.602 233.72 69.628

+ disp 1 31.639 246.68 71.356

<none> 278.32 73.217

+ drat 1 9.081 269.24 74.156

+ gear 1 1.137 277.19 75.086

+ am 1 0.002 278.32 75.217

Step: AIC=63.2

mpg ~ wt + cyl

Df Sum of Sq RSS AIC

+ hp 1 14.5514 176.62 62.665

+ carb 1 13.7724 177.40 62.805

<none> 191.17 63.198

+ qsec 1 10.5674 180.60 63.378

+ gear 1 3.0281 188.14 64.687

+ disp 1 2.6796 188.49 64.746

+ vs 1 0.7059 190.47 65.080

+ am 1 0.1249 191.05 65.177

+ drat 1 0.0010 191.17 65.198

Step: AIC=62.66

mpg ~ wt + cyl + hp

Df Sum of Sq RSS AIC

<none> 176.62 62.665

+ am 1 6.6228 170.00 63.442

+ disp 1 6.1762 170.44 63.526

+ carb 1 2.5187 174.10 64.205

+ drat 1 2.2453 174.38 64.255

+ qsec 1 1.4010 175.22 64.410

+ gear 1 0.8558 175.76 64.509

+ vs 1 0.0599 176.56 64.654

Call:

lm(formula = mpg ~ wt + cyl + hp, data = mtcars)

Coefficients:

(Intercept) wt cyl hp

38.75179 -3.16697 -0.94162 -0.01804 We can see step() has been calculated for our basic model, as well as AIC for each variable we may add to our model, in the first stage of the process.

Wt has the lowest AIC (73.217), hence it is included in our model. The variables cyl and hp are added in subsequent steps of the procedure.

The model now has mpg wt + cyl + hp and is mpg wt + cyl + hp. We can see that adding more variables will raise the AIC, so we’ll stop here and use this as our final model.

How to Make Boxplot in R-Quick Start Guide »

Please note, the AIC values produced with step() differ from those calculated with glance() or AIC (). This is inconvenient, although the values only differ by a small amount. However, inference won’t change much.

2. Stepwise backward elimination in R

In backward elimination, we can start with a comprehensive model.

lmfull <- lm(mpg ~ ., data=mtcars)

summary(lmfull)

Call:

lm(formula = mpg ~ ., data = mtcars)

Residuals:

Min 1Q Median 3Q Max

-3.4506 -1.6044 -0.1196 1.2193 4.6271

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 12.30337 18.71788 0.657 0.5181

cyl -0.11144 1.04502 -0.107 0.9161

disp 0.01334 0.01786 0.747 0.4635

hp -0.02148 0.02177 -0.987 0.3350

drat 0.78711 1.63537 0.481 0.6353

wt -3.71530 1.89441 -1.961 0.0633 .

qsec 0.82104 0.73084 1.123 0.2739

vs 0.31776 2.10451 0.151 0.8814

am 2.52023 2.05665 1.225 0.2340

gear 0.65541 1.49326 0.439 0.6652

carb -0.19942 0.82875 -0.241 0.8122

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 2.65 on 21 degrees of freedom

Multiple R-squared: 0.869, Adjusted R-squared: 0.8066

F-statistic: 13.93 on 10 and 21 DF, p-value: 3.793e-07AIC(lmfull) [1] 163.7098

BIC(lmfull) [1] 181.2986

Here we want to see if deleting factors will reduce the complexity of our model without reducing its explanatory power.

We repeat this approach until we run out of variables to remove from our model or there are no variables left to remove that reduce AIC.

aggregate Function in R- A powerful tool for data frames »

step(lmfull, scope=list(lower=lm), direction="backward")

Start: AIC=70.9

mpg ~ cyl + disp + hp + drat + wt + qsec + vs + am + gear + carb

Df Sum of Sq RSS AIC

- cyl 1 0.0799 147.57 68.915

- vs 1 0.1601 147.66 68.932

- carb 1 0.4067 147.90 68.986

- gear 1 1.3531 148.85 69.190

- drat 1 1.6270 149.12 69.249

- disp 1 3.9167 151.41 69.736

- hp 1 6.8399 154.33 70.348

- qsec 1 8.8641 156.36 70.765

<none> 147.49 70.898

- am 1 10.5467 158.04 71.108

- wt 1 27.0144 174.51 74.280

Step: AIC=68.92

mpg ~ disp + hp + drat + wt + qsec + vs + am + gear + carb

Df Sum of Sq RSS AIC

- vs 1 0.2685 147.84 66.973

- carb 1 0.5201 148.09 67.028

- gear 1 1.8211 149.40 67.308

- drat 1 1.9826 149.56 67.342

- disp 1 3.9009 151.47 67.750

- hp 1 7.3632 154.94 68.473

<none> 147.57 68.915

- qsec 1 10.0933 157.67 69.032

- am 1 11.8359 159.41 69.384

- wt 1 27.0280 174.60 72.297

Step: AIC=66.97

mpg ~ disp + hp + drat + wt + qsec + am + gear + carb

Df Sum of Sq RSS AIC

- carb 1 0.6855 148.53 65.121

- gear 1 2.1437 149.99 65.434

- drat 1 2.2139 150.06 65.449

- disp 1 3.6467 151.49 65.753

- hp 1 7.1060 154.95 66.475

<none> 147.84 66.973

- am 1 11.5694 159.41 67.384

- qsec 1 15.6830 163.53 68.200

- wt 1 27.3799 175.22 70.410

Step: AIC=65.12

mpg ~ disp + hp + drat + wt + qsec + am + gear

Df Sum of Sq RSS AIC

- gear 1 1.565 150.09 63.457

- drat 1 1.932 150.46 63.535

<none> 148.53 65.121

- disp 1 10.110 158.64 65.229

- am 1 12.323 160.85 65.672

- hp 1 14.826 163.35 66.166

- qsec 1 26.408 174.94 68.358

- wt 1 69.127 217.66 75.350

Step: AIC=63.46

mpg ~ disp + hp + drat + wt + qsec + am

Df Sum of Sq RSS AIC

- drat 1 3.345 153.44 62.162

- disp 1 8.545 158.64 63.229

<none> 150.09 63.457

- hp 1 13.285 163.38 64.171

- am 1 20.036 170.13 65.466

- qsec 1 25.574 175.67 66.491

- wt 1 67.572 217.66 73.351

Step: AIC=62.16

mpg ~ disp + hp + wt + qsec + am

Df Sum of Sq RSS AIC

- disp 1 6.629 160.07 61.515

<none> 153.44 62.162

- hp 1 12.572 166.01 62.682

- qsec 1 26.470 179.91 65.255

- am 1 32.198 185.63 66.258

- wt 1 69.043 222.48 72.051

Step: AIC=61.52

mpg ~ hp + wt + qsec + am

Df Sum of Sq RSS AIC

- hp 1 9.219 169.29 61.307

<none> 160.07 61.515

- qsec 1 20.225 180.29 63.323

- am 1 25.993 186.06 64.331

- wt 1 78.494 238.56 72.284

Step: AIC=61.31

mpg ~ wt + qsec + am

Df Sum of Sq RSS AIC

<none> 169.29 61.307

- am 1 26.178 195.46 63.908

- qsec 1 109.034 278.32 75.217

- wt 1 183.347 352.63 82.790

Call:

lm(formula = mpg ~ wt + qsec + am, data = mtcars)

Coefficients:

(Intercept) wt qsec am

9.618 -3.917 1.226 2.936 We begin with a full regression model (AIC = 70.9) and end with mpg wt + qsec + am (AIC = 61.31). Did you notice in the backward selection we got a different model!.

3. Bidirectional Elimination in R

Assume we already have a model.

lm.mtcars <- lm(mpg ~ disp + cyl + qsec, data=mtcars) summary(lm.mtcars)

We wish to reduce the AIC by adding or removing variables from this model. We may create a new algorithm called bidirectional elimination by combining forward selection and backward elimination.

We will either add a new variable to our model or delete an existing variable from our model at each stage of this new method, taking the action that results in the greatest reduction in AIC.

Radar Chart in R with ggradar » Beautiful Radar Chart in R »

Call:

lm(formula = mpg ~ disp + cyl + qsec, data = mtcars)

Residuals:

Min 1Q Median 3Q Max

-4.6040 -1.8180 -0.5852 1.4493 7.2779

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 40.17553 9.48449 4.236 0.000223 ***

disp -0.01871 0.01082 -1.729 0.094870 .

cyl -1.84802 0.83926 -2.202 0.036071 *

qsec -0.24276 0.40183 -0.604 0.550625

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 3.089 on 28 degrees of freedom

Multiple R-squared: 0.7627, Adjusted R-squared: 0.7372

F-statistic: 29.99 on 3 and 28 DF, p-value: 6.882e-09We add a new variable, wt, to our model in the first step of our bidirectional elimination process.

step(lm.mtcars, scope=list(upper=lmfull,lower=lm), direction="both")

Start: AIC=75.92

mpg ~ disp + cyl + qsec

Df Sum of Sq RSS AIC

+ wt 1 91.588 175.67 64.492

+ carb 1 52.250 215.01 70.958

- qsec 1 3.484 270.74 74.334

+ hp 1 24.724 242.53 74.813

+ am 1 20.026 247.23 75.427

<none> 267.26 75.919

- disp 1 28.525 295.78 77.164

+ drat 1 3.167 264.09 77.538

+ gear 1 1.083 266.17 77.789

+ vs 1 0.000 267.26 77.919

- cyl 1 46.280 313.54 79.030

Step: AIC=64.49

mpg ~ disp + cyl + qsec + wt

Df Sum of Sq RSS AIC

- disp 1 4.936 180.60 63.378

+ am 1 14.254 161.41 63.784

<none> 175.67 64.492

- qsec 1 12.824 188.49 64.746

+ hp 1 6.983 168.69 65.194

+ drat 1 3.448 172.22 65.857

- cyl 1 19.794 195.46 65.908

+ gear 1 1.369 174.30 66.241

+ vs 1 1.259 174.41 66.261

+ carb 1 1.123 174.54 66.286

- wt 1 91.588 267.26 75.919

Step: AIC=63.38

mpg ~ cyl + qsec + wt

Df Sum of Sq RSS AIC

+ am 1 12.820 167.78 63.022

- qsec 1 10.567 191.17 63.198

<none> 180.60 63.378

- cyl 1 14.859 195.46 63.908

+ hp 1 5.385 175.22 64.410

+ disp 1 4.936 175.67 64.492

+ carb 1 4.552 176.05 64.562

+ drat 1 2.669 177.94 64.902

+ vs 1 1.245 179.36 65.157

+ gear 1 0.331 180.27 65.320

- wt 1 115.177 295.78 77.164

Step: AIC=63.02

mpg ~ cyl + qsec + wt + am

Df Sum of Sq RSS AIC

- cyl 1 1.501 169.29 61.307

<none> 167.78 63.022

+ carb 1 9.042 158.74 63.250

- am 1 12.820 180.60 63.378

+ hp 1 7.967 159.82 63.465

+ disp 1 6.371 161.41 63.784

+ gear 1 0.808 166.98 64.868

+ drat 1 0.603 167.18 64.907

+ vs 1 0.268 167.52 64.971

- qsec 1 23.262 191.05 65.177

- wt 1 99.706 267.49 75.947

Step: AIC=61.31

mpg ~ qsec + wt + am

Df Sum of Sq RSS AIC

<none> 169.29 61.307

+ hp 1 9.219 160.07 61.515

+ carb 1 8.036 161.25 61.751

+ disp 1 3.276 166.01 62.682

+ cyl 1 1.501 167.78 63.022

+ drat 1 1.400 167.89 63.042

+ gear 1 0.123 169.16 63.284

+ vs 1 0.000 169.29 63.307

- am 1 26.178 195.46 63.908

- qsec 1 109.034 278.32 75.217

- wt 1 183.347 352.63 82.790

Call:

lm(formula = mpg ~ qsec + wt + am, data = mtcars)

Coefficients:

(Intercept) qsec wt am

9.618 1.226 -3.917 2.936 We eliminate a variable, disp, in the second step. We eventually come to a halt at the model mpg~qsec + wt + am, since there is no variable that can be added or removed to reduce AIC.

Conclusion

In conclusion, you now have three alternative stepwise regression methods to choose from, each of which can produce different results.

How do you decide which to use?

The answer is simple, It’s entirely up to you!. It comes down to personal preference and the problem you’re trying to solve.

Sentiment analysis in R » Complete Tutorial »

![Error in FUN(X[[i]] …) object X not found](https://finnstats.com/wp-content/themes/hueman/assets/front/img/thumb-medium-empty.png)