Intraclass Correlation Coefficient in R-Quick Guide

The Intraclass Correlation Coefficient (ICC) is used to determine whether subjects can be rated reliably by different raters.

In studies with two or more raters or judges, the ICC can also be used for test-retest (repeated measures of the same subject) and intra-rater (multiple scores from the same rater) reliability analysis.

ICC determines the reliability of ratings by comparing the variability of different ratings of the same individual to the total variation across all ratings and all individuals.
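In its simplest (one-way) form, the ICC can be sketched as a variance ratio. The symbols here are illustrative assumptions, not notation from this guide: \sigma_{s}^{2} is the variance between subjects and \sigma_{e}^{2} is the variance among ratings of the same subject.

```latex
\mathrm{ICC} = \frac{\sigma_{s}^{2}}{\sigma_{s}^{2} + \sigma_{e}^{2}}
```

When ratings of the same subject barely differ, \sigma_{e}^{2} is small relative to \sigma_{s}^{2} and the ratio approaches 1.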


An ICC close to 1 indicates high similarity between the raters' scores.

An ICC close to 0 indicates that the raters' scores are not similar.

There are different forms of ICC that can provide different results for the same set of data.

The forms of ICC are defined by the model, the unit of ratings, and the type of relationship considered (consistency or absolute agreement).

One-way random-effects model

In this case, each subject is rated by a different set of randomly chosen raters. Here the raters are treated as random effects.


Two-way random-effects model

In this case, k raters are randomly selected from a larger population of raters with similar characteristics, and each subject is measured by the same set of k raters. In this model, both subjects and raters are treated as random effects.

Two-way mixed-effects model

The two-way mixed-effects model is a less commonly used form of ICC. In this case, the raters are treated as fixed effects. This model is applicable only if the selected raters are the only raters of interest. The results cannot be generalized to other raters, even if those raters have the same characteristics as the raters selected for the reliability experiment.

Unit of ratings

For each of the above-mentioned models, reliability can be estimated based on a single rating or on the average of k ratings.
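The two units are linked: under the usual mean-square formulas, the average-rating ICC follows from the single-rating ICC through the Spearman-Brown formula. A minimal sketch, where `average_icc` is a hypothetical helper name introduced only for illustration:

```r
# Hypothetical helper: Spearman-Brown step-up from a single-rating ICC
# to the reliability of the mean of k ratings.
average_icc <- function(icc_single, k) {
  k * icc_single / (1 + (k - 1) * icc_single)
}

# Reliability of the mean of 4 ratings when a single rating has ICC = 0.5
average_icc(0.5, k = 4)
```

Averaging over more raters raises the reliability, which is why the "average" ICC is never lower than the "single" ICC for a positive single-rating value.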


Consistency or absolute agreement

One-way model: based on absolute agreement.

Two-way models:

- Consistency, when systematic differences between raters are irrelevant.

- Absolute agreement, when systematic differences between raters are relevant.
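The difference between the two definitions can be sketched in base R. The helper `icc_twoway_single` and the toy `ratings` matrix below are hypothetical and exist only for this illustration; the formulas are the standard two-way single-rating forms computed from ANOVA mean squares. Adding a constant to one rater's scores introduces a systematic difference, which should leave the consistency ICC unchanged and lower the agreement ICC.

```r
# Hypothetical helper: two-way, single-rating ICCs from ANOVA mean squares.
# Rows of x are subjects, columns are raters.
icc_twoway_single <- function(x) {
  x <- as.matrix(x)
  n <- nrow(x)  # number of subjects
  k <- ncol(x)  # number of raters
  grand <- mean(x)
  ss_subjects <- k * sum((rowMeans(x) - grand)^2)
  ss_raters   <- n * sum((colMeans(x) - grand)^2)
  ss_error    <- sum((x - grand)^2) - ss_subjects - ss_raters
  msb <- ss_subjects / (n - 1)            # between-subjects mean square
  msr <- ss_raters / (k - 1)              # between-raters mean square
  mse <- ss_error / ((n - 1) * (k - 1))   # residual mean square
  c(
    consistency = (msb - mse) / (msb + (k - 1) * mse),
    agreement   = (msb - mse) / (msb + (k - 1) * mse + k * (msr - mse) / n)
  )
}

# Toy ratings (made up for illustration): 5 subjects, 3 raters
ratings <- cbind(A = c(1, 3, 5, 7, 9),
                 B = c(2, 3, 6, 7, 8),
                 C = c(1, 4, 5, 8, 9))

# Rater C now scores every subject 3 points higher (systematic difference)
shifted <- ratings
shifted[, "C"] <- shifted[, "C"] + 3

original_icc <- icc_twoway_single(ratings)
shifted_icc  <- icc_twoway_single(shifted)
print(round(rbind(original = original_icc, shifted = shifted_icc), 3))
```

The constant shift only changes the between-raters mean square, which appears in the agreement formula but not in the consistency formula.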

ICC Interpretation Guide

The value of an ICC typically lies between 0 and 1, with 0 indicating no reliability among raters and 1 indicating perfect reliability.

According to Koo & Li, an intraclass correlation coefficient can be interpreted as follows:

- Less than 0.50: Poor reliability

- Between 0.5 and 0.75: Moderate reliability

- Between 0.75 and 0.9: Good reliability

- Greater than 0.9: Excellent reliability
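These cut-offs can be applied programmatically. The sketch below uses a hypothetical helper, `interpret_icc`, built on base R's `cut()`. How values exactly on a boundary (0.5, 0.75, 0.9) are classified is an assumption made here: each boundary is assigned to the higher category.

```r
# Hypothetical helper: map ICC values to the reliability labels listed above.
# Assumption: a value exactly on a boundary falls into the higher category.
interpret_icc <- function(icc) {
  cut(icc,
      breaks = c(-Inf, 0.5, 0.75, 0.9, Inf),
      labels = c("poor", "moderate", "good", "excellent"),
      right = FALSE)
}

as.character(interpret_icc(c(0.30, 0.60, 0.828, 0.95)))
```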

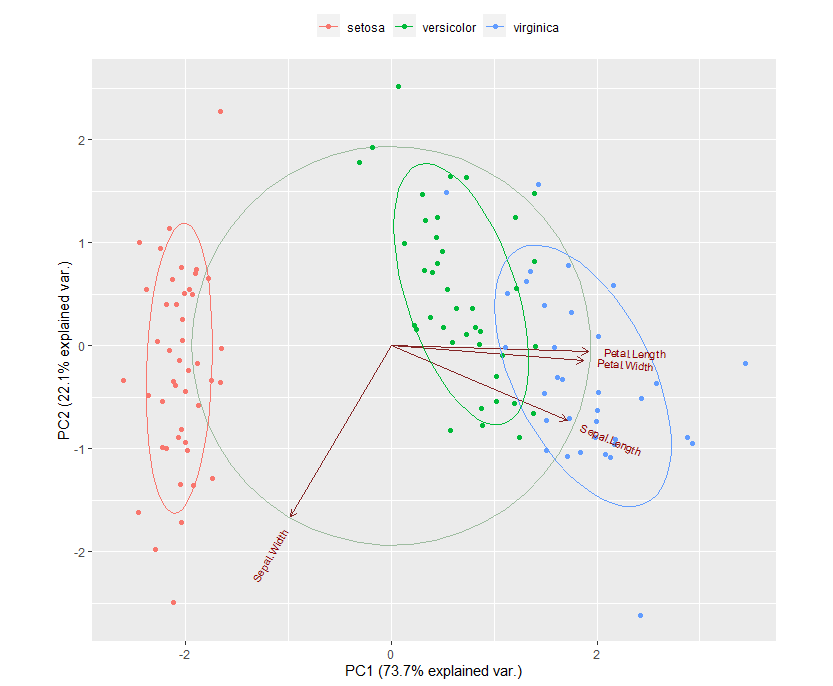

Intraclass Correlation Coefficient in R

One of the easiest ways to calculate the Intraclass Correlation Coefficient in R is the icc() function from the irr package.

Syntax:-

icc(ratings, model, type, unit)


Where:

ratings: data frame or matrix of ratings (subjects in rows, raters in columns)

model: "oneway" or "twoway"

type: "consistency" or "agreement"

unit: "single" or "average"

Load Data

Let's create sample data for the ICC calculation, with 10 subjects in rows and 4 raters (R1 to R4) in columns:

data <- data.frame(R1=c(1, 1, 3, 6, 5, 7, 8, 9, 8, 9), R2=c(2, 3, 7, 4, 5, 5, 7, 9, 8, 8), R3=c(0, 4, 1, 4, 5, 6, 6, 9, 8, 8), R4=c(1, 2, 3, 3, 6, 4, 6, 8, 8, 9))

Approach 1:-

library(psych)

ICC(data)

Call: ICC(x = data)


Intraclass correlation coefficients
                         type  ICC  F df1 df2       p lower bound upper bound
Single_raters_absolute   ICC1 0.83 20   9  30 2.0e-10        0.67        0.93
Single_random_raters     ICC2 0.83 21   9  27 6.5e-10        0.67        0.93
Single_fixed_raters      ICC3 0.83 21   9  27 6.5e-10        0.67        0.94
Average_raters_absolute ICC1k 0.95 20   9  30 2.0e-10        0.89        0.98
Average_random_raters   ICC2k 0.95 21   9  27 6.5e-10        0.89        0.98
Average_fixed_raters    ICC3k 0.95 21   9  27 6.5e-10        0.89        0.98

Number of subjects = 10     Number of Judges = 4
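The ICC1 value reported by psych can be cross-checked by hand. The following base R sketch fits a one-way ANOVA with subject as the only factor and applies the standard one-way, single-rating formula to the mean squares. The variable names (`long`, `msb`, `msw`, `icc1`) are illustrative, and the data frame is repeated so the block runs on its own.

```r
# Same ratings as in the Load Data section
data <- data.frame(R1 = c(1, 1, 3, 6, 5, 7, 8, 9, 8, 9),
                   R2 = c(2, 3, 7, 4, 5, 5, 7, 9, 8, 8),
                   R3 = c(0, 4, 1, 4, 5, 6, 6, 9, 8, 8),
                   R4 = c(1, 2, 3, 3, 6, 4, 6, 8, 8, 9))

# Long format: one row per rating, labelled by subject
long <- data.frame(
  subject = factor(rep(seq_len(nrow(data)), times = ncol(data))),
  score   = unlist(data, use.names = FALSE)
)

# One-way ANOVA with subject as the grouping factor
fit <- anova(aov(score ~ subject, data = long))
msb <- fit[["Mean Sq"]][1]  # between-subjects mean square
msw <- fit[["Mean Sq"]][2]  # within-subjects (residual) mean square

k <- ncol(data)  # number of raters
icc1 <- (msb - msw) / (msb + (k - 1) * msw)
round(icc1, 2)
```

Rounded to two decimals, this should agree with the ICC1 row of the psych output.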

Approach 2:-

library("irr")

icc(data, model = "twoway", type = "agreement", unit = "single")

Single Score Intraclass Correlation

Model: twoway
Type : agreement

Subjects = 10
Raters = 4
ICC(A,1) = 0.828

F-Test, H0: r0 = 0 ; H1: r0 > 0
F(9,29.9) = 20.7 , p = 1.56e-10

95%-Confidence Interval for ICC Population Values:
0.632 < ICC < 0.947

The intraclass correlation coefficient (ICC) is 0.828.

Conclusion

Good absolute agreement between the raters was observed (ICC = 0.828) using the two-way random-effects model with a single-rater unit, with a p-value of 1.56e-10.
