Healthcare Fraud Detection class imbalance visualization

Detecting healthcare fraud poses unique challenges, particularly when it comes to integrating and analyzing the data.

When dealing with claims data, it’s essential to connect individual transactions with provider-level evaluations.


In this article, we will guide you through the process of aggregating claims data to derive valuable provider features, utilizing Yellowbrick’s Parallel Coordinates for visualizing patterns, and exploring other effective visualization tools for feature analysis.

We will illustrate how a combination of robust data integration and visual analytics can uncover patterns that differentiate fraudulent from legitimate healthcare providers.

Introduction: Accessing Healthcare Provider Fraud Detection Data

Before we delve into the data integration process, we recommend reading our article on How to Visualize Class Imbalance with Yellowbrick.

That article introduces our Healthcare Provider Fraud Detection dataset and includes an in-depth look at visualizing its class imbalance.

Understanding the Data Integration Challenge

Our dataset consists of three related tables:

- Training Dataset (5,410 rows): Contains provider IDs and fraud labels.

- Training Inpatient Dataset (40,474 rows): Contains hospital admission claims.

- Training Outpatient Dataset (517,737 rows): Contains outpatient visit claims.

The fraud label exists only at the provider level, while most of the information that might reveal fraud is spread across individual claims in two separate tables.

This discrepancy between the unit of analysis (providers) and the granularity of the data (individual claims) necessitates careful aggregation.
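A quick way to confirm this grain mismatch before aggregating is to check whether the provider key is unique in each table. The sketch below runs on small hypothetical frames; the helper name describe_grain is illustrative and not part of pandas or Yellowbrick.

```python
import pandas as pd


def describe_grain(labels: pd.DataFrame, claims: pd.DataFrame, key: str = "Provider") -> dict:
    """Report whether `key` is unique in each table and how many claims each provider has."""
    claims_per_provider = claims.groupby(key).size()
    return {
        "labels_one_row_per_provider": bool(labels[key].is_unique),
        "claims_one_row_per_provider": bool(claims[key].is_unique),
        "mean_claims_per_provider": float(claims_per_provider.mean()),
        "max_claims_per_provider": int(claims_per_provider.max()),
    }


# Hypothetical toy tables shaped like the label file and a claims file
labels = pd.DataFrame({"Provider": ["PRV1", "PRV2"], "PotentialFraud": ["No", "Yes"]})
claims = pd.DataFrame({
    "Provider": ["PRV1", "PRV1", "PRV2", "PRV2", "PRV2"],
    "InscClaimAmtReimbursed": [100, 200, 5000, 7000, 3000],
})

grain = describe_grain(labels, claims)
print(grain)
```

On the real files you would expect describe_grain(train, inpat) and describe_grain(train, outpat) to report a unique key only for the label table, which is exactly why the claims must be aggregated first.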

Creating Provider-Level Features

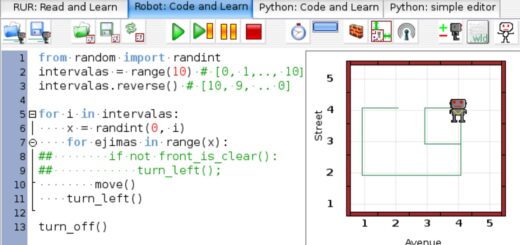

Let’s start by aggregating claim amounts at the provider level using Python:

```python
import pandas as pd

# Load datasets
train = pd.read_csv('Train-1542865627584.csv')  # Provider fraud labels
inpat = pd.read_csv('Train_Inpatientdata-1542865627584.csv')  # Hospital admissions
outpat = pd.read_csv('Train_Outpatientdata-1542865627584.csv')  # Outpatient visits

# Calculate provider-level inpatient claims totals
ip_provider_amounts = inpat.groupby('Provider')['InscClaimAmtReimbursed'].sum().reset_index()
ip_provider_amounts = ip_provider_amounts.rename(columns={'InscClaimAmtReimbursed': 'IP_Claims_Total'})

# Calculate provider-level outpatient claims totals
op_provider_amounts = outpat.groupby('Provider')['InscClaimAmtReimbursed'].sum().reset_index()
op_provider_amounts = op_provider_amounts.rename(columns={'InscClaimAmtReimbursed': 'OP_Claims_Total'})
```

In this initial step, we aggregate the total claims for each provider, distinguishing between inpatient and outpatient claims.

Note that not every provider has both types of claims: some handle only outpatient cases, while others handle only inpatient admissions.
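Claim totals are only one possible provider feature. As a sketch (the provider_features helper and the _Count and _Mean column names are hypothetical, not part of the original analysis), the same groupby can produce several summary statistics per provider in a single pass:

```python
import pandas as pd


def provider_features(claims: pd.DataFrame, prefix: str) -> pd.DataFrame:
    """Collapse claim rows to one row per provider: total, count and mean reimbursed amount."""
    features = (
        claims.groupby("Provider")["InscClaimAmtReimbursed"]
        .agg(["sum", "count", "mean"])  # count = number of non-null amounts
        .rename(columns={
            "sum": f"{prefix}_Claims_Total",
            "count": f"{prefix}_Claims_Count",
            "mean": f"{prefix}_Claims_Mean",
        })
        .reset_index()
    )
    return features


# Hypothetical toy claims: PRV1 appears in both tables, PRV2 only inpatient, PRV3 only outpatient
inpat_toy = pd.DataFrame({"Provider": ["PRV1", "PRV1", "PRV2"],
                          "InscClaimAmtReimbursed": [1000, 3000, 500]})
outpat_toy = pd.DataFrame({"Provider": ["PRV1", "PRV3", "PRV3", "PRV3"],
                           "InscClaimAmtReimbursed": [50, 20, 40, 60]})

ip_features = provider_features(inpat_toy, "IP")
op_features = provider_features(outpat_toy, "OP")
print(ip_features)
print(op_features)
```

On the real data you would call provider_features(inpat, 'IP') and provider_features(outpat, 'OP') and merge the two results the same way the totals are merged below.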

Next, we merge the inpatient and outpatient totals:

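```python
# Merge IP and OP provider totals; an outer join keeps providers
# that appear in only one of the two claim tables
provider_claims = pd.merge(
    ip_provider_amounts,
    op_provider_amounts,
    on='Provider',
    how='outer'
).fillna(0)  # Fill NaN values with 0 for providers with only one type of claim

# Merge with fraud labels
final_df = pd.merge(
    provider_claims,
    train[['Provider', 'PotentialFraud']],
    on='Provider',
    how='outer'
)

# Providers that are labeled but have no claims in either table would
# otherwise carry NaN totals after the outer merge
claim_cols = ['IP_Claims_Total', 'OP_Claims_Total']
final_df[claim_cols] = final_df[claim_cols].fillna(0)

print(final_df.head())
```

Examining the Aggregated Dataset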

The integrated dataset provides valuable insights into each provider’s total claims:

```
   Provider  IP_Claims_Total  OP_Claims_Total PotentialFraud
0  PRV51001          97000.0           7640.0             No
1  PRV51003         573000.0          32670.0            Yes
2  PRV51007          19000.0          14710.0             No
3  PRV51008          25000.0          10630.0             No
4  PRV51011           5000.0          11630.0             No
```

From this integrated dataset, we can observe the financial scope of claims for each provider in both inpatient and outpatient categories.

Notably, PRV51003, the only provider in this excerpt flagged for potential fraud, has the highest inpatient and outpatient totals of the five providers shown.
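To check whether this pattern holds beyond a single provider, you can compare summary statistics by label across the full final_df. The sketch below re-enters the five rows printed above only so that it is self-contained; five rows are far too few to draw conclusions, and on the real data you would replace sample with final_df. Medians are used because claim totals tend to be heavily skewed.

```python
import pandas as pd

# The five rows printed above, re-entered by hand for illustration only
sample = pd.DataFrame({
    "Provider": ["PRV51001", "PRV51003", "PRV51007", "PRV51008", "PRV51011"],
    "IP_Claims_Total": [97000.0, 573000.0, 19000.0, 25000.0, 5000.0],
    "OP_Claims_Total": [7640.0, 32670.0, 14710.0, 10630.0, 11630.0],
    "PotentialFraud": ["No", "Yes", "No", "No", "No"],
})

# Median claim totals for each fraud label
medians = sample.groupby("PotentialFraud")[["IP_Claims_Total", "OP_Claims_Total"]].median()
print(medians)

# Number of providers per label (the class balance)
class_counts = sample["PotentialFraud"].value_counts()
print(class_counts)
```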

The outer merge of the inpatient and outpatient totals fulfills two critical functions:

- It retains all providers, including those with claims in only one category.

- Together with fillna(0), it records a zero total for the missing category instead of a missing value, making the data suitable for analysis.
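The effect of the join type is easiest to see on a toy example. This sketch uses hypothetical totals for three providers and contrasts an inner join with an outer join; indicator=True is a standard pd.merge option that adds a _merge column recording whether each row came from the left table, the right table, or both.

```python
import pandas as pd

# Hypothetical per-provider totals: PRV1 has both claim types,
# PRV2 only inpatient, PRV3 only outpatient
ip = pd.DataFrame({"Provider": ["PRV1", "PRV2"], "IP_Claims_Total": [4000.0, 500.0]})
op = pd.DataFrame({"Provider": ["PRV1", "PRV3"], "OP_Claims_Total": [50.0, 120.0]})

# An inner join silently drops providers that appear in only one table
inner = pd.merge(ip, op, on="Provider", how="inner")

# An outer join keeps every provider; indicator=True records each row's source
outer = pd.merge(ip, op, on="Provider", how="outer", indicator=True)
source = dict(zip(outer["Provider"], outer["_merge"].astype(str)))

# Drop the indicator, then turn "no claims of this type" into a zero total
outer = outer.drop(columns="_merge").fillna(0)

print(len(inner), len(outer))
print(source)
print(outer)
```

The inner join keeps only PRV1, while the outer join keeps all three providers and fills the missing category with 0.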

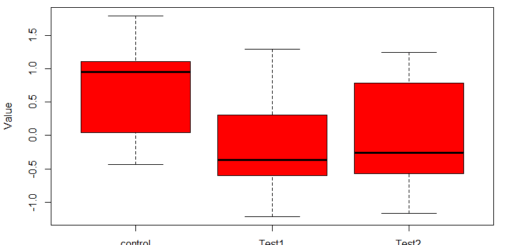

Identifying Feature Patterns Using Parallel Coordinates

The Parallel Coordinates plot is a potent visualization tool for uncovering relationships between features and the target variable in multivariate datasets.

Each vertical axis represents a feature, and each data point is drawn as a line connecting its values across those axes, so groups of similar data points appear as bundles of similar lines.
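The polyline idea can be reproduced without Yellowbrick using pandas' built-in pandas.plotting.parallel_coordinates, which we swap in here only to illustrate the mechanics; it is not the visualizer used in the rest of this article. The feature values below are hypothetical, already-standardized numbers for four providers.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; no display needed
import matplotlib.pyplot as plt
import pandas as pd
from pandas.plotting import parallel_coordinates

# Hypothetical, already-scaled feature values for four providers
df = pd.DataFrame({
    "IP_Claims_Total": [-0.5, -0.3, 2.1, 1.4],
    "OP_Claims_Total": [-0.4, -0.6, 1.8, -0.2],
    "PotentialFraud": ["No", "No", "Yes", "Yes"],
})

fig, ax = plt.subplots()
# Each row becomes one polyline across the two feature axes, colored by its class
ax = parallel_coordinates(df, "PotentialFraud", ax=ax, color=["tab:blue", "tab:green"])
print(len(ax.get_lines()))
plt.close(fig)
```

Each of the four rows is drawn as a separate line; pandas also draws a vertical line for each feature axis by default, so the Axes holds a few more line objects than there are rows.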

We will employ this visualization to examine how inpatient and outpatient claims totals differ between fraudulent and legitimate providers.

Here’s how to create this visualization using Yellowbrick:

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler
from yellowbrick.features import ParallelCoordinates

# Prepare features and target variable
X = final_df[['IP_Claims_Total', 'OP_Claims_Total']]
y = final_df['PotentialFraud']

# Scale the features to zero mean and unit variance
scaler = StandardScaler()
X_scaled = scaler.fit_transform(X)
# Keep the original index so the scaled rows stay aligned with y
X_scaled = pd.DataFrame(X_scaled, columns=X.columns, index=X.index)

# Create the Parallel Coordinates visualization
visualizer_parallel = ParallelCoordinates()
visualizer_parallel.fit_transform(X_scaled, y)
visualizer_parallel.show()
```

Analyzing the Visualization

The resulting visualization yields several critical observations:

- Pattern Separation: The green lines (fraudulent providers) exhibit more extreme patterns, particularly in higher ranges of both inpatient and outpatient claims, indicating a trend among fraudulent providers toward unusual claim amounts.

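- Line Slopes: Many green lines show steep slopes between the IP and OP claims totals. Because both features are standardized, a steep slope means a provider's inpatient total sits much further from the average than its outpatient total (or vice versa), suggesting that fraudulent providers' claiming is often unbalanced across claim types in ways that maximize reimbursement.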

- Density Distribution: Blue lines (non-fraudulent providers) are concentrated in lower ranges for both features, revealing more consistent and moderate claiming patterns, which establish a baseline of normal behavior for further analysis.

- Feature Scaling: Standardization of features prior to visualization (using StandardScaler) enables clearer pattern identification by placing both claim types on the same scale.
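StandardScaler applies the z-score z = (x - mean) / std to each feature, where std is the population standard deviation. A pandas-only sketch of the same computation (the standardize helper is hypothetical; the claim totals are the sample rows shown earlier) makes it clear why the two features become comparable:

```python
import numpy as np
import pandas as pd


def standardize(X: pd.DataFrame) -> pd.DataFrame:
    """Z-score each column: (x - mean) / std, using the population std (ddof=0).

    Unlike StandardScaler, this sketch does not guard against zero-variance columns.
    """
    return (X - X.mean()) / X.std(ddof=0)


# Claim totals on very different scales (taken from the sample rows above)
X = pd.DataFrame({
    "IP_Claims_Total": [97000.0, 573000.0, 19000.0, 25000.0, 5000.0],
    "OP_Claims_Total": [7640.0, 32670.0, 14710.0, 10630.0, 11630.0],
})
Z = standardize(X)
print(Z.round(3))
print(Z.mean().round(10).tolist(), Z.std(ddof=0).round(10).tolist())
```

After the transform both columns have mean 0 and standard deviation 1, so a value of 2 on either axis means "two standard deviations above the average provider" regardless of the original dollar scale.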

This visualization reinforces our earlier data exploration, highlighting that fraudulent behavior typically manifests as unusual claiming patterns across service types.

The Parallel Coordinates plot further elucidates how these patterns interrelate across features.

Leveraging Yellowbrick’s Feature Analysis Tools

While our focus has been on Parallel Coordinates, Yellowbrick provides a broader suite of feature analysis visualizers, each serving a distinct analytical purpose and following the familiar scikit-learn fit/transform API:

- Rank Features (Rank1D and Rank2D): Score single features (Rank1D, for example with the Shapiro-Wilk test) or pairs of features (Rank2D, for example with Pearson correlation or covariance).

- PCA Projection: Reduces high-dimensional data to 2D space, facilitating the understanding of complex datasets.

- Manifold Visualization: Embeds the data in two dimensions using nonlinear manifold learning methods such as t-SNE or Isomap, which aim to preserve local neighborhood structure.

- RadViz Visualizer: Arranges features as anchor points around a circle and places each instance according to its feature values, revealing class separation patterns.

- Direct Data Visualization (Jointplots): Combines scatter plots and distribution information to illustrate feature-target relationships.
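RadViz's layout rule is simple enough to sketch directly. Assuming the common formulation (each feature min-max scaled to [0, 1], one anchor per feature spaced evenly on a unit circle, each instance placed at the weighted average of the anchors), a NumPy version might look like this; radviz_project is a hypothetical helper, not Yellowbrick's internal code.

```python
import numpy as np


def radviz_project(X: np.ndarray) -> np.ndarray:
    """Map each row of X (n_samples, n_features) to a 2D point, RadViz-style."""
    X = np.asarray(X, dtype=float)
    n_features = X.shape[1]

    # Min-max normalize each feature to [0, 1]; constant columns map to 0
    mins, maxs = X.min(axis=0), X.max(axis=0)
    span = np.where(maxs > mins, maxs - mins, 1.0)
    W = (X - mins) / span

    # One anchor per feature, evenly spaced on the unit circle
    angles = 2 * np.pi * np.arange(n_features) / n_features
    anchors = np.column_stack([np.cos(angles), np.sin(angles)])

    # Each point is the weighted average of the anchors ("spring" pulls)
    totals = W.sum(axis=1, keepdims=True)
    totals[totals == 0] = 1.0  # rows at every feature's minimum stay at the origin
    return (W @ anchors) / totals


# Hypothetical three-feature data
X_toy = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 1]], dtype=float)
points = radviz_project(X_toy)
print(points.round(3))
```

A row dominated by one feature lands on that feature's anchor, while a row with equal weight on every feature lands near the center, which is why RadViz separates classes well only when they differ in which features dominate.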

Choosing the appropriate visualizer hinges on your analytical goals.

For instance, prioritize Rank Features for feature selection, utilize PCA or Manifold for dimensionality reduction, select RadViz or Parallel Coordinates to investigate class separations, and use Jointplots to explore continuous variable relationships.
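For the feature-selection case, Rank2D's Pearson option scores every pair of features by correlation. The same numbers can be computed directly with pandas DataFrame.corr; this sketch (the rank_pairs helper and the toy columns are hypothetical) lists pairs from most to least correlated in absolute terms, which is a quick way to spot redundant features.

```python
import pandas as pd


def rank_pairs(X: pd.DataFrame, method: str = "pearson") -> pd.Series:
    """Score every feature pair by correlation and sort by absolute strength."""
    corr = X.corr(method=method)
    cols = list(corr.columns)
    scores = {
        (a, b): corr.loc[a, b]
        for i, a in enumerate(cols)
        for b in cols[i + 1:]  # upper triangle only: each pair once, no self-pairs
    }
    return pd.Series(scores).sort_values(key=lambda s: s.abs(), ascending=False)


# Hypothetical provider features; IP_Claims_Count is not from the original analysis
X = pd.DataFrame({
    "IP_Claims_Total": [1.0, 2.0, 3.0, 4.0],
    "IP_Claims_Count": [2.0, 4.0, 6.0, 8.0],
    "OP_Claims_Total": [1.0, -1.0, 1.0, -1.0],
})
ranking = rank_pairs(X)
print(ranking)
```

Here the two inpatient columns are perfectly correlated, so one of them would be a candidate to drop before modeling.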

Conclusion: Transforming Data into Insights

The integration of thoughtful data aggregation and visual analytics significantly enhances healthcare fraud detection efforts.

By consolidating claims data to the provider level and harnessing Yellowbrick’s visualization capabilities, we can uncover patterns indicative of fraudulent behavior.

While we highlighted Parallel Coordinates in this analysis, Yellowbrick’s diverse suite of tools provides multiple perspectives for understanding feature relationships.

The key to effective analysis lies in selecting the right visualization techniques tailored to specific analytical requirements, ultimately transforming complex data into actionable insights.