CatBoost in R for Efficient Machine Learning

CatBoost in R, is an advanced gradient boosting library that excels in handling categorical data natively, which sets it apart from other machine learning frameworks.

Its ability to reduce preprocessing times and prevent overfitting with innovative techniques makes it a standout choice for data professionals.

CatBoost in R

In this article, we will walk through the process of implementing CatBoost in R, covering everything from installation to model evaluation.

Key Features of CatBoost

CatBoost offers several notable features that make it an exceptional library for machine learning tasks:

1. Efficient and Fast Training

CatBoost employs optimized algorithms to significantly speed up the training process. With support for parallel computation, it efficiently processes large datasets, making it ideal for industrial-scale machine learning applications.

2. Enhanced Accuracy with Ordered Boosting

The library utilizes a unique method called “ordered boosting,” which improves accuracy by avoiding the use of future data during gradient calculations. This technique helps prevent overfitting, leading to reliable and precise predictions.

3. Robust Against Overfitting

CatBoost integrates multiple strategies to mitigate overfitting, including L2 regularization and feature penalties. By leveraging ordered statistics, it further enhances model performance on unseen data, even when working with smaller datasets.

4. Automatic Feature Selection

One of CatBoost’s standout features is its ability to automatically identify and evaluate important features during training. This capability significantly reduces the need for manual feature engineering, allowing models to focus only on the most relevant variables.

Installing and Loading CatBoost

Before you start using CatBoost, you need to install the CatBoost package in R. You can easily install it directly from CRAN using the following command:

# Install the CatBoost package

install.packages("catboost")

# Load the library

library(catboost)Preparing the Data

CatBoost relies on a specialized data structure called a Pool to manage data, labels, and categorical features efficiently. Use the catboost.load_pool() function to prepare your data for model training. Here’s an example using the Iris dataset:

# Load the Iris dataset

data(iris)

# Convert Species to numeric for simplicity

iris$Species <- as.integer(iris$Species) - 1

# Split into training and testing sets

set.seed(123)

train_indices <- sample(1:nrow(iris), 0.8 * nrow(iris))

train_data <- iris[train_indices, ]

test_data <- iris[-train_indices, ]

# Define categorical features (if any)

cat_features <- which(sapply(train_data, is.factor))

# Create CatBoost Pool objects

train_pool <- catboost.load_pool(data = train_data[, -5], label = train_data$Species, cat_features = cat_features)

test_pool <- catboost.load_pool(data = test_data[, -5], label = test_data$Species)Training the CatBoost Model

To train a CatBoost model, you must define the hyperparameters that will govern the training process. Then, use the catboost.train() function to initiate training.

# Define model parameters

params <- list(

loss_function = "MultiClass",

iterations = 1000,

depth = 6,

learning_rate = 0.1,

verbose = 100

)

# Train the CatBoost model

cat_model <- catboost.train(train_pool, params = params)Key Hyperparameters Explained:

- loss_function: Specifies the optimization objective for the model.

- iterations: Defines the number of boosting rounds to perform.

- depth: Sets the maximum depth of the trees created during training.

- learning_rate: Controls the step size for each boosting iteration.

- verbose: Determines the frequency of training output updates.

Making Predictions

After training the model, you can use the catboost.predict() function to generate predictions. For classification tasks, predictions can be returned as probabilities or class labels.

# Predict class probabilities

pred_probs <- catboost.predict(cat_model, test_pool, prediction_type = "Probability")

# Predict class labels

pred_labels <- catboost.predict(cat_model, test_pool, prediction_type = "Class")Evaluating the Model

Assessing model performance is vital. You can use various metrics, including accuracy, precision, recall, or F1-score, to evaluate your model.

# Calculate accuracy

actual <- test_data$Species

predicted <- pred_labels

accuracy <- sum(actual == predicted) / length(actual)

print(paste("Accuracy:", accuracy))Understanding Feature Importance

CatBoost provides an easy way to analyze feature importance, helping you understand which variables contribute the most to your model’s predictions. Use the catboost.get_feature_importance() function to retrieve importance values.

# Get feature importance

feature_importance <- catboost.get_feature_importance(cat_model, pool = train_pool)

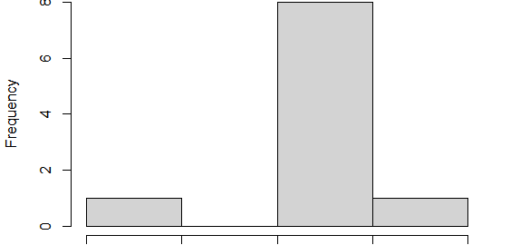

print(feature_importance)Hyperparameter Tuning

To enhance your model’s performance, you can adjust hyperparameters like learning rate, depth, and iterations. Consider performing a grid search or random search with cross-validation using the catboost.cv() function.

# Perform cross-validation

cv_params <- list(

loss_function = "MultiClass",

iterations = 100,

depth = 6,

learning_rate = 0.1

)

# Execute cross-validation

cv_result <- catboost.cv(train_pool, params = cv_params, fold_count = 5, plot = TRUE)Conclusion

CatBoost is a robust gradient boosting algorithm, particularly well-suited for managing categorical data with minimal preprocessing.

This article has guided you through the entire process of using CatBoost in R—from installation to model evaluation.

By harnessing the capabilities of CatBoost, you can achieve exceptional results in both classification and regression tasks.

Experimenting with different feature engineering techniques and tuning hyperparameters will further improve your model’s performance, allowing you to unlock its full potential in your machine learning projects.

- Logistic Regression in SPSS: A Comprehensive Guide

- Top 5 Books to learn Swift

- Recode Variables in SPSS a Comprehensive Guide

- Is it a good idea to do a master’s degree in statistics