Maximizing Model Accuracy with Train-Test Splits in Machine Learning

Maximizing Model Accuracy with Train-Test Splits, Machine learning models have revolutionized the way businesses and researchers solve complex problems, offering immense value through accurate predictions.

However, the true worth of a machine learning model lies in its ability to make precise predictions that align with real-world outcomes.

This accuracy can only be achieved through rigorous evaluation and testing, which is where the concept of train-test splits comes into play.

Maximizing Model Accuracy with Train-Test Splits

In supervised machine learning, models are trained using labeled datasets that guide the learning process.

During training, the model identifies patterns within the data and develops a generalized understanding that can be applied to new, unseen data.

But how do we ensure that the model’s predictions will be accurate when deployed in real-world scenarios? This is where train-test splits become crucial.

What Are Train-Test Splits?

A train-test split involves dividing the dataset into two distinct subsets: training data and testing data.

The training data is used to build the model, while the testing data is reserved for evaluating the model’s performance.

This separation helps in assessing the model’s ability to generalize and prevents it from merely memorizing patterns from the training set.

By leveraging train-test splits, we can obtain a realistic measure of how the model will perform on new, unseen data and identify potential issues before deployment.

Why Train-Test Splits Are Essential

Employing train-test splits is essential for several reasons:

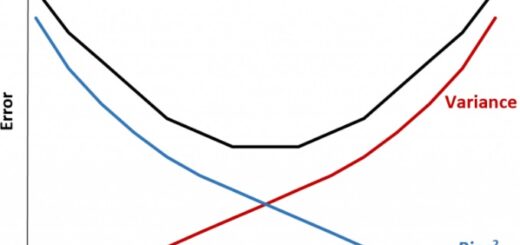

- Generalization: It ensures that the model generalizes well to new data, rather than just memorizing the training data.

- Overfitting Prevention: It helps prevent overfitting, where the model performs exceptionally well on the training data but poorly on the test data.

- Realistic Evaluation: It provides a realistic assessment of the model’s performance, revealing how it might behave in real-world applications.

- Problem Identification: It helps identify potential issues that could arise when the model is deployed, allowing for necessary adjustments.

How to Perform Train-Test Splits

In practice, train-test splits involve dividing the dataset into different ratios, commonly 80% training data and 20% test data, or 70% training data and 30% test data.

The larger portion is allocated to the training set to ensure that the model has sufficient data to learn from, while the test set provides enough data for unbiased evaluation.

Methods for Splitting

There are two common methods for performing train-test splits:

- Random Split: This method divides the data without considering the distribution of the target labels. While it is straightforward, it can lead to imbalanced subsets if the overall dataset is imbalanced.

- Stratified Split: This method ensures that the distribution of the target labels is maintained in both the training and test sets, resulting in more balanced subsets.

For time-series data, a sequential split is often used, where the training set contains earlier data points, and the test set includes later ones.

This approach respects the temporal order of the data and is more suitable for time-series analysis.

Implementing Train-Test Splits in Python

To perform a train-test split, you can use Scikit-learn’s train_test_split() function from the model_selection module. Here’s a quick example:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)Avoiding Common Pitfalls

When performing train-test splits, it is essential to avoid pitfalls such as:

- Data Leakage: Ensure that the training set does not contain information about the test set, as this can lead to over-optimistic performance estimates.

- Reproducibility: Use a fixed random seed during splitting to ensure reproducibility of results.

- Imbalanced Data: For imbalanced datasets, consider using stratified splitting to maintain the distribution of target labels in both subsets.

By being mindful of these pitfalls, you can ensure a well-designed model-training process that includes robust dataset preparation.

Conclusion

Train-test splits are a fundamental technique in machine learning, providing a reliable way to evaluate and improve model performance.

By dividing the dataset into training and testing subsets, we can assess the model’s ability to generalize, prevent overfitting, and identify potential issues before deployment.

Properly implemented train-test splits are essential for building accurate and reliable machine learning models that deliver real-world value.

With this understanding, you are now equipped to perform train-test splits effectively and enhance the accuracy of your machine learning models.

Happy coding! 📊