How to Test for Multicollinearity in R

Multicollinearity is a common problem in statistical analyses. It occurs when two or more predictor variables in a regression model are highly correlated with each other.

This makes the estimated regression coefficients unstable and inflates their standard errors, which makes it difficult to interpret the contribution of each variable to the model.

As such, it is important to check for multicollinearity before interpreting a regression model. In this article, we will discuss how to test for multicollinearity in R using the built-in ‘mtcars’ dataset.

One commonly used method for detecting multicollinearity is the Variance Inflation Factor (VIF).

The VIF measures how much the variance of an estimated regression coefficient increases due to multicollinearity in the model.
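Concretely, for predictor j, VIF_j = 1 / (1 - R_j^2), where R_j^2 is the R-squared from regressing predictor j on all the other predictors. The minimal sketch below assumes the same full ‘mtcars’ model used later in this article. It computes the VIF for ‘disp’ by hand from that auxiliary regression and compares the result with ‘vif’ from the ‘car’ package. The variable names (aux, r2_disp, and so on) are illustrative only.

```r
# Sketch: compute the VIF of one predictor ("disp") by hand
# via the auxiliary regression VIF_j = 1 / (1 - R_j^2).
library(car)

data(mtcars)

# Full model: mpg regressed on all other variables
fit <- lm(mpg ~ ., data = mtcars)

# Auxiliary regression: disp on the other predictors (response mpg excluded)
aux <- lm(disp ~ . - mpg, data = mtcars)
r2_disp <- summary(aux)$r.squared

# VIF for disp from the auxiliary R-squared
vif_disp_manual <- 1 / (1 - r2_disp)

# The same quantity as reported by car::vif()
vif_disp_car <- vif(fit)[["disp"]]

print(c(manual = vif_disp_manual, car = vif_disp_car))
```

The two numbers should agree up to floating-point error. A high VIF therefore means that the other predictors already explain most of the variation in that predictor.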

A common rule of thumb is that a VIF above 5 (or, by a more lenient standard, above 10) indicates that the predictor variable may have a multicollinearity problem.

To calculate the VIF in R, we can use the ‘vif’ function in the ‘car’ package.

The following code loads the ‘mtcars’ dataset that is built into R, fits a multiple regression of ‘mpg’ on all other variables, and then calculates the VIF for each predictor variable:


```r
# Load the 'car' package (install it first with install.packages("car") if needed)
library(car)

# Load the built-in 'mtcars' dataset
data(mtcars)

# Fit a multiple regression of mpg on all other variables
fit <- lm(mpg ~ ., data = mtcars)

# Calculate the VIF for each predictor variable
vif(fit)
```

The output gives the VIF for each predictor variable in the model:

```
      cyl      disp        hp      drat        wt      qsec        vs        am      gear      carb
15.373833 21.620241  9.832037  3.374620 15.164887  7.527958  4.965873  4.648487  5.357452  7.908747
```

From the output, we can see that ‘cyl’, ‘disp’, ‘hp’, ‘wt’, ‘qsec’, ‘gear’, and ‘carb’ have VIF values greater than 5, and that ‘cyl’, ‘disp’, and ‘wt’ even exceed 10. This indicates that they may have a multicollinearity problem. Only ‘drat’, ‘vs’, and ‘am’ stay below 5.
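Instead of reading the cutoffs off the printed output, you can filter the VIF values programmatically. The minimal sketch below uses the rule-of-thumb cutoffs of 5 and 10 mentioned above. Treat them as conventions, not hard limits. The object names are illustrative.

```r
# Sketch: sort the VIFs and list the predictors above common cutoffs.
library(car)

data(mtcars)
fit <- lm(mpg ~ ., data = mtcars)
vif_values <- vif(fit)

# Predictors ordered from most to least inflated
vif_sorted <- sort(vif_values, decreasing = TRUE)
print(round(vif_sorted, 2))

# Names of predictors exceeding each rule-of-thumb cutoff
above_5  <- names(vif_values)[vif_values > 5]
above_10 <- names(vif_values)[vif_values > 10]
print(above_5)
print(above_10)
```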

Another commonly used method to test for multicollinearity is the correlation matrix.

We can use the ‘cor’ function in R to obtain the correlation matrix for the predictor variables in the model.

The following code calculates the correlation matrix for the same ‘mtcars’ dataset:

```r
# Correlation matrix for the predictors cyl, disp, hp, drat, wt, qsec, and vs
# (columns 2 to 8 of mtcars; column 1 is the response, mpg),
# rounded to two decimals for readability
round(cor(mtcars[, 2:8]), 2)
```

The output for the above code is:

```
       cyl  disp    hp  drat    wt  qsec    vs
cyl   1.00  0.90  0.83 -0.70  0.78 -0.59 -0.81
disp  0.90  1.00  0.79 -0.71  0.89 -0.43 -0.71
hp    0.83  0.79  1.00 -0.45  0.66 -0.71 -0.72
drat -0.70 -0.71 -0.45  1.00 -0.71  0.09  0.44
wt    0.78  0.89  0.66 -0.71  1.00 -0.17 -0.55
qsec -0.59 -0.43 -0.71  0.09 -0.17  1.00  0.74
vs   -0.81 -0.71 -0.72  0.44 -0.55  0.74  1.00
```

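From the output, we can see that ‘cyl’, ‘disp’, and ‘hp’ are strongly correlated with each other (r between 0.79 and 0.90), and that ‘disp’ is also strongly correlated with ‘wt’ (r = 0.89). These high pairwise correlations are consistent with the large VIF values found above and indicate that these variables may have a multicollinearity problem.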
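With more predictors, scanning the full matrix by eye gets tedious. The minimal sketch below lists each pair of predictors whose absolute correlation exceeds a cutoff. The value 0.8 is an arbitrary illustrative choice, not a standard, and the names high_pairs and cor_mat are hypothetical.

```r
# Sketch: list predictor pairs with |r| above an illustrative cutoff of 0.8.
data(mtcars)

predictors <- mtcars[, c("cyl", "disp", "hp", "drat", "wt", "qsec", "vs")]
cor_mat <- cor(predictors)

# Look only at the upper triangle so each pair appears once
idx <- which(abs(cor_mat) > 0.8 & upper.tri(cor_mat), arr.ind = TRUE)

high_pairs <- data.frame(
  var1 = rownames(cor_mat)[idx[, "row"]],
  var2 = colnames(cor_mat)[idx[, "col"]],
  r    = round(cor_mat[idx], 3)
)

# Strongest correlations first
print(high_pairs[order(-abs(high_pairs$r)), ])
```

Pairwise correlations only reveal relationships between two variables at a time. A predictor can still have a high VIF when it is explained by a combination of several others, so this check complements the VIF rather than replacing it.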

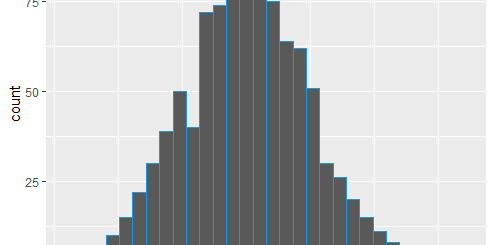

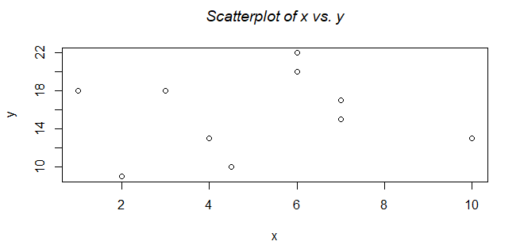

To further investigate the problem, we can create a scatterplot matrix using the ‘pairs’ function in R. The following code creates a scatterplot matrix for the ‘cyl’, ‘disp’, and ‘hp’ variables:

```r
# Create a scatterplot matrix for the predictor variables
pairs(mtcars[, c("cyl", "disp", "hp")])
```

The output for the above code is a matrix of scatterplots that show the relationships between the three variables.

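From the scatterplots, we can see that both ‘disp’ and ‘hp’ increase with ‘cyl’. Because ‘cyl’ takes only the values 4, 6, and 8, its panels show vertical bands rather than a continuous cloud. We can also see that ‘disp’ and ‘hp’ have a positive, roughly linear relationship. Together with the high VIF values, this supports the conclusion that these variables carry overlapping information and are affected by multicollinearity.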

Conclusion

It is important to check for multicollinearity before interpreting the coefficients of a regression model. In R, there are several methods that can be used to test for multicollinearity, including the VIF, the correlation matrix, and the scatterplot matrix.

By detecting and addressing multicollinearity, we can obtain more stable coefficient estimates that are easier to interpret.
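One simple way to address the problem is to drop the most inflated predictor, refit the model, and check the VIF values again. The minimal sketch below does exactly one such step on the ‘mtcars’ model. It is only one possible remedy. Which variable to remove is a modeling judgment that depends on the question being asked, and the VIF alone cannot make that decision. The names worst and fit_reduced are illustrative.

```r
# Sketch: remove the predictor with the largest VIF, refit, and recheck.
library(car)

data(mtcars)
fit <- lm(mpg ~ ., data = mtcars)
vif_before <- vif(fit)

# Name of the most inflated predictor
worst <- names(which.max(vif_before))

# Refit without it, e.g. ". ~ . - disp"
fit_reduced <- update(fit, as.formula(paste(". ~ . -", worst)))
vif_after <- vif(fit_reduced)

print(worst)
print(round(vif_after, 2))
```

Repeat the check after each change. Removing one variable usually lowers the VIFs of the predictors it was correlated with, but it does not guarantee that all remaining values fall below your chosen cutoff.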
