One-sample Wilcoxon test in R

The Mann-Whitney-Wilcoxon test, also known as the Wilcoxon rank sum test or Mann-Whitney U test, compares two independent samples. The one-sample Wilcoxon test covered here instead compares a single sample to a given value.

The purpose of the one-sample test is to determine whether the location of the measurement variable differs from a given value (a value that you specify based on your beliefs or a theoretical expectation, for example).

The one-sample Wilcoxon test, also known as the one-sample Wilcoxon signed-rank test, is a non-parametric test, which means that it does not depend on data belonging to any specific parametric family of probability distributions, in contrast to the one-sample t-test.

The objective of non-parametric tests is typically the same as that of their parametric equivalents (in this case, comparing data to a given value). However, they do not require the assumption of normality, and they can handle outliers and Likert scales.

In this article, we demonstrate the one-sample Wilcoxon test’s application, implementation in R, and interpretation.

We’ll also quickly demonstrate some relevant graphics.

One-sample Wilcoxon test in R

The one-sample Wilcoxon test is used to compare our observations to a specified default value, such as one based on your beliefs or a theoretical expectation.

In other words, it is used to determine whether a group's value for the relevant variable differs significantly from a known or assumed population value.

The one-sample Wilcoxon test is preferable to a one-sample t-test when the observations do not follow a normal distribution because the test statistic is derived based on the ranks of the difference between the observed values and the default value (making it a non-parametric test).
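To make the rank-based construction concrete, here is a minimal sketch of how the statistic V reported by wilcox.test() can be computed by hand. The vector x and the value mu0 are made-up illustration values, not data from this article.

```r
# Hypothetical observations and default value (illustration only)
x <- c(12, 7, 15, 9, 14, 18)
mu0 <- 10

# Differences between the observations and the default value
d <- x - mu0
d <- d[d != 0]  # observations equal to mu0 are dropped

# Rank the absolute differences (ties would receive average ranks)
ranks <- rank(abs(d))

# V is the sum of the ranks attached to the positive differences
V_manual <- sum(ranks[d > 0])

# Compare with the statistic returned by wilcox.test()
V_builtin <- unname(wilcox.test(x, mu = mu0)$statistic)
print(c(manual = V_manual, builtin = V_builtin))
```

Because only the ranks of the differences enter the statistic, a single extreme observation cannot dominate the result the way it can with a mean-based test.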

This test's objective is to determine whether the observations differ significantly from our baseline value.

For a two-tailed test, the null and alternate hypotheses are as follows:

H0: location of the data is equal to the chosen value

H1: location of the data is different from the chosen value

In other words, a significant result (i.e., the null hypothesis being rejected) implies that the data’s actual location is different from the selected value.

It’s important to note that some writers claim that this test is a test of the median, which would mean (for a two-tailed test):

H0: the median is equal to the chosen value

H1: the median is different from the chosen value

However, this is the case only if the data are symmetric. Without further assumptions about the distribution of the data, the one-sample Wilcoxon test is not a test of the median but a test about the location of the data.

Note that although the normality assumption is not required, the independence assumption must still be verified.

This means that observations must be independent of one another (usually, random sampling is sufficient to have independence).

Data

For our illustration, suppose we want to test whether the scores at an exam differ from 10, that is:

H0: scores at the exam = 10

H1: scores at the exam ≠ 10

We have a sample of 10 students and their exam results to test this:

ID <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
score <- c(11, 1, 12, 13, 15, 15, 16, 13, 12, 15)
dat <- data.frame(ID, score)

Students' scores are assumed to be independent of one another, meaning that one student's score has no influence on another's. The independence assumption is therefore satisfied.

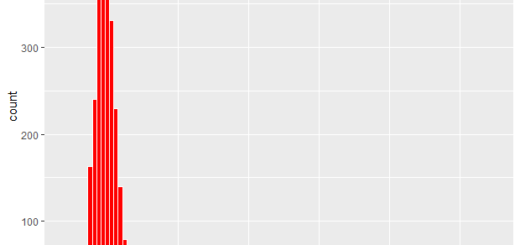

However, the sample size is small (n < 30), and the histogram shows that the data do not follow a normal distribution:

hist(dat$score)

Note that we do not run a normality test (such as the Shapiro-Wilk test) to check whether the data are normal: with small samples, normality tests have little power to reject the null hypothesis, so small samples tend to pass them.

Note also that, although we use a quantitative variable for the illustration, the one-sample Wilcoxon test is also suitable for interval data and Likert scales.

The wilcox.test() function in R can be used to perform the one-sample Wilcoxon test.

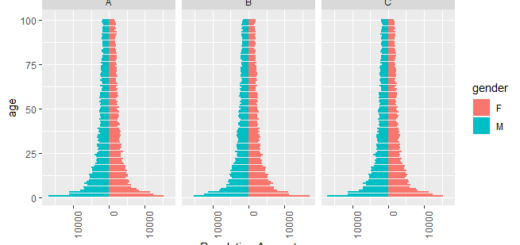

But first, it is a good idea to compute some descriptive statistics to compare our observations with our default value and present our data in a boxplot:

boxplot(dat$score, ylab = "Score")

round(summary(dat$score),digits = 2)

   Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
    1.0    12.0    13.0    12.3    15.0    16.0

The boxplot and the descriptive statistics above show that our sample's mean and median scores are 12.3 and 13, respectively.

We can test whether the scores are significantly different from 10 (and, consequently, whether they differ from 10 in the population) using the one-sample Wilcoxon test:

wilcox.test(dat$score, mu = 10) # mu: default value

Wilcoxon signed rank test with continuity correction

data: dat$score
V = 45, p-value = 0.08193
alternative hypothesis: true location is not equal to 10

The output presents several pieces of information, such as the:

title of the test

data

test statistic

p-value

alternative hypothesis

We focus on the p-value to interpret and conclude the test.
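For readers curious where these numbers come from, the following sketch reproduces V and the p-value by hand. It assumes the normal approximation with continuity correction and a tie correction, which is what the "with continuity correction" title of the output suggests R used here, since the data contain ties. The argument exact = FALSE is added only to request that approximation explicitly and avoid a warning about ties.

```r
score <- c(11, 1, 12, 13, 15, 15, 16, 13, 12, 15)
mu0 <- 10

# Signed differences and ranks of their absolute values
d <- score - mu0
d <- d[d != 0]          # zero differences are dropped
n <- length(d)
r <- rank(abs(d))       # tied values share their average rank
V <- sum(r[d > 0])      # sum of ranks of positive differences

# Mean and variance of V under H0, with a correction for ties
ties <- table(r)
mean_V <- n * (n + 1) / 4
var_V <- n * (n + 1) * (2 * n + 1) / 24 - sum(ties^3 - ties) / 48

# Continuity-corrected z statistic and two-sided p-value
z <- (V - mean_V - sign(V - mean_V) * 0.5) / sqrt(var_V)
p_manual <- 2 * min(pnorm(z), pnorm(z, lower.tail = FALSE))

# Same test through wilcox.test(), for comparison
p_builtin <- wilcox.test(score, mu = mu0, exact = FALSE)$p.value
print(c(V = V, p_manual = p_manual, p_builtin = p_builtin))
```

The hand computation should agree with the V and p-value shown in the output above.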

Interpretation:

With a p-value of 0.082, we do not reject the null hypothesis at the 0.05 significance level: we cannot conclude that the scores differ significantly from 10.
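The same decision can be made programmatically by storing the result of wilcox.test() and comparing its p-value to the chosen significance level. This is a minimal sketch: the names alpha, res and decision are illustrative, and exact = FALSE is an added argument that only makes the normal approximation explicit so that no warning about ties is raised.

```r
score <- c(11, 1, 12, 13, 15, 15, 16, 13, 12, 15)
alpha <- 0.05  # significance level

# wilcox.test() returns a list of class "htest"
res <- wilcox.test(score, mu = 10, exact = FALSE)

# Extract the p-value and compare it to alpha
p <- res$p.value
decision <- if (p < alpha) "reject H0" else "do not reject H0"

cat(sprintf("V = %g, p-value = %.5f: %s\n", res$statistic, p, decision))
```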

The test is two-tailed by default. As with the t.test() function, a one-sided test can be requested by passing alternative = "greater" or alternative = "less" to the wilcox.test() function.

For example, if we want to test that the scores are higher than 10:

wilcox.test(dat$score, mu = 10, alternative = "greater") # mu: default value; H1: scores > 10

Wilcoxon signed rank test with continuity correction

data: dat$score
V = 45, p-value = 0.04097
alternative hypothesis: true location is greater than 10

In this case, at the 0.05 significance level, we reject the null hypothesis that scores are equal to 10 in favour of the alternative hypothesis: scores are significantly higher than 10.
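To see how the choice of alternative affects the conclusion, the sketch below runs the test under all three alternatives and collects the p-values. The names alternatives and p_values are illustrative, and exact = FALSE is an added argument used only to make the normal approximation explicit and avoid the warning about ties.

```r
score <- c(11, 1, 12, 13, 15, 15, 16, 13, 12, 15)

# The three values accepted by the alternative argument
alternatives <- c("two.sided", "greater", "less")

# Run the test once per alternative and keep only the p-value
p_values <- sapply(alternatives, function(alt) {
  wilcox.test(score, mu = 10, alternative = alt, exact = FALSE)$p.value
})

print(round(p_values, 5))
```

Here the p-value for alternative = "greater" is half of the two-sided one, which is why the one-sided test rejects at the 0.05 level while the two-sided test does not. The direction of a one-sided test should be chosen before looking at the data.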
