Simple Linear Regression in R

Linear regression answers a simple question:

Can you measure an exact relationship between one target variable and a set of predictors?

The straight-line model is the simplest probabilistic model.

ŷ = b0 + b1x

where:

- ŷ: The estimated response value

- b0: The regression line intercept

- b1: The regression line slope


In this equation, b0 is the intercept: if x equals 0, y equals the intercept (4.77 in the example). b1 is the slope of the line: it tells you how much y changes when x changes by one unit.

To estimate the optimal values of b0 and b1, you use a method called Ordinary Least Squares (OLS).

This method finds the parameters that minimize the sum of squared errors, that is, the squared vertical distances between the predicted and the actual y values. That difference between predicted and actual values is known as the error term.
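The idea of minimizing the sum of squared errors can be sketched in a few lines of R. The toy data and the helper `sse()` below are hypothetical and invented only for illustration; the closed-form estimates used here are the same `cov()`/`var()`/`mean()` formulas applied later in the article.

```r
# Hypothetical toy data, invented purely for illustration
x <- c(1, 2, 3, 4, 5)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1)

# Sum of squared errors for a candidate line y = b0 + b1 * x
sse <- function(b0, b1) {
  predicted <- b0 + b1 * x
  sum((y - predicted)^2)
}

# Closed-form OLS estimates for a single predictor
b1_ols <- cov(x, y) / var(x)
b0_ols <- mean(y) - b1_ols * mean(x)

sse_ols   <- sse(b0_ols, b1_ols)        # error at the OLS solution
sse_other <- sse(b0_ols, b1_ols + 0.5)  # error after nudging the slope

sse_ols < sse_other  # TRUE: the nudged line fits worse than the OLS line
```

Any other choice of intercept or slope should give a larger sum of squared errors than the OLS line, which is what "least squares" refers to.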

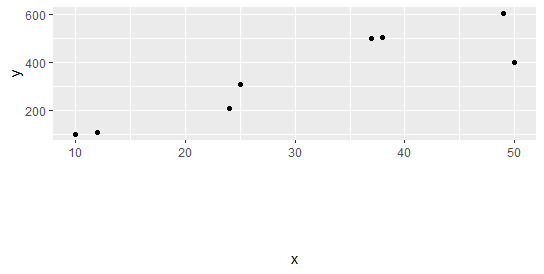

You can plot a scatterplot to see whether a linear relationship between y and x is realistic before estimating the model.


Scatterplot

To illustrate simple linear regression, we will use a very simple dataset: the average heights and weights of American women. The dataset contains 15 observations, entered here directly as two vectors. You want to determine whether height and weight are positively correlated.

    library(ggplot2)
    height <- c(58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72)
    weight <- c(115, 117, 120, 123, 126, 129, 132, 135, 139, 142, 146, 150, 154, 159, 164)
    df <- data.frame(height, weight)
    df

       height weight
    1      58    115
    2      59    117
    3      60    120
    4      61    123
    5      62    126
    6      63    129
    7      64    132
    8      65    135
    9      66    139
    10     67    142
    11     68    146
    12     69    150
    13     70    154
    14     71    159
    15     72    164

    ggplot(df, aes(x = height, y = weight)) + geom_point()

The scatterplot shows a general tendency for y to increase as x increases. In the next step, you will measure how much y increases for each additional unit of x.

In a simple OLS regression, computing b0 and b1 is straightforward. The goal of this tutorial is not to show the derivation; you will only write the formulas: b1 = cov(x, y) / var(x) and b0 = mean(y) - b1 * mean(x).
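As a numeric complement to the scatterplot, the strength of the linear association can be checked with the Pearson correlation. This step is not part of the original walkthrough; it is a small sketch using base R's `cor()` on the same height and weight vectors.

```r
# Same data as in the scatterplot above
height <- c(58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72)
weight <- c(115, 117, 120, 123, 126, 129, 132, 135, 139, 142, 146, 150, 154, 159, 164)

# Pearson correlation: values near +1 suggest a strong positive linear trend
r <- cor(height, weight)
r
```

A value close to 1 is consistent with what the plot suggests, although a correlation alone does not say how much weight changes per inch; that is what the slope estimate is for.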


In R, you can use the cov() and var() functions to estimate the slope, and the mean() function to estimate the intercept.

    beta <- cov(df$height, df$weight) / var(df$height)
    beta
    [1] 3.45

    alpha <- mean(df$weight) - beta * mean(df$height)
    alpha
    [1] -87.51667

Here beta is the slope (b1) and alpha is the intercept (b0). According to the beta coefficient, weight increases by 3.45 pounds for each additional inch of height.
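To make the estimates concrete, they can be plugged back into the line equation to get a predicted weight. The sketch below recomputes both coefficients and predicts the weight for a height of 66 inches; the variable name `predicted_weight` and the choice of 66 are only for illustration.

```r
height <- c(58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72)
weight <- c(115, 117, 120, 123, 126, 129, 132, 135, 139, 142, 146, 150, 154, 159, 164)

# Slope and intercept, computed as in the article
beta  <- cov(height, weight) / var(height)
alpha <- mean(weight) - beta * mean(height)

# Predicted weight for a woman who is 66 inches tall: alpha + beta * 66
predicted_weight <- alpha + beta * 66
predicted_weight  # about 140.18 pounds
```

The observed average weight at 66 inches in the dataset is 139 pounds, so the fitted line is off by roughly one pound at that point.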

Estimating a simple linear equation by hand is not ideal, because regression tasks are usually performed with a large number of predictors.
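As a point of comparison with the manual calculation, base R's `lm()` function fits the same model in a single call. The sketch below assumes the same `height` and `weight` data; the object name `fit` is arbitrary.

```r
df <- data.frame(
  height = c(58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72),
  weight = c(115, 117, 120, 123, 126, 129, 132, 135, 139, 142, 146, 150, 154, 159, 164)
)

# Fit weight as a linear function of height using ordinary least squares
fit <- lm(weight ~ height, data = df)

# Named vector with the intercept and the slope for height
coef(fit)
```

The coefficients should match the alpha and beta values computed by hand. The same formula interface extends to several predictors (for example `y ~ x1 + x2`), which is where the manual approach stops being practical.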
