What is the bias-variance tradeoff?

The bias-variance tradeoff is a central idea in supervised machine learning and predictive modeling, whatever the application.

There are many supervised machine learning models from which to pick when training a predictive model.

These models differ in many ways, but one of the most useful distinctions between them is how much bias and how much variance each one has.

When you evaluate a model’s predictions, you focus on prediction error. Bias and variance are two kinds of prediction error that come up in almost every field where predictive models are used.


In predictive modeling, there is a trade-off between minimizing a model’s bias and minimizing its variance.

Understanding how these prediction errors arise and how they interact helps you build accurate models that avoid both overfitting and underfitting.

What is Bias?

Bias is the error that comes from a model’s limited ability to learn the signal in a dataset. It is the difference between our model’s average prediction and the actual value that we are trying to predict.

When a model’s bias is high, it has not learned the training data well.

Because the model is oversimplified and misses the relationship between the features and the target, this leads to a high error on both the training and the test data.
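As a rough illustration of high bias, the sketch below fits a straight line to data generated from a sine curve. The sine function, the noise level, the sample sizes, and the names `true_f`, `train_mse`, and `test_mse` are assumptions made up for this example, not taken from a real dataset. Because a line cannot follow the curve, the error should stay far above the noise level on both the training and the test data.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_f(x):
    # Hypothetical "true" relationship, chosen only for illustration
    return np.sin(2 * np.pi * x)

noise_sd = 0.1  # assumed standard deviation of the noise

# Simulated training and test sets drawn from the same process
x_train = rng.uniform(0, 1, 200)
y_train = true_f(x_train) + rng.normal(0, noise_sd, 200)
x_test = rng.uniform(0, 1, 200)
y_test = true_f(x_test) + rng.normal(0, noise_sd, 200)

# A degree-1 polynomial is a straight line: too simple for a sine curve
coeffs = np.polyfit(x_train, y_train, deg=1)

train_mse = np.mean((np.polyval(coeffs, x_train) - y_train) ** 2)
test_mse = np.mean((np.polyval(coeffs, x_test) - y_test) ** 2)

print(f"noise variance: {noise_sd ** 2:.3f}")
print(f"train MSE:      {train_mse:.3f}")
print(f"test MSE:       {test_mse:.3f}")
```

If both errors come out well above the noise variance, that is the signature of underfitting: the model is wrong in the same way on data it has seen and on data it has not.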

What is Variance?

Variance is the amount by which a model’s predictions change when it is trained on different subsets of the training data. It tells us how sensitive the model is to the particular sample it was trained on.

When a model has high variance, it has fit the training data closely but struggles to generalize to new or test data.

This is overfitting, and it shows up as a low error on the training data but a high error on the test data.
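One way to see variance directly is to train the same kind of model on many different training sets and watch how much its prediction at a single point moves around. The sketch below does this for a straight-line fit and for a 1-nearest-neighbor predictor. The data-generating function, the noise level, the point `x0`, and the helper names are all assumptions invented for this example.

```python
import numpy as np

rng = np.random.default_rng(1)

def true_f(x):
    # Hypothetical "true" relationship, chosen only for illustration
    return np.sin(2 * np.pi * x)

def sample_training_set(n=30, noise_sd=0.3):
    # Draw a fresh training set from the same underlying process
    x = rng.uniform(0, 1, n)
    y = true_f(x) + rng.normal(0, noise_sd, n)
    return x, y

def predict_line(x, y, x0):
    # Simple model: least-squares straight line
    return np.polyval(np.polyfit(x, y, deg=1), x0)

def predict_1nn(x, y, x0):
    # Flexible model: copy the target of the closest training point
    return y[np.argmin(np.abs(x - x0))]

x0 = 0.3  # the point at which we compare predictions
line_preds, nn_preds = [], []
for _ in range(500):
    x, y = sample_training_set()
    line_preds.append(predict_line(x, y, x0))
    nn_preds.append(predict_1nn(x, y, x0))

var_line = np.var(line_preds)
var_1nn = np.var(nn_preds)

print(f"variance of line predictions at x0: {var_line:.4f}")
print(f"variance of 1-NN predictions at x0: {var_1nn:.4f}")
```

The nearest-neighbor prediction depends on a single noisy training point, so it should vary much more from one training set to the next than the line does.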

So what is the Trade-off?

Finding a happy medium is important when it comes to machine learning models.


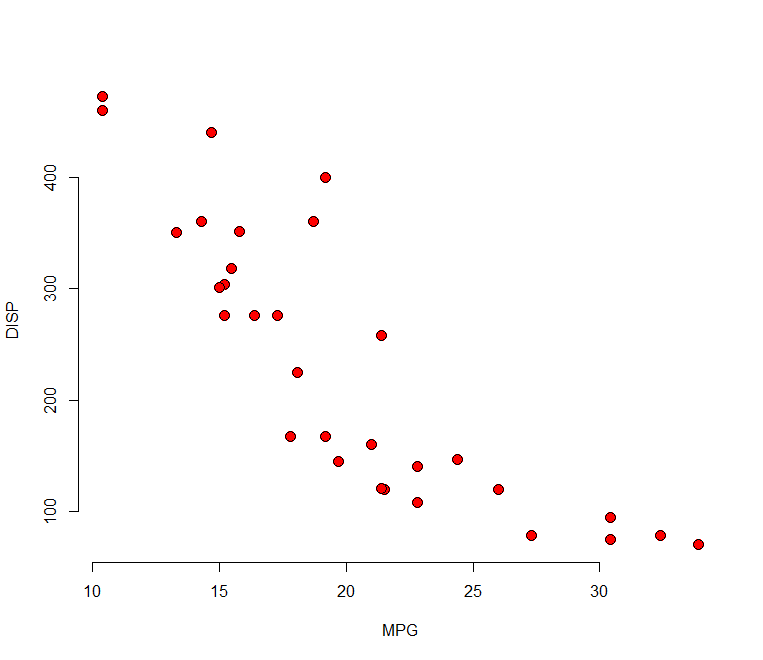

A model that is too simple may have high bias and low variance. A model with too many parameters may have high variance and low bias.

Our goal is to find the point where neither overfitting nor underfitting occurs.

A low-variance model typically has a simple structure, but it runs the risk of high bias.

Linear regression and Naive Bayes are examples. Underfitting occurs when the model cannot capture the signal in the data that it needs to make predictions about future data.

A low-bias model typically has a more flexible, more complex structure, but it runs the risk of high variance.

Nearest Neighbors and Decision Trees are examples. Overfitting occurs when an overly complex model learns the noise in the data rather than the signal.


This is where the trade-off comes in. To reduce the overall error, we must strike a balance between bias and variance. Now let’s explore the total error.
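To make the balance concrete, here is a minimal sketch that fits polynomials of increasing degree to simulated data and compares training and test error, averaged over several random training sets. The sine-shaped data, the noise level, the chosen degrees, and the use of NumPy’s `Chebyshev.fit` as the model are assumptions for illustration only.

```python
import numpy as np
from numpy.polynomial import Chebyshev

rng = np.random.default_rng(2)

def true_f(x):
    # Hypothetical "true" relationship, chosen only for illustration
    return np.sin(2 * np.pi * x)

def sample(n, noise_sd=0.3):
    x = rng.uniform(0, 1, n)
    return x, true_f(x) + rng.normal(0, noise_sd, n)

degrees = [1, 3, 15]  # too simple, moderate, very flexible
n_trials = 50         # average over several training sets
train_mse = {d: 0.0 for d in degrees}
test_mse = {d: 0.0 for d in degrees}

for _ in range(n_trials):
    x_train, y_train = sample(30)
    x_test, y_test = sample(1000)
    for d in degrees:
        # Least-squares polynomial fit of degree d on the interval [0, 1]
        model = Chebyshev.fit(x_train, y_train, d, domain=[0, 1])
        train_mse[d] += np.mean((model(x_train) - y_train) ** 2) / n_trials
        test_mse[d] += np.mean((model(x_test) - y_test) ** 2) / n_trials

for d in degrees:
    print(f"degree {d:2d}: train MSE = {train_mse[d]:.3f}, "
          f"test MSE = {test_mse[d]:.3f}")
```

Training error keeps falling as the degree grows, while test error should fall and then rise again. The degree where test error is lowest is the kind of happy medium the trade-off is about.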

The Math behind it

Let’s begin with a simple formula where “Y” stands for the variable we are trying to predict and “X” stands for the other variables. The relationship between the two can be written as:

$$Y = f(X) + e$$

where “f” is the unknown true relationship and “e” is the error term, assumed to have zero mean.

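The expected squared error at a point x, for a model prediction $\hat{f}(x)$ of the true function $f(x)$, is defined as:

$$\mathrm{Err}(x) = E\left[\left(Y - \hat{f}(x)\right)^2\right]$$

It can be decomposed into:

$$\mathrm{Err}(x) = \left(E[\hat{f}(x)] - f(x)\right)^2 + E\left[\left(\hat{f}(x) - E[\hat{f}(x)]\right)^2\right] + \sigma_e^2$$

where $\sigma_e^2$ is the variance of the error term e.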

In words, this is:

Total Error = Bias² + Variance + Irreducible Error

Irreducible error is the “noise” in the data that no choice of model can eliminate. Data cleansing can reduce some of the noise in the data, but what remains cannot be removed through modeling.


It is crucial to remember that no matter how good your model is, the data will always contain some irreducible error that cannot be eliminated.
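The total error formula can be checked numerically. The sketch below is a small simulation under made-up assumptions (a sine-shaped true function, a known noise level, a straight-line model, and a single evaluation point `x0`). It estimates bias squared and variance from many retrained models, adds the known noise variance, and compares the sum with the squared error measured directly.

```python
import numpy as np

rng = np.random.default_rng(3)

def true_f(x):
    # Hypothetical "true" relationship, chosen only for illustration
    return np.sin(2 * np.pi * x)

noise_sd = 0.3   # assumed standard deviation of the error term e
x0 = 0.25        # the point x at which the error is measured
n_trials = 5000  # number of independent training sets

preds = np.empty(n_trials)
sq_errors = np.empty(n_trials)
for i in range(n_trials):
    # Train a straight-line model on a fresh training set
    x = rng.uniform(0, 1, 30)
    y = true_f(x) + rng.normal(0, noise_sd, 30)
    preds[i] = np.polyval(np.polyfit(x, y, deg=1), x0)
    # Draw a fresh noisy observation at x0 and record the squared error
    y0 = true_f(x0) + rng.normal(0, noise_sd)
    sq_errors[i] = (y0 - preds[i]) ** 2

bias_sq = (preds.mean() - true_f(x0)) ** 2  # (average prediction - truth)^2
variance = preds.var()                      # spread of predictions
irreducible = noise_sd ** 2                 # variance of the error term
total = bias_sq + variance + irreducible
direct = sq_errors.mean()                   # squared error measured directly

print(f"bias^2 = {bias_sq:.3f}, variance = {variance:.3f}, "
      f"irreducible = {irreducible:.3f}")
print(f"sum of the three terms: {total:.3f}")
print(f"direct squared error:   {direct:.3f}")
```

The two totals should agree up to simulation noise. In this setup the bias term should dominate, because a straight line is too simple for the curve.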


Once you strike a good balance between bias and variance, your model is much less likely to overfit or underfit.

Conclusion

You should now have a clearer idea of what bias and variance are as well as how they impact predictive modeling.

With a little bit of math, you should also have a better understanding of the trade-off between the two and why it’s crucial to strike a balance to build a well-performing model that neither overfits nor underfits.