Curve Fitting in R: Quick Guide

In this post, we’ll look at how to use the R programming language to fit a curve to the data in a data frame.

One of the most basic aspects of statistical analysis is curve fitting.

It helps identify patterns in data and lets us predict unobserved values using a regression model or function.

Dataframe Visualization:

Before fitting a curve to the data in a data frame, it helps to visualize the data with a basic scatter plot.

The plot() function in the R language can be used to construct a basic scatter plot.

Syntax:

plot(df$x, df$y)

where,

df: the data frame that holds the data.

x and y: the columns of df plotted on the horizontal and vertical axes.
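As a small illustration of this syntax, the sketch below plots a made-up data frame. The name demo and its values are hypothetical and used only here. The second call uses the formula form of plot(), which names the data frame once instead of repeating it for each column.

```r
# Hypothetical data frame, made up only to illustrate the syntax
demo <- data.frame(x = 1:5, y = c(3, 7, 4, 9, 12))

# Positional form: x-axis values first, y-axis values second
plot(demo$x, demo$y)

# Formula form: response ~ predictor, with the data frame passed via data
plot(y ~ x, data = demo)
```

Both calls should draw the same five points; the only visible difference is the default axis labels.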

Curve fitting in R

In R, you’ll frequently need to find the equation of the curve that best fits a set of data points.

The following example shows how to use the poly() function in R to fit curves to data and how to determine which curve best matches the data.
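For readers new to poly(), the sketch below shows what its raw argument changes. It uses a small made-up dataset (the vectors x and y here are hypothetical). With raw = TRUE the model terms are the plain powers x, x^2, x^3, so the coefficients can be read directly as an equation in x; with the default raw = FALSE, poly() builds orthogonal polynomials, which give different coefficients but the same fitted curve.

```r
# Hypothetical data, made up for illustration
x <- 1:10
y <- c(2, 5, 9, 12, 14, 13, 11, 12, 16, 23)

# raw = TRUE: the model columns are simply x, x^2, x^3
raw_fit <- lm(y ~ poly(x, 3, raw = TRUE))

# Default (raw = FALSE): orthogonal polynomials of the same degree
orth_fit <- lm(y ~ poly(x, 3))

# Writing the powers out with I() is another way to get the raw terms
manual_fit <- lm(y ~ x + I(x^2) + I(x^3))

# The raw and orthogonal fits have different coefficients but the same fitted values
same_curve <- all.equal(unname(fitted(raw_fit)), unname(fitted(orth_fit)))

# The raw fit and the hand-written powers share the same coefficients
same_coefs <- all.equal(unname(coef(raw_fit)), unname(coef(manual_fit)))

print(same_curve)
print(same_coefs)
```

The example that follows uses raw = TRUE, which is convenient when the goal is to read off the equation of the fitted curve.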

Step 1: Gather data and visualize it

Let’s start by creating a fictitious dataset and then a scatterplot to display it:

# make a data frame
df <- data.frame(x=1:15, y=c(2, 10, 20, 25, 20, 10, 19, 15, 19, 10, 6, 20, 31, 31, 40))
df

    x  y
1   1  2
2   2 10
3   3 20
4   4 25
5   5 20
6   6 10
7   7 19
8   8 15
9   9 19
10 10 10
11 11  6
12 12 20
13 13 31
14 14 31
15 15 40

# make an x vs. y scatterplot
plot(df$x, df$y, pch=19, xlab='x', ylab='y')

Step 2: Fit Several Curves

Let’s now fit several polynomial regression models to the data and display each model’s curve in a single plot:

# fit polynomial regression models up to degree 5
fit1 <- lm(y~x, data=df)
fit2 <- lm(y~poly(x,2,raw=TRUE), data=df)
fit3 <- lm(y~poly(x,3,raw=TRUE), data=df)
fit4 <- lm(y~poly(x,4,raw=TRUE), data=df)
fit5 <- lm(y~poly(x,5,raw=TRUE), data=df)

# make an x vs. y scatterplot
plot(df$x, df$y, pch=19, xlab='x', ylab='y')

# define x-axis values
xaxis <- seq(1, 15, length.out=15)

# add each model’s curve to the plot
lines(xaxis, predict(fit1, data.frame(x=xaxis)), col='green')
lines(xaxis, predict(fit2, data.frame(x=xaxis)), col='red')
lines(xaxis, predict(fit3, data.frame(x=xaxis)), col='blue')
lines(xaxis, predict(fit4, data.frame(x=xaxis)), col='pink')
lines(xaxis, predict(fit5, data.frame(x=xaxis)), col='yellow')

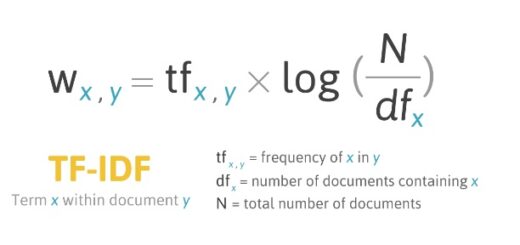
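With only 15 x-axis values, each curve is drawn as straight segments between the observed x positions. The sketch below is one possible variation, not the original post's code: it fits the same five models in a loop, evaluates them on a finer grid so the curves look smooth, and adds a legend to tell the colours apart. The names fits, xgrid, and cols, and the choice of 100 grid points, are assumptions made for this illustration.

```r
# Same data as in the example above
df <- data.frame(x = 1:15,
                 y = c(2, 10, 20, 25, 20, 10, 19, 15, 19, 10, 6, 20, 31, 31, 40))

# Fit one model per degree; degree 1 is the straight-line fit
degrees <- 1:5
fits <- lapply(degrees, function(d) lm(y ~ poly(x, d, raw = TRUE), data = df))

# Finer grid of x values (100 points is an arbitrary choice)
xgrid <- seq(1, 15, length.out = 100)
cols <- c("green", "red", "blue", "pink", "yellow")

# Scatterplot of the data, then one curve per model
plot(df$x, df$y, pch = 19, xlab = "x", ylab = "y")
for (i in seq_along(fits)) {
  lines(xgrid, predict(fits[[i]], data.frame(x = xgrid)), col = cols[i])
}

# Legend mapping each colour to its polynomial degree
legend("topleft", legend = paste("degree", degrees), col = cols, lty = 1)
```

Keeping the models in a list also makes it easy to compute the same statistic for every fit later on.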

The adjusted R-squared of each model can be used to determine which curve best fits the data.

This score indicates how much of the variation in the response variable can be explained by the model’s predictor variables, adjusted for the number of predictor variables.
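To make the adjustment concrete, the sketch below recomputes the adjusted R-squared of the third-degree model by hand using the standard formula 1 - (1 - R^2)(n - 1)/(n - p - 1), where n is the number of observations and p is the number of predictor terms, and compares it with the value reported by summary(). The variable names n, p, r2, and adj_manual are chosen for this illustration.

```r
# Same data as in the example above
df <- data.frame(x = 1:15,
                 y = c(2, 10, 20, 25, 20, 10, 19, 15, 19, 10, 6, 20, 31, 31, 40))

fit3 <- lm(y ~ poly(x, 3, raw = TRUE), data = df)

n  <- nrow(df)                   # number of observations
p  <- length(coef(fit3)) - 1     # predictor terms, excluding the intercept
r2 <- summary(fit3)$r.squared    # ordinary (unadjusted) R-squared

# Penalise R-squared for the number of predictor terms
adj_manual <- 1 - (1 - r2) * (n - 1) / (n - p - 1)

# Compare with the value that summary() reports
print(all.equal(adj_manual, summary(fit3)$adj.r.squared))
```

Because the penalty grows with p, adding a higher-degree term raises the adjusted R-squared only if it improves the fit enough to offset the extra parameter.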

# calculate each model’s adjusted R-squared
summary(fit1)$adj.r.squared
summary(fit2)$adj.r.squared
summary(fit3)$adj.r.squared
summary(fit4)$adj.r.squared
summary(fit5)$adj.r.squared

[1] 0.3078264
[1] 0.3414538
[1] 0.7168905
[1] 0.7362472
[1] 0.711871

We can see from the output that the fourth-degree polynomial has the highest adjusted R-squared, at 0.7362472.
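The same comparison can be done programmatically rather than by reading the printed values. The following is a minimal sketch, assuming the models are refitted into a list; the names fits, adj_r2, and best_degree are hypothetical. Going by the values printed above, it should select the fourth-degree model.

```r
# Same data as in the example above
df <- data.frame(x = 1:15,
                 y = c(2, 10, 20, 25, 20, 10, 19, 15, 19, 10, 6, 20, 31, 31, 40))

# Fit polynomial models of degree 1 to 5
fits <- lapply(1:5, function(d) lm(y ~ poly(x, d, raw = TRUE), data = df))

# Extract the adjusted R-squared of every model into one named vector
adj_r2 <- sapply(fits, function(m) summary(m)$adj.r.squared)
names(adj_r2) <- paste0("degree_", 1:5)
print(adj_r2)

# Position of the largest value, which here equals the polynomial degree
best_degree <- unname(which.max(adj_r2))
print(best_degree)
```

Adjusted R-squared is only one possible criterion; with a dataset this small, the differences between the higher-degree models are modest and should be read with caution.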

Step 3: Visualize the Final Curve

Finally, we can make a scatterplot with the fourth-degree polynomial model’s curve.


# make an x vs. y scatterplot
plot(df$x, df$y, pch=19, xlab='x', ylab='y')

# define x-axis values
xaxis <- seq(1, 15, length.out=15)

# add the fourth-degree polynomial curve to the plot
lines(xaxis, predict(fit4, data.frame(x=xaxis)), col='blue')

The summary() function can also be used to obtain the coefficients of the equation for this curve.

summary(fit4)

Call:
lm(formula = y ~ poly(x, 4, raw = TRUE), data = df)

Residuals:
   Min     1Q Median     3Q    Max
-8.848 -1.974  0.902  2.599  6.836

Coefficients:
                          Estimate Std. Error t value Pr(>|t|)
(Intercept)             -18.717949  10.779889  -1.736   0.1131
poly(x, 4, raw = TRUE)1  24.131130   8.679703   2.780   0.0194 *
poly(x, 4, raw = TRUE)2  -4.832890   2.109754  -2.291   0.0450 *
poly(x, 4, raw = TRUE)3   0.354756   0.195173   1.818   0.0992 .
poly(x, 4, raw = TRUE)4  -0.008147   0.006060  -1.344   0.2085
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 5.283 on 10 degrees of freedom
Multiple R-squared:  0.8116,	Adjusted R-squared:  0.7362
F-statistic: 10.77 on 4 and 10 DF,  p-value: 0.0012

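Using the coefficient estimates in this summary, we can write out the fitted equation and use it to predict the value of the response variable for a given value of x:

y = -18.717949 + 24.131130x - 4.832890x^2 + 0.354756x^3 - 0.008147x^4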
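A minimal sketch of how such a prediction can be computed is given here, both by evaluating the polynomial from the fitted coefficients and by calling predict(). The value x_new = 7.5 is a hypothetical input chosen inside the observed range of x, and the names b, manual, and via_predict are made up for this illustration.

```r
# Same data and model as in the example above
df <- data.frame(x = 1:15,
                 y = c(2, 10, 20, 25, 20, 10, 19, 15, 19, 10, 6, 20, 31, 31, 40))
fit4 <- lm(y ~ poly(x, 4, raw = TRUE), data = df)

# Coefficients in order: intercept, x, x^2, x^3, x^4
b <- unname(coef(fit4))

# Hypothetical new x value, inside the observed range 1 to 15
x_new <- 7.5

# Evaluate the polynomial by hand: b0 + b1*x + b2*x^2 + b3*x^3 + b4*x^4
manual <- sum(b * x_new^(0:4))

# Let predict() do the same calculation from the fitted model
via_predict <- unname(predict(fit4, newdata = data.frame(x = x_new)))

print(manual)
print(via_predict)
```

The two results should agree up to floating-point rounding. Predictions for x values far outside the range 1 to 15 are extrapolations, and high-degree polynomials can behave erratically there.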