Predictive Analytics Models in R

Predictive analytics models are central to data science and business analytics, and they influence a wide range of business operations.

Building such models is usually an iterative process that involves many trials, and the number of iterations depends on the size of the data in terms of observations and variables.

The problems that predictive models most often address fall into two categories, regression and classification. Applicable techniques include least squares regression, logistic regression, tree-based models, neural networks, and support vector machines, among others.

To obtain a firm grasp on the underlying principles, it is recommended to perform all iterations one by one in the model development process.

With some experience, it makes sense to automate more of these model iterations rather than running each one by hand.

A tutorial on how to use the caret package in R

The R package “caret” was created expressly to address this problem and includes a number of built-in generalized functions that may be used with any modeling technique.

Let’s have a look at some of the most helpful “caret” package functions using “mtcars” data and a basic linear regression model.

This article will concentrate on how the various “caret” package functions work together to develop predictive models, rather than on how to analyze model outputs or generate business insights.

Data Loading and Splitting

For this example project, we’ll use R’s built-in dataset “mtcars.” One of the first things to do after loading the data is to separate it into development and validation samples.

The “createDataPartition” function in the “caret” package can be used to easily partition data.

Running ?createDataPartition in the R console displays the syntax and the parameters supported by this function.

Let’s say the development sample has roughly 60% of the observations in the “mtcars” data (p = 0.6 in the call below), while the validation sample contains the remaining observations.

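    library(caret)
    library(datasets)
    data(mtcars)

    # Row indices for the development sample (p = share of rows, drawn at random)
    split <- createDataPartition(y = mtcars$mpg, p = 0.6, list = FALSE)
    dev <- mtcars[split, ]   # development sample
    val <- mtcars[-split, ]  # validation sample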
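A quick sanity check on the split can be helpful before moving on. The sketch below repeats the partition and confirms that the two samples are disjoint and together cover all rows. The set.seed call and its value are assumptions added here so that the random split is repeatable; they are not part of the original script.

```r
library(caret)

data(mtcars)

# Assumption: a fixed seed (value chosen arbitrarily) makes the split repeatable.
set.seed(123)

# Same partition call as in the script above.
split <- createDataPartition(y = mtcars$mpg, p = 0.6, list = FALSE)
dev <- mtcars[split, ]
val <- mtcars[-split, ]

# The two samples should be disjoint and together cover every row.
nrow(dev) + nrow(val) == nrow(mtcars)
length(intersect(rownames(dev), rownames(val))) == 0

# Share of rows in the development sample (close to p, not necessarily exact).
nrow(dev) / nrow(mtcars)

# The outcome distribution should look broadly similar in both samples.
summary(dev$mpg)
summary(val$mpg)
```

For a numeric outcome, createDataPartition samples within percentile groups of y, which is why the realized share is only approximately equal to p on a data set this small.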

Model Construction and Tuning

The “train” function can be used to fit a wide variety of model types, from linear regression to random forests.

It sets up a grid of tuning parameters and can also compute resampling-based performance metrics.

In this case, let’s fit a linear regression model, using least squares to estimate the parameters that best fit the data.

The following R script demonstrates the syntax required to create a single model that includes all variables as predictors.

    # Fit a linear model of mpg on all other variables in the development sample
    lmFit <- train(mpg ~ ., data = dev, method = "lm")
    summary(lmFit)

    Call:
    lm(formula = .outcome ~ ., data = dat)

    Residuals:
        Min      1Q  Median      3Q     Max
    -4.1664 -1.1067 -0.0082  0.6046  4.0031

    Coefficients:
                  Estimate Std. Error t value Pr(>|t|)
    (Intercept) -18.328414  26.478865  -0.692    0.505
    cyl           1.836029   1.436573   1.278    0.230
    disp          0.006855   0.019615   0.350    0.734
    hp            0.005892   0.030608   0.192    0.851
    drat          4.268775   2.606038   1.638    0.132
    wt           -2.257053   2.056306  -1.098    0.298
    qsec          0.625425   0.892208   0.701    0.499
    vs            2.008430   2.399231   0.837    0.422
    am            3.428402   2.702250   1.269    0.233
    gear          2.657064   1.925109   1.380    0.198
    carb         -2.240357   1.262887  -1.774    0.106

    Residual standard error: 2.419 on 10 degrees of freedom
    Multiple R-squared: 0.9169, Adjusted R-squared: 0.8337
    F-statistic: 11.03 on 10 and 10 DF, p-value: 0.0003761

To use a different modeling function, simply change the model name passed to the method parameter of the “train” function.

For example, the method “glm” is used for logistic regression models, “rf” for random forest models, and so on.
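As a rough illustration of swapping the method, the sketch below fits a logistic regression with method = "glm". The choice of “am” as a two-class outcome, the predictors hp and wt, the 5-fold cross-validation, the seed, and the names cars and glmFit are all assumptions made for this example, not part of the original article. On a data set this small, glm may print warnings about fitted probabilities during resampling.

```r
library(caret)

data(mtcars)

# Assumption: "am" (transmission type) serves as a two-class outcome here.
cars <- mtcars
cars$am <- factor(cars$am, levels = c(0, 1), labels = c("automatic", "manual"))

set.seed(123)  # arbitrary seed so the resampling folds are repeatable

# Same train() interface as the linear model; only the outcome and method change.
glmFit <- train(am ~ hp + wt, data = cars, method = "glm",
                trControl = trainControl(method = "cv", number = 5))

glmFit$modelType  # caret infers a classification problem from the factor outcome
glmFit$results    # resampled Accuracy and Kappa
```

Because the outcome is a factor, caret treats the problem as classification and reports Accuracy and Kappa instead of RMSE and R-squared.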

In most cases, model creation will not be completed in a single iteration and will require further trials.

Candidate tuning parameter values for these trials can be built with the “expand.grid” function and passed to “train” through its tuneGrid argument, which is especially handy with complex models such as random forests, neural networks, and support vector machines.
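To make the idea of a tuning grid concrete, here is a minimal sketch that uses k-nearest neighbours (method = "knn"), chosen only because it has a single tuning parameter and needs no package beyond “caret.” The candidate values of k, the centering and scaling step, the 5-fold cross-validation, and the seed are assumptions for illustration.

```r
library(caret)

data(mtcars)
set.seed(123)  # arbitrary seed so the folds are repeatable

# Candidate values for k; the column name must match the model's tuning parameter.
knnGrid <- expand.grid(k = c(3, 5, 7))

# train() fits the model once per grid row within each resample.
knnFit <- train(mpg ~ ., data = mtcars, method = "knn",
                preProcess = c("center", "scale"),
                tuneGrid = knnGrid,
                trControl = trainControl(method = "cv", number = 5))

knnFit$results   # one row of resampled metrics per candidate k
knnFit$bestTune  # the k with the best resampled RMSE
```

For models with several tuning parameters, expand.grid takes one argument per parameter and returns every combination, so the grid grows quickly as values are added.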

Another useful function is “trainControl,” which controls how “train” resamples the data, using approaches such as cross-validation and bootstrapping.

With resampling in place, the complete data set can be used for model development without having to partition it manually.

The script below demonstrates how to utilize cross-validation and how to apply it to loaded data using the “trainControl” function.

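    # 10-fold cross-validation (straight quotes are required; curly quotes are a syntax error in R)
    ctrl <- trainControl(method = "cv", number = 10)
    lmCVFit <- train(mpg ~ ., data = mtcars, method = "lm", trControl = ctrl, metric = "Rsquared")
    summary(lmCVFit)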

    Call:
    lm(formula = .outcome ~ ., data = dat)

    Residuals:
        Min      1Q  Median      3Q     Max
    -3.4506 -1.6044 -0.1196  1.2193  4.6271

    Coefficients:
                Estimate Std. Error t value Pr(>|t|)
    (Intercept) 12.30337   18.71788   0.657   0.5181
    cyl         -0.11144    1.04502  -0.107   0.9161
    disp         0.01334    0.01786   0.747   0.4635
    hp          -0.02148    0.02177  -0.987   0.3350
    drat         0.78711    1.63537   0.481   0.6353
    wt          -3.71530    1.89441  -1.961   0.0633 .
    qsec         0.82104    0.73084   1.123   0.2739
    vs           0.31776    2.10451   0.151   0.8814
    am           2.52023    2.05665   1.225   0.2340
    gear         0.65541    1.49326   0.439   0.6652
    carb        -0.19942    0.82875  -0.241   0.8122
    ---
    Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

    Residual standard error: 2.65 on 21 degrees of freedom
    Multiple R-squared: 0.869, Adjusted R-squared: 0.8066
    F-statistic: 13.93 on 10 and 21 DF, p-value: 3.793e-07
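Note that the summary output describes the final model, which “train” refits on all 32 rows (hence the 21 residual degrees of freedom). The cross-validated metrics are stored separately on the fitted object. The sketch below shows one way to read them; the seed is an assumption added so the folds are repeatable.

```r
library(caret)

data(mtcars)
set.seed(123)  # arbitrary seed so the fold assignment is repeatable

ctrl <- trainControl(method = "cv", number = 10)
lmCVFit <- train(mpg ~ ., data = mtcars, method = "lm",
                 trControl = ctrl, metric = "Rsquared")

lmCVFit$results   # RMSE, Rsquared and MAE averaged over the 10 folds
lmCVFit$resample  # the same metrics for each individual fold
```

With only three or four cars per held-out fold, the per-fold values vary a lot, so the averaged row in results is the more useful summary.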

Model Diagnostics and Scoring

After identifying the final model, the next step is to compute model diagnostics, which vary depending on the modeling technique utilized.

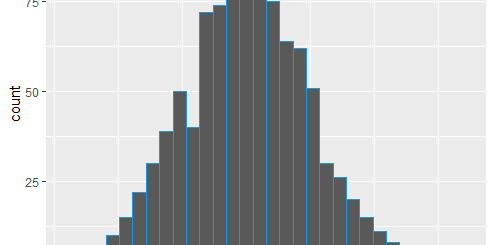

The usual diagnostics for linear regression models include residual plots, multicollinearity checks, and plots of actual vs. predicted values.
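For the multicollinearity check, one option within “caret” is the “findCorrelation” function, which flags predictors that are highly correlated with others. This is only a sketch of one possible check; the 0.9 cutoff and the names predictors, corMatrix, and highCor are assumptions for illustration.

```r
library(caret)

data(mtcars)

# Correlation matrix of the predictors only (the outcome mpg is dropped).
predictors <- mtcars[, setdiff(names(mtcars), "mpg")]
corMatrix <- cor(predictors)

# Names of predictors suggested for removal; the 0.9 cutoff is an assumed threshold.
highCor <- findCorrelation(corMatrix, cutoff = 0.9, names = TRUE)
highCor

# Inspect one strongly related pair directly.
round(corMatrix["cyl", "disp"], 2)
```

Other checks, such as variance inflation factors, serve the same purpose but rely on packages that are not covered here.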

For a logistic regression model, the diagnostics are different and would include the AUC value, a classification table, a gains chart, and so on.
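As an example of a classification table, the sketch below uses the “confusionMatrix” function from “caret” on a small logistic regression. The outcome “am,” the predictors hp and wt, and the object names are assumptions for illustration, and the table is computed on the same data used for fitting, so it is optimistic.

```r
library(caret)

data(mtcars)

# Assumption: "am" (transmission type) is used as a two-class outcome.
cars <- mtcars
cars$am <- factor(cars$am, levels = c(0, 1), labels = c("automatic", "manual"))

# method = "none" skips resampling and fits the model once.
logitFit <- train(am ~ hp + wt, data = cars, method = "glm",
                  trControl = trainControl(method = "none"))

# Predicted classes for the same rows (in-sample).
predictedClass <- predict(logitFit, newdata = cars)

# Cross-tabulate predicted against observed classes.
cm <- confusionMatrix(data = predictedClass, reference = cars$am)
cm$table
cm$overall["Accuracy"]
```

In practice the table would be built on the validation sample or on resampled predictions rather than on the fitting data.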

The script below shows how to plot residuals against actual values and predicted values against actual values.

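    residuals <- resid(lmFit)
    predictedValues <- predict(lmFit)

    # Residuals vs. actual values, with a horizontal reference line at zero
    plot(dev$mpg, residuals)
    abline(0, 0)

    # Predicted vs. actual values
    plot(dev$mpg, predictedValues)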

One of the most useful functions is “varImp,” which displays the importance of the variables included in the final model.

    varImp(lmFit)
    plot(varImp(lmFit))
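It helps to know what the importance score means. For linear models, “caret” bases it on the absolute value of each coefficient’s t-statistic, and “varImp” rescales the scores to a 0 to 100 range by default. The sketch below compares the unscaled scores with the t-statistics from the underlying fit; fitting on the full data with method = "none" and the names lmAll, imp, and tStats are assumptions made to keep the example short and deterministic.

```r
library(caret)

data(mtcars)

# Fit once on the full data; method = "none" skips resampling.
lmAll <- train(mpg ~ ., data = mtcars, method = "lm",
               trControl = trainControl(method = "none"))

# Unscaled importance scores.
imp <- varImp(lmAll, scale = FALSE)$importance

# Absolute t-statistics from the underlying lm fit, intercept dropped.
tStats <- abs(coef(summary(lmAll$finalModel))[-1, "t value"])

# Side-by-side comparison, matched by variable name.
cbind(importance = imp[names(tStats), "Overall"], abs_t = tStats)
```

Other model types use their own importance measures, so scores are not directly comparable across methods.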

Finally, the validation sample or any other new data should be scored using the parameter estimates produced during model construction.
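Scoring could look roughly like the sketch below, which rebuilds the split and the linear model so that it runs on its own. The seed and the name valPred are assumptions, and “postResample” is used here simply as one convenient way to summarize prediction error. With a development sample this small, “train” may print rank-deficiency warnings during its default bootstrap resampling.

```r
library(caret)

data(mtcars)
set.seed(123)  # arbitrary seed so the split is repeatable

split <- createDataPartition(y = mtcars$mpg, p = 0.6, list = FALSE)
dev <- mtcars[split, ]
val <- mtcars[-split, ]

lmFit <- train(mpg ~ ., data = dev, method = "lm")

# Score the held-out validation sample with the fitted model.
valPred <- predict(lmFit, newdata = val)

# RMSE, R-squared and MAE of the predictions against the observed values.
postResample(pred = valPred, obs = val$mpg)
```

A validation RMSE that is much larger than the development-sample residual standard error suggests the model is overfitting, which is plausible here given ten predictors and only about twenty development rows.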

So far, we’ve looked at some of the important functions included in the “caret” package for creating predictive models in R.

As we’ve shown, the package provides a broad set of built-in functions that can be used across many modeling methodologies.